Ants Colony and Multi-Agents
Individual ants are simple insects with limited memory, capable of performing only simple actions. However, an ant colony expresses a complex collective behavior that provides intelligent solutions to problems such as carrying large items, forming bridges and finding the shortest route from the nest to a food source. A single ant has no global knowledge of the task it is performing: its actions are based on local decisions and are usually unpredictable. There are many other examples of emergent behavior in nature, such as colonies of bacteria and bees. The fascinating behavior of ants has inspired researchers to create new approaches based on some of the abilities of ant colonies. The practical example covered in this essay involves finding a path linking two nodes in a graph.
Foraging behavior of ants
Ants use a signaling communication system based on the deposition of pheromone along the path they follow, marking a trail.
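
The trail-reinforcement loop described above can be illustrated with a small simulation of a two-branch ("double bridge") setup. This is a minimal sketch under stated assumptions, not the essay's own model: each ant picks a branch with probability proportional to its pheromone, all trails evaporate slightly, and the chosen branch is reinforced by an amount inversely proportional to its length (a stand-in for shorter trips being completed, and therefore reinforced, more often). The function `double_bridge` and its parameters are hypothetical.

```python
import random

def double_bridge(n_ants=2000, short_len=1.0, long_len=2.0,
                  evaporation=0.01, seed=42):
    """Simulate ants choosing between two branches linking nest and food.

    Assumed model: each ant picks a branch with probability proportional to
    its pheromone level; then every trail evaporates a little and the chosen
    branch is reinforced by 1 / branch length.
    """
    rng = random.Random(seed)
    pheromone = {"short": 1.0, "long": 1.0}  # both trails start equal
    length = {"short": short_len, "long": long_len}
    for _ in range(n_ants):
        total = pheromone["short"] + pheromone["long"]
        branch = "short" if rng.random() < pheromone["short"] / total else "long"
        for b in pheromone:                  # evaporation on every trail
            pheromone[b] *= 1.0 - evaporation
        pheromone[branch] += 1.0 / length[branch]  # deposit on chosen trail
    return pheromone

trails = double_bridge()
share = trails["short"] / (trails["short"] + trails["long"])
print(f"share of pheromone on the short branch: {share:.2f}")
```

Because the short branch gains more pheromone per visit, its share of the trail tends to grow until almost every ant follows it, even though no single ant ever compares the two routes.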

Monitoring and Surveillance Agents
Monitoring and surveillance agents (also known as predictive agents) are a type of intelligent agent software that observes and reports on computer equipment. They are often used to monitor complex computer networks and predict when a crash or some other defect may occur. Another type of monitoring and surveillance agent works on computer networks, keeping track of the configuration of each computer connected to the network. It updates the central configuration database whenever anything on any computer changes, such as the number or type of disk drives. An important task in managing networks lies in prioritizing traffic and shaping bandwidth.
Examples
NASA's Jet Propulsion Laboratory has an agent that monitors inventory, planning, and scheduling equipment ordering to keep costs down. Allstate Insurance has a network with thousands of computers. (Haag, Cummings, McCubbrey, Pinsonneault & Donovan, 2006)
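
The configuration-tracking behavior described above amounts to comparing each machine's current state with the stored record and logging any differences. The sketch below is a minimal illustration under its own assumptions: the `ConfigTracker` class, the host name `ws-01` and fields such as `disk_count` are hypothetical, and a real agent would gather snapshots by polling or instrumenting each computer rather than receiving Python dictionaries.

```python
from typing import Dict, List, Tuple

Config = Dict[str, object]

class ConfigTracker:
    """Hypothetical central configuration database kept current by an agent."""

    def __init__(self) -> None:
        self.db: Dict[str, Config] = {}  # host name -> last known config
        self.log: List[Tuple[str, str, object, object]] = []  # change history

    def report(self, host: str, snapshot: Config) -> List[str]:
        """Compare a fresh snapshot with the stored one and record differences."""
        old = self.db.get(host, {})
        changed = []
        for key in sorted(set(old) | set(snapshot)):
            before, after = old.get(key), snapshot.get(key)
            if before != after:
                self.log.append((host, key, before, after))
                changed.append(key)
        self.db[host] = dict(snapshot)  # store a copy as the new baseline
        return changed

tracker = ConfigTracker()
print(tracker.report("ws-01", {"disk_count": 1, "disk_type": "HDD"}))
print(tracker.report("ws-01", {"disk_count": 2, "disk_type": "HDD"}))
```

Keeping a change log alongside the latest snapshot lets the central database answer both "what does this machine look like now?" and "what has changed?".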

Ant colony optimization algorithms
This algorithm is a member of the ant colony algorithms family, part of the swarm intelligence methods, and constitutes a metaheuristic optimization technique. Initially proposed by Marco Dorigo in 1992 in his PhD thesis,[1][2] the first algorithm aimed to search for an optimal path in a graph, based on the behavior of ants seeking a path between their colony and a source of food. The original idea has since diversified to solve a wider class of numerical problems, and as a result several variants have emerged, drawing on various aspects of the behavior of ants.
Overview
In the natural world, ants (initially) wander randomly, and upon finding food return to their colony while laying down pheromone trails. Over time, however, the pheromone trail starts to evaporate, thus reducing its attractive strength.
Common extensions
Here are some of the most popular variations of ACO algorithms.
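
The path-finding idea, with pheromone deposit and evaporation, can be sketched compactly in the spirit of Dorigo's original Ant System. This is a simplified illustration, not the published algorithm: the parameter names (`alpha`, `beta`, `rho`, `q`) follow common usage in the ACO literature, but the exact update rules, the function `aco_shortest_path` and the small graph are assumptions made for this example.

```python
import random

def aco_shortest_path(graph, source, target, n_ants=20, n_iter=50,
                      alpha=1.0, beta=2.0, rho=0.5, q=1.0, seed=0):
    """Simplified Ant System-style search for a short source->target path.

    graph: dict mapping node -> dict of neighbor -> edge length (undirected).
    alpha/beta weight pheromone vs. heuristic (1/length); rho is the
    evaporation rate; q scales the pheromone each ant deposits.
    """
    rng = random.Random(seed)
    tau = {(u, v): 1.0 for u in graph for v in graph[u]}  # initial pheromone
    best_path, best_len = None, float("inf")
    for _ in range(n_iter):
        tours = []
        for _ in range(n_ants):
            path, node, visited = [source], source, {source}
            while node != target:
                choices = [v for v in graph[node] if v not in visited]
                if not choices:  # dead end: abandon this ant
                    path = None
                    break
                weights = [tau[(node, v)] ** alpha * (1.0 / graph[node][v]) ** beta
                           for v in choices]
                node = rng.choices(choices, weights=weights)[0]
                path.append(node)
                visited.add(node)
            if path is not None:
                length = sum(graph[a][b] for a, b in zip(path, path[1:]))
                tours.append((path, length))
                if length < best_len:
                    best_path, best_len = path, length
        for edge in tau:  # evaporation weakens every trail
            tau[edge] *= 1.0 - rho
        for path, length in tours:  # shorter paths deposit more per edge
            for a, b in zip(path, path[1:]):
                tau[(a, b)] += q / length
                tau[(b, a)] += q / length
    return best_path, best_len

graph = {  # hypothetical undirected graph: node -> {neighbor: edge length}
    "nest": {"a": 2, "b": 1},
    "a": {"nest": 2, "food": 2},
    "b": {"nest": 1, "c": 1},
    "c": {"b": 1, "food": 1},
    "food": {"a": 2, "c": 1},
}
best, best_len = aco_shortest_path(graph, "nest", "food")
print(best, best_len)
```

Here the route through `b` and `c` (total length 3) beats the route through `a` (length 4); evaporation keeps pheromone from piling up on edges that ants stop using.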

Report: 51% of web site traffic is 'non-human' and mostly malicious
Incapsula, a provider of cloud-based security for web sites, released a study today showing that 51% of web site traffic comes from automated software programs, and that the majority of it is potentially damaging: automated exploits from hackers, spies, scrapers, and spammers. The company says that typically only 49% of a web site's visitors are actual humans, and that the non-human traffic is mostly invisible because analytics software does not show it. This means that web sites are carrying a large hidden cost burden in terms of bandwidth, increased risk of business disruption, and worse. Here's a breakdown of an average web site's traffic:
- 5% is hacking tools searching for an unpatched or new vulnerability in a web site.
- 5% is scrapers.
- 2% is automated comment spammers.
- 19% is from "spies" collecting competitive intelligence.
- 20% is from search engines, which is non-human but benign traffic.
- 49% is from people browsing the Internet.
I spoke with Marc Gaffan, co-founder of Incapsula.
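
The percentages in the breakdown can be cross-checked with a few lines of arithmetic. Treating hacking tools, scrapers, comment spammers and "spies" as the potentially damaging categories (as the report's summary does), they account for 31 of the 51 non-human percentage points, roughly 61%, which is what supports the "mostly malicious" headline. The category labels in the code are shorthand chosen for this sketch.

```python
# Percentages of an average site's traffic, as given in the breakdown.
breakdown = {
    "hacking tools": 5,
    "scrapers": 5,
    "comment spammers": 2,
    "spies": 19,
    "search engines": 20,
    "humans": 49,
}
# Categories the report describes as potentially damaging.
damaging = ["hacking tools", "scrapers", "comment spammers", "spies"]

total = sum(breakdown.values())
non_human = total - breakdown["humans"]
harmful = sum(breakdown[k] for k in damaging)
print(total, non_human, harmful)  # 100 51 31
print(f"{harmful / non_human:.0%} of non-human traffic is potentially damaging")  # 61%
```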

Emergent Intelligence in Competitive Multi-Agent Systems
by Sander M. Bohte, Han La Poutré
Getting systems with many independent participants to behave properly is a great challenge. At CWI, the Computational Intelligence and Multi-Agent Games research group applies principles from both the economic field of mechanism design and state-of-the-art machine-learning techniques to develop systems in which 'proper' behaviour emerges from the selfish actions of their components. With the rapid transition of the real economy to electronic interactions and markets, applications are numerous: from automatic negotiation of bundles of personalized news, to efficient routing of trucks or targeted advertisement. In an economic setting, an individual (or agent) is assumed to behave selfishly: agents compete with each other to acquire the most resources (utility) from their interactions. Clearly, software agents in a multi-agent system must be intelligent and adaptive. In a similar vein, we considered the dynamic scheduling of trucking routes and freight.
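
The excerpt does not say which mechanisms the CWI group designs, so as a generic illustration of mechanism design (not the authors' method), here is the textbook sealed-bid second-price (Vickrey) auction. The winner pays the second-highest bid, so a selfish agent cannot raise its utility by bidding anything other than its true value. The function names and example values are hypothetical.

```python
from typing import Dict, Tuple

def second_price_auction(bids: Dict[str, float]) -> Tuple[str, float]:
    """Sealed-bid second-price auction: the highest bidder wins
    but pays the second-highest bid."""
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    ranked = sorted(bids.items(), key=lambda kv: kv[1], reverse=True)
    winner = ranked[0][0]
    price = ranked[1][1]
    return winner, price

def utility(agent: str, value: float, bids: Dict[str, float]) -> float:
    """Selfish agent's payoff: its private value minus the price if it wins, else 0."""
    winner, price = second_price_auction(bids)
    return value - price if winner == agent else 0.0

truthful = {"a": 10, "b": 7, "c": 4}        # bids equal private values
print(utility("a", 10, truthful))            # truthful bid
print(utility("a", 10, {**truthful, "a": 6}))   # underbidding loses the item
print(utility("a", 10, {**truthful, "a": 15}))  # overbidding gains nothing
```

Agent `a` does no better by shading or inflating its bid, which is the sense in which the rules of the mechanism, rather than any goodwill of the agents, produce the desired behaviour.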

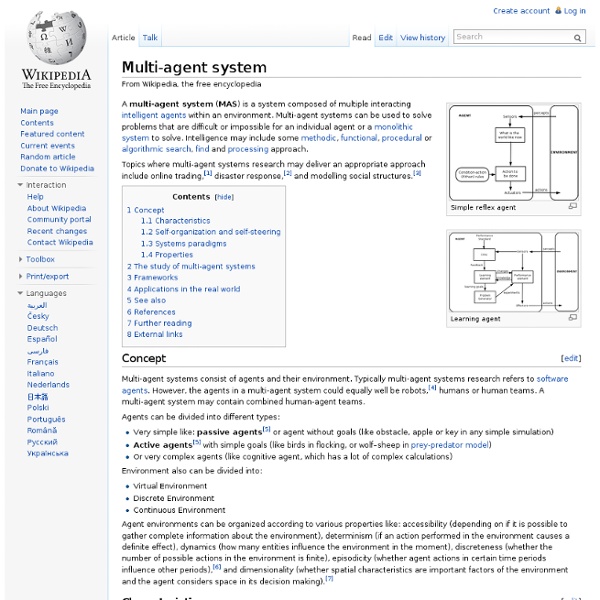

Intelligent agent
Intelligent agents are often described schematically as an abstract functional system similar to a computer program. For this reason, intelligent agents are sometimes called abstract intelligent agents (AIA) to distinguish them from their real-world implementations as computer systems, biological systems, or organizations. Some definitions of intelligent agents emphasize their autonomy, and so prefer the term autonomous intelligent agents. Still others (notably Russell & Norvig (2003)) consider goal-directed behavior the essence of intelligence and so prefer a term borrowed from economics, "rational agent". Intelligent agents are also closely related to software agents (autonomous computer programs that carry out tasks on behalf of users).
A variety of definitions
Intelligent agents have been defined in many different ways.[3] According to Nikola Kasabov,[4] IA systems should exhibit the following characteristics:
Structure of agents
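
An agent viewed as an abstract function from percepts to actions can be written down directly. The sketch below is a simple reflex agent modelled on the two-square vacuum world often used to introduce agents in Russell & Norvig's textbook; the percept format, the action names and the `run` loop are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Percept = Tuple[str, str]  # (location, status), e.g. ("A", "Dirty")

def reflex_vacuum_agent(percept: Percept) -> str:
    """Agent function: maps the current percept directly to an action
    via condition-action rules, with no memory of earlier percepts."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    return "Left"

def run(world: Dict[str, str], location: str = "A", steps: int = 4) -> List[str]:
    """Hypothetical two-square environment that feeds percepts to the agent."""
    actions = []
    for _ in range(steps):
        action = reflex_vacuum_agent((location, world[location]))
        actions.append(action)
        if action == "Suck":
            world[location] = "Clean"
        elif action == "Right":
            location = "B"
        else:
            location = "A"
    return actions

print(run({"A": "Dirty", "B": "Dirty"}))  # ['Suck', 'Right', 'Suck', 'Left']
```

Separating the agent function from the environment loop mirrors the distinction above between the abstract agent and its real-world implementation.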

Intelligence as an Emergent Behavior or, The Songs of Eden
Published on Monday, March 16, 01998. Written by Danny Hillis for Daedalus.
Sometimes a system with many simple components will exhibit a behavior of the whole that seems more organized than the behavior of the individual parts. Consider the intricate structure of a snowflake. Symmetric shapes within the crystals of ice repeat in threes and sixes, with patterns recurring from place to place and within themselves at different scales. It would be very convenient if intelligence were an emergent behavior of randomly connected neurons in the same sense that snowflakes and whirlpools are the emergent behaviors of water molecules. This is a seductive idea, since it allows for the possibility of constructing intelligence without first understanding it. There has been a renewal of interest in emergent behavior in the form of neural networks and connectionist models, spin glasses and cellular automata, and evolutionary models.
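
Cellular automata, mentioned at the end of the passage, give a compact demonstration of the idea: every cell follows the same simple local rule, yet the whole grid develops structure that repeats at different scales, loosely echoing the snowflake example. This minimal sketch runs the elementary automaton known as Rule 90 from a single live cell, which draws a Sierpinski-triangle pattern; the choice of rule, the grid width and the fixed zero boundaries are assumptions for illustration, not anything from Hillis's essay.

```python
def step(cells, rule=90):
    """One update of an elementary cellular automaton with fixed 0 boundaries.

    Each cell's next state depends only on itself and its two neighbors;
    the 3-cell neighborhood (0..7) selects one bit of the rule number."""
    padded = [0] + cells + [0]
    return [(rule >> (padded[i - 1] << 2 | padded[i] << 1 | padded[i + 1])) & 1
            for i in range(1, len(padded) - 1)]

def run(width=31, generations=16, rule=90):
    """Start from a single live cell in the middle and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(generations - 1):
        cells = step(cells, rule)
        history.append(cells)
    return history

for row in run():
    print("".join("#" if c else "." for c in row))
```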

Can Creativity be Automated?
In 2004, New Zealander Ben Novak was just a guy with a couple of guitars and distant dreams of becoming a pop star. A year later one of Novak’s songs, “Turn Your Car Around,” had invaded Europe’s radio stations, becoming a top-10 hit. Novak had to beat long odds to get discovered. The process record labels use to find new talent (A&R, for “artists and repertoire”) is fickle and hard to explain; it rarely admits unknowns like him. So Novak got into the music business through a back door that had been opened not by a human, but by an algorithm tasked with finding hit songs. It’s widely accepted that creativity can’t be copied by machines. But now we’re learning that for some creative work, that simply isn’t true. The algorithm that kindled Novak’s music career belongs to Music X-Ray, whose founder, Mike McCready, has spent the last 10 years developing technology to detect musical hooks that are destined for the charts. Algorithms won’t only do work that requires a critical eye.

Emergent Intelligence of Networked Agents
This book contains the latest research on emergent intelligence of networked agents. The study of intelligence emerging from interactions among agents has become popular, and such studies recognize that the network structure of the agents plays an important role. The current state of the art in agent-based modeling tends to model a mass of agents, each of which can express a series of states as a result of the network structure in which it is embedded. This book is based on communications given at the Workshop on Emergent Intelligence of Networked Agents (WEIN 06) at the Fifth International Joint Conference on Autonomous Agents and Multi-agent Systems (AAMAS 2006), which was held at Future University, Hakodate, Japan, from May 8 to 12, 2006.
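
The dependence of agents' states on the network they are embedded in can be shown with a toy model. The sketch assumes a simple majority-vote rule (each agent adopts the state held by most of its closed neighborhood, itself included, and keeps its own state on a tie); the rule, the agent names and both networks are hypothetical and not taken from the book. It runs the same five agents from the same initial states on a ring and on a complete graph.

```python
from typing import Dict, List

def majority_step(states: Dict[str, int],
                  neighbors: Dict[str, List[str]]) -> Dict[str, int]:
    """Synchronous update: each agent takes the majority state (0 or 1) of
    itself plus its neighbors, keeping its current state on a tie."""
    new = {}
    for agent, nbrs in neighbors.items():
        group = [agent] + nbrs
        ones = sum(states[n] for n in group)
        if 2 * ones > len(group):
            new[agent] = 1
        elif 2 * ones < len(group):
            new[agent] = 0
        else:
            new[agent] = states[agent]
    return new

def run_until_stable(states, neighbors, max_steps=20):
    """Apply majority_step until nothing changes (or max_steps is reached)."""
    for _ in range(max_steps):
        nxt = majority_step(states, neighbors)
        if nxt == states:
            break
        states = nxt
    return states

agents = ["a", "b", "c", "d", "e"]
ring = {agents[i]: [agents[i - 1], agents[(i + 1) % 5]] for i in range(5)}
complete = {x: [y for y in agents if y != x] for x in agents}
initial = {"a": 1, "b": 1, "c": 0, "d": 0, "e": 0}

print(run_until_stable(initial, ring))      # the cluster of 1s survives
print(run_until_stable(initial, complete))  # everyone converges to 0
```

On the ring, the two agents holding state 1 support each other and their local cluster persists; on the complete graph, every agent sees the global 2-to-3 split and the whole population converges to 0.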

When Humans Serve as Machines for the Machine
By Rémi Sussan, November 17, 2010
Everyone agrees that "infobesity" (information overload) will probably be one of the major problems of the century now beginning. And what is already difficult for everyone becomes a vital question for certain professions, such as the military, intelligence agents and others engaged in high-risk activities. We know that artificial intelligence is, for now, far from being able to help them. On the other hand, we can hope to rely on human-machine hybridization techniques. It is from this premise that Paul Sajda, director of the Laboratory for Intelligent Imaging and Neural Computing at Columbia University, developed a system that can rapidly pick out, from a mass of photographs, those that match certain predefined criteria. The technique is fairly simple. Image: still from the film available on IEEE.tv. Via Next Big Future.

Luca Gambardella: Ant Colony Optimization
by Luca Maria Gambardella and Marco Dorigo
Ant Colony Optimization: ant-inspired systems for combinatorial optimization
The ant colony optimization metaheuristic (ACO; Dorigo, Di Caro and Gambardella 1999) is a population-based approach to solving combinatorial optimization problems.
ACO: Ant Colony Optimization Dorigo M., G.
