OpenCV History
The project's original goals were to:
- Advance vision research by providing not only open but also optimized code for basic vision infrastructure. No more reinventing the wheel.
- Disseminate vision knowledge by providing a common infrastructure that developers could build on, so that code would be more readily readable and transferable.
- Advance vision-based commercial applications by making portable, performance-optimized code available for free, with a license that did not require applications built on it to be open or free themselves.
The first alpha version of OpenCV was released to the public at the IEEE Conference on Computer Vision and Pattern Recognition in 2000, and five betas were released between 2001 and 2005. The second major release of OpenCV came in October 2009. In August 2012, support for OpenCV was taken over by a non-profit foundation, OpenCV.org, which maintains a developer site[2] and a user site.[3]

OpenCV::detect()
Detects objects in the current image using the current cascade description. The method finds rectangular regions in the image that are likely to contain objects the cascade has been trained to recognize, and returns them as a sequence of rectangles. The default parameters (scale=1.1, min_neighbors=3, flags=0) are tuned for accurate but slow detection. For faster operation on real-time images, the preferred settings are scale=1.2, min_neighbors=2, flags=HAAR_DO_CANNY_PRUNING, min_size=
Mode of operation flags:
- One flag makes the function downscale the image for each scale factor used, rather than "zoom" the feature coordinates in the classifier cascade.
- If HAAR_DO_CANNY_PRUNING is set, the function uses the Canny edge detector to reject image regions that contain too few or too many edges and therefore cannot contain the searched object.
- Another flag makes the function find only the largest object (if any) in the image.
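Much of the speed difference between scale=1.1 and scale=1.2 comes from how many window sizes the detector has to try. The sketch below is a simplified model, not the library's implementation: it assumes a hypothetical 24x24 minimum window that grows by the scale factor until it no longer fits a 640x480 image, and the function name `window_sizes` is made up for illustration.

```python
def window_sizes(image_w, image_h, min_w, min_h, scale):
    """Return the detection window sizes visited for one scale factor.

    Simplified model (assumption): the window starts at (min_w, min_h) and
    is enlarged by `scale` at each step until it no longer fits the image.
    """
    if scale <= 1.0:
        raise ValueError("scale must be greater than 1")
    sizes = []
    factor = 1.0
    while True:
        w = round(min_w * factor)
        h = round(min_h * factor)
        if w > image_w or h > image_h:
            break
        sizes.append((w, h))
        factor *= scale
    return sizes


if __name__ == "__main__":
    # Hypothetical setup: 640x480 frame, 24x24 minimum window.
    for scale in (1.1, 1.2):
        sizes = window_sizes(640, 480, 24, 24, scale)
        print(f"scale={scale}: {len(sizes)} window sizes, largest {sizes[-1]}")
```

Under these assumptions scale=1.1 visits 32 window sizes and scale=1.2 visits 17, so each frame needs roughly half as many passes over the image.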

diewald_CV_kit
A library by Thomas Diewald for the Processing programming environment. Last update: 13/12/2012.
This library contains tools used in the field of computer vision. It is not a wrapper of OpenCV or any other library, so some features may be missing (they may be implemented in the future). It is designed to be fast enough for real-time applications (webcam tracking, Kinect tracking, ...). It also works well in combination with the Kinect library dlibs.freenect.
The examples that come with the library demonstrate:
- Kinect 3D/2D tracking
- simple marker tracking
- image-blob tracking
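Image-blob tracking usually starts by grouping foreground pixels into connected regions. Below is a generic sketch of 4-connected component labeling on a binary image stored as nested lists. It is not taken from diewald_CV_kit, whose internal algorithm is not described here, and the name `label_blobs` is hypothetical.

```python
from collections import deque


def label_blobs(mask):
    """Label 4-connected foreground regions in a binary image.

    `mask` is a list of rows containing 0 (background) or 1 (foreground).
    Returns (labels, blobs): a label image of the same shape (0 = background)
    and a list of blobs, each with its area and bounding box
    (min_x, min_y, max_x, max_y).
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    labels = [[0] * width for _ in range(height)]
    blobs = []
    for y in range(height):
        for x in range(width):
            if mask[y][x] and not labels[y][x]:
                label = len(blobs) + 1
                labels[y][x] = label
                queue = deque([(x, y)])
                count, min_x, min_y, max_x, max_y = 0, x, y, x, y
                # Breadth-first flood fill from the seed pixel.
                while queue:
                    cx, cy = queue.popleft()
                    count += 1
                    min_x, max_x = min(min_x, cx), max(max_x, cx)
                    min_y, max_y = min(min_y, cy), max(max_y, cy)
                    for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                        inside = 0 <= nx < width and 0 <= ny < height
                        if inside and mask[ny][nx] and not labels[ny][nx]:
                            labels[ny][nx] = label
                            queue.append((nx, ny))
                blobs.append({"label": label, "area": count,
                              "bbox": (min_x, min_y, max_x, max_y)})
    return labels, blobs


if __name__ == "__main__":
    mask = [
        [1, 1, 0, 0, 0],
        [1, 0, 0, 1, 1],
        [0, 0, 0, 1, 1],
    ]
    _, blobs = label_blobs(mask)
    for blob in blobs:
        print(blob)
```

To track blobs over time, the blobs of consecutive frames would then be matched, for example by nearest bounding-box centre; that step is left out of this sketch.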

vision_opencv
The vision_opencv stack provides packaging of the popular OpenCV library for ROS. It provides several packages:
- cv_bridge: a bridge between ROS messages and OpenCV.
- image_geometry: a collection of methods for dealing with image and pixel geometry.
To use ROS with OpenCV, see the cv_bridge package. As of ROS Electric, OpenCV is a system dependency.
Using OpenCV in your ROS code
Add a dependency on opencv2 and find_package it in your CMakeLists.txt, as you would for any third-party package:
Report an OpenCV-specific bug
For issues specific to OpenCV:
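cv_bridge's job is to turn a ROS image message into an OpenCV image and back. To show what that involves, here is a plain-Python sketch that unpacks the raw fields of a sensor_msgs/Image message (height, width, encoding, step, data) for the 8-bit `mono8` and `bgr8` encodings into nested lists. It is not the cv_bridge API (real code should use cv_bridge, which returns a NumPy array); the dict standing in for the message and the function name `image_msg_to_rows` are assumptions for illustration.

```python
# Bytes per pixel for the two 8-bit encodings handled in this sketch.
CHANNELS = {"mono8": 1, "bgr8": 3}


def image_msg_to_rows(msg):
    """Unpack a sensor_msgs/Image-like dict into rows of pixels.

    mono8 pixels become ints; bgr8 pixels become (b, g, r) tuples.
    `step` is the length of one row in bytes and may include padding.
    Byte order (is_bigendian) does not matter for 8-bit data.
    """
    channels = CHANNELS.get(msg["encoding"])
    if channels is None:
        raise ValueError(f"unsupported encoding: {msg['encoding']}")
    width, height, step, data = msg["width"], msg["height"], msg["step"], msg["data"]
    if step < width * channels or len(data) < step * height:
        raise ValueError("data too short for the declared size")
    rows = []
    for y in range(height):
        row_bytes = data[y * step : y * step + width * channels]  # drop row padding
        if channels == 1:
            rows.append(list(row_bytes))
        else:
            rows.append([tuple(row_bytes[i : i + 3]) for i in range(0, len(row_bytes), 3)])
    return rows


if __name__ == "__main__":
    # 2x2 bgr8 image with one padding byte at the end of each row (step = 7).
    msg = {
        "height": 2, "width": 2, "encoding": "bgr8", "step": 7,
        "data": bytes([255, 0, 0, 0, 255, 0, 99,
                       0, 0, 255, 10, 20, 30, 99]),
    }
    print(image_msg_to_rows(msg))
```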

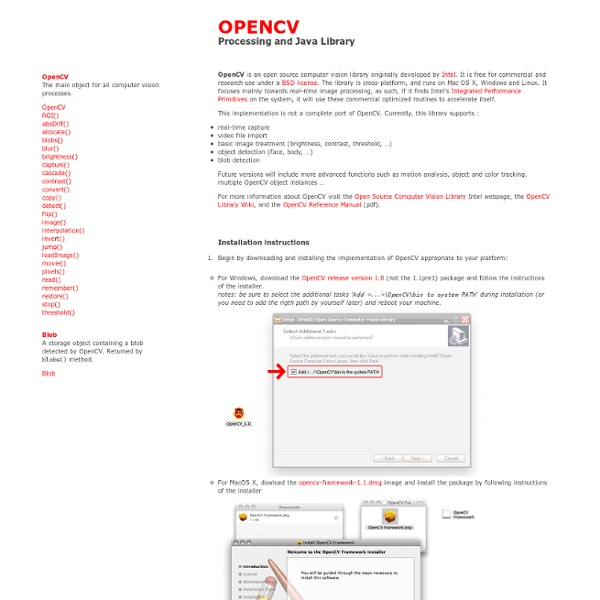

OpenCV 2 in Processing | codeanticode
Xavier Hinault recently released JavacvPro, a new computer vision library for Processing based on JavaCV and OpenCV 2. This is a great contribution to the community, since development of the original OpenCV library for Processing appears to have stalled without moving beyond OpenCV 1.0 support. JavacvPro is built on the very solid JavaCV wrappers by Samuel Audet, which provide access to the latest version of OpenCV (2.3.1 at the time of writing) and also to other computer vision frameworks such as OpenKinect and ARToolKit. The basic requirement for using JavacvPro is an installation of OpenCV 2.3.1.
Windows installation from pre-compiled binaries:
Linux installation from source:
Mac OS X installation (also from source):

Announcing OpenCV for Processing
I’m proud to announce the release of OpenCV for Processing, a computer vision library for Processing based on OpenCV. You can download it here. The goal of the library is to make it incredibly easy to get started with computer vision, to make it easy to experiment with the most common computer vision tools, and to make the full power of OpenCV’s API available to more advanced users. OpenCV for Processing is based on the official OpenCV Java bindings.
The library ships with 20+ examples demonstrating its use for everything from face detection (code: FaceDetection.pde) to contour finding (code: FindContours.pde), background subtraction (code: BackgroundSubtraction.pde), depth from stereo (code: DepthFromStereo.pde), and AR marker detection (code: MarkerDetection.pde).
So far, OpenCV for Processing has been tested on 64-bit Mac OS X (specifically Lion and Mountain Lion), 32-bit Windows 7, and 64- and 32-bit Linux (thanks to Arturo Castro).
A Book!
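Background subtraction, one of the techniques covered by the examples, can be illustrated with a running-average background model: each new grayscale frame is compared with a slowly updated average, and pixels that differ by more than a threshold are marked as foreground. This is a generic sketch on nested lists of grayscale values. It does not reproduce BackgroundSubtraction.pde or OpenCV's own background subtractors, and the `alpha` and `threshold` defaults are arbitrary assumptions.

```python
class RunningAverageBackground:
    """Minimal running-average background model for grayscale frames."""

    def __init__(self, alpha=0.05, threshold=30):
        self.alpha = alpha          # how quickly the background adapts (0..1)
        self.threshold = threshold  # minimum difference counted as foreground
        self.background = None      # per-pixel float averages

    def apply(self, frame):
        """Return a 0/1 foreground mask for `frame` and update the model."""
        if self.background is None:
            # First frame: adopt it as the initial background; nothing is foreground.
            self.background = [[float(v) for v in row] for row in frame]
            return [[0] * len(row) for row in frame]
        mask = []
        for bg_row, row in zip(self.background, frame):
            mask_row = []
            for x, value in enumerate(row):
                mask_row.append(1 if abs(value - bg_row[x]) > self.threshold else 0)
                # Blend the new value into the background estimate.
                bg_row[x] = (1 - self.alpha) * bg_row[x] + self.alpha * value
            mask.append(mask_row)
        return mask


if __name__ == "__main__":
    model = RunningAverageBackground()
    empty = [[10, 10, 10], [10, 10, 10]]
    with_object = [[10, 200, 10], [10, 10, 10]]
    model.apply(empty)
    print(model.apply(with_object))  # [[0, 1, 0], [0, 0, 0]]
```

This simple version blends foreground pixels into the background as well, so an object that stops moving gradually fades into the background model.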

Image processing: what is the most accurate way of determining an object's color?
Identifying objects based on color (RGB) | IMAGE PROCESSING
Here I used a bitmap image containing shapes filled with the primary colors red, green, and blue. The objects in the image are separated based on their colors. The image is an RGB image, which is a three-dimensional matrix. Let's use (i, j) for the pixel position in image A. A(i, j, 1) holds the red value, A(i, j, 2) the green value, and A(i, j, 3) the blue value. To separate the red objects, check whether A(i, j, 1) is positive while A(i, j, 2) and A(i, j, 3) are zero. The other colors are separated in the same way:

    A = imread('shapes.bmp');
    figure, imshow(A); title('Original image');

    % Preallocate output images the same size as A (all black)
    Red   = zeros(size(A));
    Green = zeros(size(A));
    Blue  = zeros(size(A));

    for i = 1:size(A,1)
        for j = 1:size(A,2)
            % Red object: red channel positive, green and blue zero
            if A(i,j,1) > 0 && A(i,j,2) == 0 && A(i,j,3) == 0
                Red(i,j,:) = A(i,j,:);
            end
            % Green object: green channel positive, red and blue zero
            if A(i,j,2) > 0 && A(i,j,1) == 0 && A(i,j,3) == 0
                Green(i,j,:) = A(i,j,:);
            end
            % Blue object: blue channel positive, red and green zero
            if A(i,j,3) > 0 && A(i,j,1) == 0 && A(i,j,2) == 0
                Blue(i,j,:) = A(i,j,:);
            end
        end
    end

    % Convert back to uint8 so imshow interprets the values as 0-255
    Red = uint8(Red); Green = uint8(Green); Blue = uint8(Blue);
    figure, imshow(Red); title('Red objects');
    figure, imshow(Green); title('Green objects');
    figure, imshow(Blue); title('Blue objects');
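The rule "one channel positive, the other two zero" works for a synthetic bitmap filled with pure primaries, but in photographs or compressed images the other channels are rarely exactly zero. A more tolerant variant, sketched below for 8-bit RGB pixels, labels a pixel by its dominant channel only when that channel exceeds both others by a margin. The `margin` and `min_value` defaults and both function names are assumptions for illustration and would need tuning per image.

```python
def classify_pixel(r, g, b, margin=60, min_value=80):
    """Return 'red', 'green', 'blue', or None for an 8-bit RGB pixel.

    A pixel is assigned to a colour only if that channel is at least
    `min_value` and exceeds each of the other two channels by `margin`.
    """
    channels = {"red": r, "green": g, "blue": b}
    name, value = max(channels.items(), key=lambda item: item[1])
    others = [v for k, v in channels.items() if k != name]
    if value >= min_value and all(value - v >= margin for v in others):
        return name
    return None


def split_by_color(image):
    """Group (i, j) pixel positions of an RGB image (rows of (r, g, b)) by colour."""
    groups = {"red": [], "green": [], "blue": []}
    for i, row in enumerate(image):
        for j, (r, g, b) in enumerate(row):
            label = classify_pixel(r, g, b)
            if label is not None:
                groups[label].append((i, j))
    return groups


if __name__ == "__main__":
    image = [
        [(250, 12, 8), (255, 255, 255)],
        [(20, 230, 15), (5, 10, 240)],
    ]
    print(split_by_color(image))
```

White and grey pixels fall through as None because no channel dominates, which is also how a white background is ignored.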

Behavior-based Robotics: Perception
A major tenet of the behavior-based approach is action-oriented perception, in which the task frames the perceptual input: perception without the context of action is meaningless. At times it is impossible to produce accurate data, even about the environmental information deemed crucial. Active perception allows perceptual processes to control themselves. Using such behavior-based sensory strategies, researchers at Carnegie Mellon have created agents capable of driving real cars across America at speeds above 100 km/h.
RoboCup Software - GT RoboJackets
RoboCup Software encompasses all parts of the system that run on a host machine (not on the robots). The software system comprises both the components we play soccer and compete with and a kind of SDK that lets new components be written quickly for idea and system testing. We currently host our software project on GitHub, so check it out. Its components are:
- soccer: everything after vision and before radio happens here.
- simulator: simulates a game environment, including vision and radio.
- sslrefbox: the standard SSL referee box for issuing referee commands to all teams.
- radio: radio communication with the on-field robots.
- log_viewer: playback of logged data.
- simple_logger: records vision and referee data just like soccer does, but with no processing.
- convert_tcpdump: reads vision and referee packets from a tcpdump file and generates a log file.
RoboCup Setup: a step-by-step guide to setting up your new Linux system with our code base.
Coding standard
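The simple_logger and log_viewer components record vision and referee packets and play them back later. The sketch below shows one possible way to structure such a log: each packet is stored with a timestamp and a source tag and is length-prefixed, so records can be read back in order. The record layout, the tag values, and the names `write_log` and `read_log` are hypothetical and are not the RoboJackets log format.

```python
import struct

# Hypothetical source tags for the two packet types the logger records.
VISION, REFEREE = 1, 2

# Record header: timestamp in microseconds (uint64), source tag (uint8),
# payload length (uint32), all little-endian with no padding.
HEADER = struct.Struct("<QBI")


def write_log(records):
    """Serialise (timestamp_us, source, payload) tuples into one bytes log."""
    out = bytearray()
    for timestamp_us, source, payload in records:
        out += HEADER.pack(timestamp_us, source, len(payload))
        out += payload
    return bytes(out)


def read_log(log):
    """Yield (timestamp_us, source, payload) tuples in recorded order."""
    offset = 0
    while offset < len(log):
        timestamp_us, source, length = HEADER.unpack_from(log, offset)
        offset += HEADER.size
        payload = log[offset : offset + length]
        if len(payload) != length:
            raise ValueError("truncated record at end of log")
        offset += length
        yield timestamp_us, source, payload


if __name__ == "__main__":
    log = write_log([
        (1_000, VISION, b"frame-1"),
        (1_500, REFEREE, b"STOP"),
        (17_000, VISION, b"frame-2"),
    ])
    for timestamp_us, source, payload in read_log(log):
        kind = "vision" if source == VISION else "referee"
        print(timestamp_us, kind, payload)
```

A player could sleep for the difference between consecutive timestamps to replay the log at real-time speed; the length prefix is what lets the reader skip from one record to the next without parsing the payloads.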