Web Page Analyzer: Free Website Performance Tool and Web Page Speed Analysis. Try our free website speed test to improve website performance. Enter a URL to calculate page size, composition, and download time. The script calculates the size of individual elements and sums up each type of web page component. Tired of waiting for slow websites? Consider optimizing your site with our Website Optimization Secrets book, our optimization services, or our Speed Tweak tutorials.
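The analysis described above comes down to summing bytes per component type and dividing the total by line speed. Here is a minimal Python sketch of that arithmetic. It assumes a hypothetical list of components whose sizes are already known; the analyzer itself fetches each object, while the sizes here are made up. It also ignores per-request latency, which a real test would have to account for.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Component:
    """One page object: its URL, component type, and size in bytes."""
    url: str
    kind: str  # e.g. "html", "css", "script", "image"
    size_bytes: int


def summarize(components):
    """Return (bytes per component type, total bytes for the page)."""
    per_type = defaultdict(int)
    for c in components:
        per_type[c.kind] += c.size_bytes
    return dict(per_type), sum(per_type.values())


def download_seconds(total_bytes, bits_per_second):
    """Raw transfer time at a given line speed; ignores latency and request overhead."""
    return total_bytes * 8 / bits_per_second


if __name__ == "__main__":
    # Hypothetical page composition, for illustration only.
    page = [
        Component("index.html", "html", 20_000),
        Component("style.css", "css", 15_000),
        Component("app.js", "script", 60_000),
        Component("logo.png", "image", 25_000),
        Component("hero.jpg", "image", 80_000),
    ]
    per_type, total = summarize(page)
    print(per_type)
    print(f"total: {total} bytes")
    for bps in (56_000, 1_500_000):
        print(f"{bps} bit/s: {download_seconds(total, bps):.2f} s")
```

With this made-up 200,000-byte page, a 56 kbit/s modem needs about 28.6 s of raw transfer time. A 1.5 Mbit/s line needs about 1.07 s.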

Dominique's Weblog: About gathering Web 2.0 personal data into one safer place. Every day, Web 2.0 applications keep our personal data on proprietary servers. While hosting providers can offer dedicated machines, I once imagined that, in a perfect future, I could rent a server to host my own personal data and authorize Web 2.0 applications to operate on that data. While recently reading a French paper on the semantic web (Le web sémantique ou l'importance des données liées, "The Semantic Web, or the Importance of Linked Data"), I found an interesting idea [from David Larlet] about such dedicated data servers: "Personal data currently represent the value that an application gets in exchange for offering its service, often a 'free' one. In most cases, you can't get this value back unless you are a geek, and it would be lost in case of a policy change, bankruptcy, database crash, etc." As such a box gathers more and more services over time, in the medium term it will have its own IP(v6?) address. This may change the web application landscape. Here are a few ideas.

"Why is your desktop app not a web app?" While it may seem that everyone and everything is running on the web and on mobile phones, what's the place for desktop applications? Is there one? How big is it? My hypothesis has been that the place of the desktop is simply becoming more clearly defined, rather than becoming less important. I then asked a number of technical leads of large desktop projects why they weren't creating web applications instead of desktop applications. Their answers can be read below. Jean-Claude Dauphin, UNESCO, creating J-ISIS, a bibliographic storage/retrieval system: "It's mainly related to UNESCO's initiative to help reinforce the capacities of developing countries." Tonny Kohar, Kiyut, creating graphic applications, such as vector drawing programs and photo filters: "Some applications require a heavily interactive UI, which, at the current stage, web applications are not cut out for." Dale Thoma, Saab Systems Grintek, creating applications for the South African National Defence Force: "Rich functionality."

The WebM Video Format – the Saviour of Open Video on the Web? I don’t think I’m exaggerating when I say that something really, really important has happened for the future of the Open Web. Finally, it looks like there might be a solution to the problem of video codecs and the patent-encumbered alternatives we have been dealing with. Background: About two months ago I wrote What Will Happen To Open Video On The Web? and expressed fear for the future of video on the web, and that the H.264 codec would never be something solid to build on, because it is heavily patented. Introducing the WebM project: Today at Google I/O 2010, Google announced the WebM project, which is, simply put, "…dedicated to developing a high-quality, open video format for the web that is freely available to everyone." In more detail, it is a video format consisting of VP8, a video codec released today under a BSD-style, royalty-free license (owned by Google since its acquisition of On2 Technologies); Vorbis, an open-source audio codec; and a container format based on Matroska. What this means. Who supports this?

How do you convince the average web user to switch to a non-IE browser? As web designers and developers, we love to see how our sites and web apps look and function in a really good browser. It’s true that with the release of IE9, Microsoft has made great progress in the so-called browser wars. And although IE9 is a fast and reliable browser with pretty good support for CSS3 and HTML5, there are still quite a few missing technologies that we would all like to see in Internet Explorer soon. But the reality is that while we as developers know that the user experience is greatly improved when a site is viewed in Chrome, Opera, Safari, or even Firefox, our users are not aware of this. Personally, I always do what I can to promote the good browsers, and I had the chance to do so recently when I went to my friend Alex’s home for dinner. One of the first things I did was ask Alex some questions about his feelings towards browsing the web and the software he uses. “Why do you use Internet Explorer?” … “No, not really.” … “No.” Oh, dear.

The Rise Of The API, The Future Of The Web. Last month, Twitter and Facebook made some moves to hide RSS feeds and put more focus on their APIs. The typical ranting followed the news, some in favor of RSS and some not. Now that the conversation and controversy over RSS being killed (again) has died down, I wanted to address the real question behind Twitter’s and Facebook’s decisions. The real issue at hand is the evolution of the web. If you focus on a single web application, you would never find a reason to really move away from JavaScript and JSON. 73% of the APIs on Programmable Web use REST. Granted, this is a biased sample because Programmable Web is focused on public web application APIs. Some of the data format questions can be answered by other means as well. One important reason for the rise of APIs is that REST’s simplicity has allowed almost anyone to define an API. API usage is heavily determined by how easy it is to start programming against the API.
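The following minimal Python sketch shows why REST plus JSON lowers the barrier to entry. It builds a client using only the standard library. The endpoint `https://api.example.com/v1/statuses` and the `text` field are hypothetical placeholders, not Twitter's or Facebook's actual APIs. The demo parses a canned response instead of making a network call.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint, for illustration only; not a real service's API.
BASE_URL = "https://api.example.com/v1/statuses"


def build_request(user: str) -> urllib.request.Request:
    """Build a plain HTTP GET for a REST resource, asking for JSON."""
    query = urllib.parse.urlencode({"user": user})
    return urllib.request.Request(
        f"{BASE_URL}?{query}",
        headers={"Accept": "application/json"},
        method="GET",
    )


def parse_statuses(body: bytes) -> list:
    """Decode a JSON array of objects and pull out each hypothetical 'text' field."""
    return [item["text"] for item in json.loads(body)]


def fetch_statuses(user: str) -> list:
    """One round trip: GET the resource and parse the JSON body."""
    with urllib.request.urlopen(build_request(user), timeout=10) as resp:
        return parse_statuses(resp.read())


if __name__ == "__main__":
    # Offline demo with a canned response body instead of a live call.
    sample = b'[{"id": 1, "text": "hello"}, {"id": 2, "text": "world"}]'
    print(parse_statuses(sample))
    print(build_request("alice").full_url)
```

The whole client amounts to a URL, an `Accept` header, and `json.loads`. That low setup cost is the point about API adoption made above.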

TagSoup home page. Introduction: This is the home page of TagSoup, a SAX-compliant parser written in Java that, instead of parsing well-formed or valid XML, parses HTML as it is found in the wild: poor, nasty and brutish, though quite often far from short. TagSoup is designed for people who have to process this stuff using some semblance of a rational application design. By providing a SAX interface, it allows standard XML tools to be applied to even the worst HTML. This is also the README file packaged with TagSoup. TagSoup is free and Open Source software. The TagSoup logo is courtesy of Ian Leslie. TagSoup 1.2.1 released: TagSoup 1.2.1 is a much-delayed bug fix release. Download the TagSoup 1.2.1 jar file. Taggle, a TagSoup in C++, available now: A company called JezUK has released Taggle, which is a straight port of TagSoup 1.2 to C++. The author says the code is alpha-quality now, so he'd appreciate lots of testers to shake out bugs. The code is currently in public Subversion: you can fetch it with
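TagSoup itself is written in Java. Its SAX-style idea, delivering callbacks for start tags, end tags, and text even on malformed markup, can be sketched with Python's standard `html.parser.HTMLParser`. This is a swapped-in analogue, not TagSoup's API. The `EventLogger` class and the `parse_events` helper are hypothetical names.

```python
from html.parser import HTMLParser


class EventLogger(HTMLParser):
    """Record SAX-style callbacks (start tag, end tag, text) as a flat list of events."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.events = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs; tag names are lowercased.
        self.events.append(("start", tag, dict(attrs)))

    def handle_endtag(self, tag):
        self.events.append(("end", tag))

    def handle_data(self, data):
        # Skip whitespace-only text between tags.
        if data.strip():
            self.events.append(("text", data.strip()))


def parse_events(markup):
    """Feed possibly malformed HTML through the parser and return the event list."""
    parser = EventLogger()
    parser.feed(markup)
    parser.close()
    return parser.events


if __name__ == "__main__":
    # Unquoted attribute, unclosed <p>, unclosed <b>: typical "HTML in the wild".
    for event in parse_events("<p class=intro>One<p>Two <b>bold</p>"):
        print(event)
```

Note the difference in design. `HTMLParser` tolerates the unquoted attribute and the unclosed tags, but it simply reports what it sees. No `end` event is emitted for `<b>` or for the first `<p>`. TagSoup goes further and rebalances the tags, so that downstream XML tools receive a well-formed event stream.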

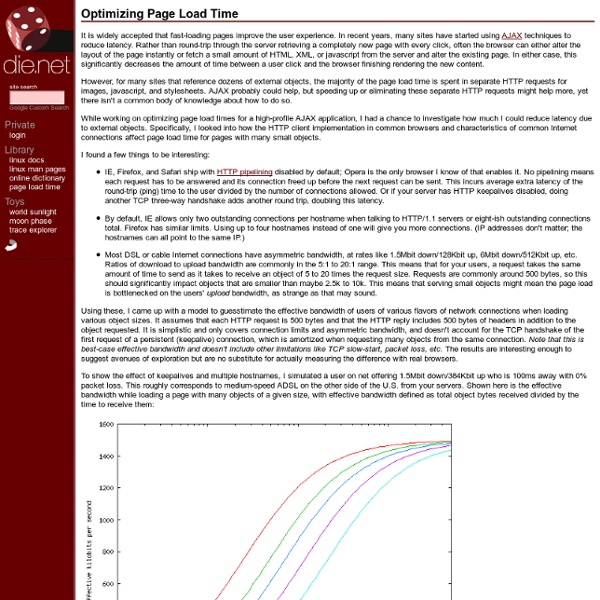

You only control 1/3 of your Page Load Performance! You do not agree with that? Have you ever looked at the details of your page load time and analyzed what really impacts it? Let me show you a real-life example and explain why, in most cases, you only control 1/3 of the time required to load a page, as the rest is consumed by third-party content that is not under your control. Be Aware of Third Party Content: When analyzing web page load times we can use tools such as dynaTrace, Firebug or PageSpeed. The two screenshots below show these two pages as rendered by the browser. Screenshot of the page without and with highlighted Third Party Content: The left screenshot shows the page with content delivered by your application. The super-fast page that finishes downloading all necessary resources after a little over two seconds is slowed down by eight seconds once the third-party content is added. 4 Typical Problems with 3rd Party Content: Let me explain 4 typical problems that come with adding 3rd party content and why they impact page load time.
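One rough way to quantify the split is to group resource timings by host. You can then compare when your own resources finish with when everything finishes. Below is a minimal Python sketch that uses the finish-time ratio as a simplified metric. The timing data is hypothetical and loosely shaped like the example above: first-party content is done a little after 2 s, and third-party content adds about 8 s. Tools such as dynaTrace or Firebug report far richer waterfalls than this.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class Timing:
    """When one resource started and finished, in seconds since navigation start."""
    url: str
    start_s: float
    end_s: float


def is_first_party(url, first_party_domains):
    """True if the URL's host is one of our domains or a subdomain of one."""
    host = urlparse(url).hostname or ""
    return any(host == d or host.endswith("." + d) for d in first_party_domains)


def load_split(timings, first_party_domains):
    """Return (first-party finish time, overall finish time, first-party share of the total)."""
    own_ends = [t.end_s for t in timings if is_first_party(t.url, first_party_domains)]
    first_party_done = max(own_ends, default=0.0)
    all_done = max((t.end_s for t in timings), default=0.0)
    share = first_party_done / all_done if all_done else 0.0
    return first_party_done, all_done, share


if __name__ == "__main__":
    # Hypothetical timings; .test hosts stand in for third-party providers.
    timings = [
        Timing("https://www.example.com/", 0.0, 0.4),
        Timing("https://static.example.com/app.js", 0.4, 1.2),
        Timing("https://www.example.com/hero.jpg", 0.5, 2.1),
        Timing("https://ads.adnetwork.test/ad.js", 1.0, 6.5),
        Timing("https://widgets.social.test/button.js", 2.0, 10.1),
    ]
    own, total, share = load_split(timings, ["example.com"])
    print(f"first party done at {own:.1f} s, page done at {total:.1f} s, share {share:.0%}")
```

With these made-up timings, the first-party share is about 21% (2.1 s of 10.1 s).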

Kill the Telcos, Save the Internet - The Unsocial Network. Someone is killing the Internet. Since you probably use the Internet every day, you might find this surprising. It almost sounds silly, and the reason is technical, but our crack team of networking experts has examined the patient and made the diagnosis. What did they find? Diagnostic team: the Packet Pushers gang (Greg Ferro, Jan Zorz, Ivan Pepelnjak) in the podcast How We Are Killing the Internet. Diagnosis: invasive tunnelation. This is a classic story in a strange setting (the network), but the themes are universal: centralization vs. decentralization (that's where the telcos obviously come in), good vs. evil, order vs. disorder, tyranny vs. freedom, change vs. stasis, simplicity vs. complexity. Our emergency medics for this battle, in this free-flowing and wide-ranging podcast, pinpoint the problem: through IPv6 and telco domination we are losing the original beauty and simplicity of the Internet. What are Tunnels? First, what are these tunnel things? Why do Tunnels Suck?

Making the web speedier and safer with SPDY. In the two years since we announced SPDY, we’ve been working with the web community on evolving the spec and getting SPDY deployed on the Web. Chrome, Android Honeycomb devices, and Google's servers have been speaking SPDY for some time, bringing important benefits to users. For example, thanks to SPDY, a significant percentage of Chrome users saw a decrease in search latency when we launched SSL-search. We’ve also seen widespread community uptake and participation. Given SPDY's rapid adoption rate, we’re working hard on acceptance tests to help validate new implementations. With the help of Mozilla and other contributors, we’re pushing hard to finalize and implement SPDY draft-3 in early 2012, as standardization discussions for SPDY will start at the next meeting of the IETF. We look forward to working even more closely with the community to improve SPDY and make the Web faster! To learn more about SPDY, see the Tech Talk and its accompanying slides.
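One of SPDY's core mechanisms is multiplexing many request streams over a single connection, with each frame tagged by its type or stream. As an illustration, here is a minimal Python sketch that decodes the fixed 8-byte frame header, based on our reading of the SPDY draft-3 specification. A control frame carries a control bit, a 15-bit version, a 16-bit type, 8-bit flags, and a 24-bit length. A data frame replaces the version and type with a 31-bit stream ID. Treat this layout as our reading of the draft, not a validated implementation.

```python
import struct
from dataclasses import dataclass
from typing import Optional

CONTROL_BIT = 0x80000000


@dataclass
class FrameHeader:
    is_control: bool
    version: Optional[int]     # control frames only
    frame_type: Optional[int]  # control frames only (1 = SYN_STREAM in draft-3)
    stream_id: Optional[int]   # data frames only
    flags: int
    length: int                # payload bytes after the 8-byte header


def parse_frame_header(buf: bytes) -> FrameHeader:
    """Decode the fixed 8-byte header that starts every SPDY frame."""
    if len(buf) < 8:
        raise ValueError("need at least 8 bytes for a SPDY frame header")
    first, second = struct.unpack("!II", buf[:8])  # two big-endian 32-bit words
    flags = second >> 24                            # top 8 bits
    length = second & 0x00FFFFFF                    # low 24 bits
    if first & CONTROL_BIT:
        version = (first >> 16) & 0x7FFF
        frame_type = first & 0xFFFF
        return FrameHeader(True, version, frame_type, None, flags, length)
    return FrameHeader(False, None, None, first & 0x7FFFFFFF, flags, length)


if __name__ == "__main__":
    # Hypothetical byte stream: a control frame header, then data frames for
    # two different streams interleaved on the same connection.
    frames = [
        struct.pack("!II", CONTROL_BIT | (3 << 16) | 1, (0x01 << 24) | 10),
        struct.pack("!II", 1, 512),
        struct.pack("!II", 3, 256),
    ]
    for raw in frames:
        print(parse_frame_header(raw))
```

The stream ID in each data frame is what lets a receiver reassemble interleaved responses. That is how a single connection can carry several requests at once.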

The New MaxCDN – Built for Design & Speed | The NetDNA Blog. MaxCDN has gone through numerous revisions over the years, but we wanted to do something different... really different. After spending months on end mocking up designs, struggling to get content together and peer-checked, and dabbling with code to make things just right, the new MaxCDN.com is finally here, and man, is it looking better than ever. // Just Getting Started: Design. Like any design process, this took countless revisions to get just right. Originally, we wanted the site to look somewhat like the original Twitter Bootstrap website; the large buttons and large text headlines seemed to be the latest trend in web design. // Built for SPEED. Since the new MaxCDN design is a minimal, streamlined web design, we wanted to reflect that in the code. The new MaxCDN website loads in less than a second, and the page size does not even reach 300KB. See the stack of six blue lines near the middle? // How We Achieved a .50s Page Load /* The Code */ Implement all the latest technologies

How Facebook Makes Mobile Work at Scale for All Phones, on All Screens, on All Networks. When you find that a mobile application that ran fine in the US is slow in other countries, how do you fix it? That’s a problem Facebook talks about in a couple of enlightening videos from the @scale conference. Since mobile is eating the world, this is the sort of thing you need to consider with your own apps. In the US we may complain about our mobile networks, but that’s more #firstworldproblems talk than reality. Mobile networks in other countries can be much slower and cost a lot more. This is the conclusion of Chris Marra, Project Manager at Facebook, in a really interesting talk titled Developing Android Apps for Emerging Market. Facebook found that in the US there is 70.6% 3G penetration with 280ms average latency. Chris also talked about Facebook’s comprehensive research on who uses Facebook and what kind of phones they use. It turns out the typical phone used by Facebook users is from circa 2011, dual core, with less than 1GB of RAM. Facebook has moved to a product organization.
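To see why 280 ms of average latency matters, count round trips. Below is a back-of-the-envelope Python sketch. It assumes textbook round-trip counts for a cold HTTPS fetch: 1 for DNS, 1 for the TCP handshake, 2 for a full TLS 1.2 handshake, and 1 for the HTTP request itself. It ignores bandwidth, server time, and DNS caching. These counts are our assumptions, not figures from Facebook's talk.

```python
# Assumed round trips for one cold HTTPS fetch (textbook figures, not measurements):
# DNS lookup, TCP handshake, full TLS 1.2 handshake (2 round trips), HTTP request/response.
COLD_FETCH_ROUND_TRIPS = {"dns": 1, "tcp": 1, "tls": 2, "http": 1}


def cold_fetch_ms(rtt_ms):
    """Latency-only cost of a first request on a fresh connection."""
    return rtt_ms * sum(COLD_FETCH_ROUND_TRIPS.values())


def sequential_fetches_ms(rtt_ms, n_requests):
    """One cold fetch, then follow-up requests on the warm connection at one round trip each."""
    if n_requests < 1:
        return 0
    return cold_fetch_ms(rtt_ms) + (n_requests - 1) * rtt_ms


if __name__ == "__main__":
    rtt = 280  # ms: the US average latency quoted in the talk
    print(f"cold fetch: {cold_fetch_ms(rtt)} ms")
    print(f"4 sequential requests: {sequential_fetches_ms(rtt, 4)} ms")
```

At 280 ms per round trip, the cold fetch alone costs 1,400 ms before the first byte arrives. Four sequential requests cost 2,240 ms. On a network with higher latency, these numbers scale linearly with the round-trip time.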