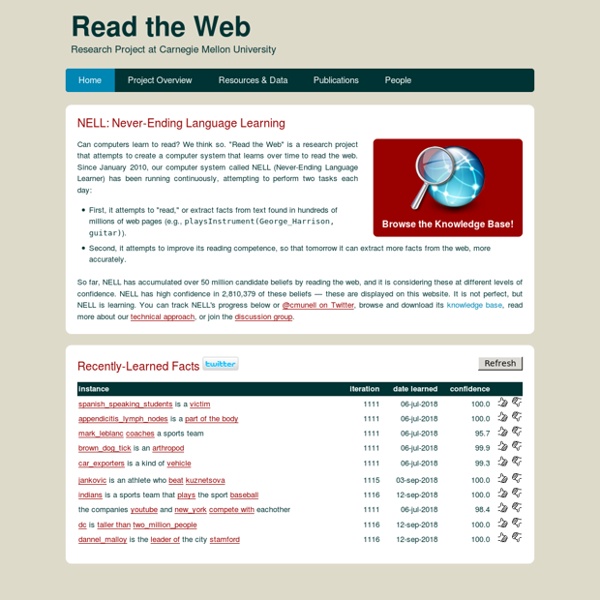

Conceptual dependency theory Conceptual dependency theory is a model of natural language understanding used in artificial intelligence systems. Roger Schank at Stanford University introduced the model in 1969, in the early days of artificial intelligence.[1] This model was extensively used by Schank's students at Yale University such as Robert Wilensky, Wendy Lehnert, and Janet Kolodner. Schank developed the model to represent knowledge for natural language input into computers. The model uses the following basic representational tokens:[3] real-world objects, each with some attributes; real-world actions, each with attributes; times; and locations. A set of conceptual transitions then acts on this representation, e.g. an ATRANS is used to represent a transfer such as "give" or "take" while a PTRANS is used to act on locations such as "move" or "go". A sentence such as "John gave a book to Mary" is then represented as the action of an ATRANS on two real-world objects, John and Mary.
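The "John gave a book to Mary" example can be made concrete with a small data structure. The following is a minimal Python sketch, not Schank's notation: the class names `Entity` and `Transfer`, the field names (`actor`, `obj`, `source`, `recipient`), and the helper `give` are all assumptions chosen for illustration. Only the ATRANS primitive and the participants come from the text above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    """A real-world object with optional (name, value) attribute pairs."""
    name: str
    attributes: tuple = ()


@dataclass(frozen=True)
class Transfer:
    """A transfer act; the field names are illustrative assumptions."""
    primitive: str      # e.g. "ATRANS" or "PTRANS"
    actor: Entity       # who performs the act
    obj: Entity         # what is transferred
    source: Entity      # who holds the object before the act
    recipient: Entity   # who holds the object after the act


def give(giver: Entity, thing: Entity, receiver: Entity) -> Transfer:
    """Represent 'giver gave thing to receiver' as an ATRANS."""
    return Transfer("ATRANS", actor=giver, obj=thing,
                    source=giver, recipient=receiver)


john = Entity("John")
mary = Entity("Mary")
book = Entity("book", attributes=(("kind", "physical object"),))

event = give(john, book, mary)
print(event.primitive, event.source.name, "->", event.recipient.name)
```

In this sketch "take" would reuse the same primitive with the actor equal to the recipient instead of the source, which is one way to read the statement that ATRANS covers both verbs.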

Case-Based Reasoning Case-based reasoning is one of the fastest growing areas in the field of knowledge-based systems and this book, authored by a leader in the field, is the first comprehensive text on the subject. Case-based reasoning systems are systems that store information about situations in their memory. As new problems arise, similar situations are searched out to help solve these problems. Problems are understood and inferences are made by finding the closest cases in memory, comparing and contrasting the problem with those cases, making inferences based on those comparisons, and asking questions when inferences can't be made. This book presents the state of the art in case-based reasoning. This book is an excellent text for courses and tutorials on case-based reasoning.

Direct Memory Access Parsing (DMAP) A Direct Memory Access Parser reads text and identifies the concepts in memory that text refers to. It does this by matching phrasal patterns attached to those concepts (mops). Attaching Phrases to Concepts: For example, suppose we wanted to read texts about economic arguments, as given by people such as Milton Friedman and Lester Thurow. The first thing we have to do is define concepts for those arguments, those economists, and for the event of economists presenting arguments. Next we have to attach to these concepts phrases that are used to refer to them. More complex concepts, such as a change in an economic variable, or a communication about an event, require phrasal patterns. For example, the concept m-change-event has the role :variable which can be filled by any m-variable, such as m-interest-rates. The Concept Recognition Algorithm: From the Friedman example, we can see that we want the following kinds of events to occur: Getting Output from DMAP: with-monitors is a macro.
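The idea of attaching phrasal patterns to concepts and filling roles such as :variable can be sketched in a few lines. This is a simplified Python illustration, not the DMAP code the page describes (which uses Lisp macros such as with-monitors). The concept names m-change-event, m-variable and m-interest-rates come from the text; the pattern contents, the `ISA`, `ROLES` and `PHRASES` tables, and the function `recognize` are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical memory: an isa hierarchy, role constraints, and phrasal patterns.
ISA = {"m-interest-rates": "m-variable"}
ROLES = {"m-change-event": {":variable": "m-variable"}}
PHRASES = [
    ("m-interest-rates", ("interest", "rates")),
    ("m-change-event", (":variable", "will", "rise")),  # assumed pattern
]


def isa(concept, ancestor):
    """True if `concept` is `ancestor` or one of its specializations."""
    while concept is not None:
        if concept == ancestor:
            return True
        concept = ISA.get(concept)
    return False


def fits(expected, kind, value, owner):
    """A role element needs a concept of the right type; a word needs an exact match."""
    if expected.startswith(":"):
        return kind == "concept" and isa(value, ROLES[owner][expected])
    return kind == "word" and value == expected


@dataclass
class Partial:
    concept: str
    pattern: tuple
    index: int      # next pattern element to match
    start: int      # token position where this match began
    next_pos: int   # token position the next element must start at
    bindings: dict


def recognize(text):
    """Return (concept, role bindings) pairs in the order they are recognized."""
    waiting, found = [], []
    for pos, word in enumerate(text.lower().split()):
        queue = [("word", word, pos, pos + 1)]
        while queue:
            kind, value, start, end = queue.pop(0)
            # Partial matches expecting input here, plus fresh attempts.
            candidates = [p for p in waiting if p.next_pos == start]
            candidates += [Partial(c, pat, 0, start, start, {}) for c, pat in PHRASES]
            for p in candidates:
                expected = p.pattern[p.index]
                if not fits(expected, kind, value, p.concept):
                    continue
                bindings = dict(p.bindings)
                if expected.startswith(":"):
                    bindings[expected] = value
                if p.index + 1 == len(p.pattern):
                    # Pattern complete: the concept is itself new input.
                    found.append((p.concept, bindings))
                    queue.append(("concept", p.concept, p.start, end))
                else:
                    waiting.append(Partial(p.concept, p.pattern, p.index + 1,
                                           p.start, end, bindings))
    return found


print(recognize("Interest rates will rise"))
```

The key design point this sketch tries to show is that a recognized concept is fed back in as input, so the phrase for m-interest-rates can fill the :variable role of a larger pattern.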

Universal Networking Language Universal Networking Language (UNL) is a declarative formal language specifically designed to represent semantic data extracted from natural language texts. It can be used as a pivot language in interlingual machine translation systems or as a knowledge representation language in information retrieval applications. Scope and goals: UNL is designed to establish a simple foundation for representing the most central aspects of information and meaning in a machine- and human-language-independent form. As a language-independent formalism, UNL aims to code, store, disseminate and retrieve information independently of the original language in which it was expressed. At first glance, UNL seems to be a kind of interlingua, into which source texts are converted before being translated into target languages. Nevertheless, it is important to note that at present it would be foolish to claim to represent the “full” meaning of any word, sentence, or text for any language.

The Process of Question Answering.

Universal Networking Language (UNL) Universal Networking Language (UNL) is an interlingua developed by the UNDL Foundation. UNL takes the form of a semantic network used to represent and exchange information. Concepts and relations enable encapsulation of the meaning of sentences. UNL consists of Universal Words (UWs), Relations, Attributes, and a Knowledge Base. Universal Words (UWs): Universal Words are UNL words that carry knowledge or concepts. Examples: bucket(icl>container), water(icl>liquid). Relations: Relations are labelled arcs that connect nodes (UWs) in the UNL graph. Example: agt ( break(agt>thing,obj>thing), John(iof>person) ). Attributes: Attributes are annotations used to represent grammatical categories, mood, aspect, etc. Example: work(agt>human). Knowledge Base: The UNL Knowledge Base contains entries that define possible binary relations between UWs.
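To show how the written examples map onto a graph of nodes and labelled arcs, here is a minimal Python sketch that splits a relation expression into its label and two Universal Words. The helper names `parse_relation` and `parse_uw` and the `Relation` tuple are assumptions made for this illustration, not part of any UNL tool, and the sketch only handles flat constraint lists like the ones quoted above.

```python
from typing import NamedTuple


class Relation(NamedTuple):
    label: str    # relation name, e.g. "agt"
    source: str   # first Universal Word
    target: str   # second Universal Word


def parse_relation(text: str) -> Relation:
    """Split 'label(uw1, uw2)' on the comma that is not inside a UW's parentheses."""
    text = text.strip()
    open_paren = text.index("(")
    if not text.endswith(")"):
        raise ValueError("relation must end with ')'")
    label = text[:open_paren].strip()
    body = text[open_paren + 1:-1]
    depth = 0
    for i, ch in enumerate(body):
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        elif ch == "," and depth == 0:
            return Relation(label, body[:i].strip(), body[i + 1:].strip())
    raise ValueError("expected two Universal Words")


def parse_uw(text: str):
    """Split a UW into its headword and a flat list of constraints."""
    head, _, rest = text.strip().partition("(")
    constraints = rest.rstrip(")").split(",") if rest else []
    return head, [c.strip() for c in constraints]


rel = parse_relation("agt ( break(agt>thing,obj>thing), John(iof>person) )")
print(rel.label, parse_uw(rel.source), parse_uw(rel.target))
```

Tracking parenthesis depth matters because the commas inside break(agt>thing,obj>thing) must not be mistaken for the separator between the two arguments of the relation.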

Great Books of the Western World Great Books of the Western World is a series of books originally published in the United States in 1952, by Encyclopædia Britannica, Inc., to present the Great Books in a 54-volume set. The original editors had three criteria for including a book in the series: the book must be relevant to contemporary matters, and not only important in its historical context; it must be rewarding to re-read; and it must be a part of "the great conversation about the great ideas", relevant to at least 25 of the 102 great ideas identified by the editors. The books were not chosen on the basis of ethnic and cultural inclusiveness, historical influence, or the editors' agreement with the views expressed by the authors.[1] A second edition was published in 1990 in 60 volumes. History: After deciding what subjects and authors to include, and how to present the materials, the project was begun, with a budget of $2,000,000. Volumes: Volume 1, The Great Conversation.

In-Depth Understanding This book describes a theory of memory representation, organization, and processing for understanding complex narrative texts. The theory is implemented as a computer program called BORIS which reads and answers questions about divorce, legal disputes, personal favors, and the like. The system is unique in attempting to understand stories involving emotions and in being able to deduce adages and morals, in addition to answering fact- and event-based questions about the narratives it has read. BORIS also manages the interaction of many different knowledge sources such as goals, plans, scripts, physical objects, settings, interpersonal relationships, social roles, emotional reactions, and empathetic responses. The book makes several original technical contributions as well. In-Depth Understanding is included in The MIT Press Artificial Intelligence Series.

A Syntopicon: An Index to The Great Ideas A Syntopicon: An Index to The Great Ideas (1952) is a two-volume index, published as volumes 2 and 3 of Encyclopædia Britannica’s collection Great Books of the Western World. Compiled by Mortimer Adler, an American philosopher, under the guidance of Robert Hutchins, president of the University of Chicago, the volumes were billed as a collection of the 102 great ideas of the western canon. The term “syntopicon” was coined specifically for this undertaking, meaning “a collection of topics.”[1] The volumes catalogued what Adler and his team deemed to be the fundamental ideas contained in the works of the Great Books of the Western World, which stretched chronologically from Homer to Freud. The Syntopicon lists, under each idea, where every occurrence of the concept can be located in the collection’s famous works. History: The Syntopicon was created to set the Great Books collection apart from previously published sets (such as Harvard Classics).

Great Conversation The Great Conversation is the ongoing process of writers and thinkers referencing, building on, and refining the work of their predecessors. This process is characterized by writers in the Western canon making comparisons and allusions to the works of earlier writers and thinkers. As such it is a name used in the promotion of the Great Books of the Western World published by Encyclopædia Britannica Inc. in 1952. According to Hutchins, "The tradition of the West is embodied in the Great Conversation that began in the dawn of history and that continues to the present day".[3] Adler said, "What binds the authors together in an intellectual community is the great conversation in which they are engaged."

The Great Conversation by Robert Hutchins Great Books of the Western World (60 vols.) Imagine: the entirety of the Western canon at your fingertips. The makers of Encyclopaedia Britannica bring you the Great Books of the Western World. Comprising 60 volumes containing 517 works written by 130 authors, these texts capture the major ideas, stories, and discoveries that shaped Western culture. The foundational library for a liberal arts education, the Great Books include works of literature, classics, mathematics and natural sciences, history and social sciences, philosophy and religion. From Homer to Hemingway, Aquinas to Nietzsche, and Galileo to Einstein, the classic conversations spanning history now continue in your digital library. Gain insight into these conversations with the Syntopicon. With Noet your library is connected. Mortimer Jerome Adler (December 28, 1902–June 28, 2001) was a philosopher, professor, and editor.

Internet Relay Chat Internet Relay Chat (IRC) is an application layer protocol that facilitates communication in the form of text. The chat process works on a client/server networking model. IRC clients are computer programs that users can install on their system, or web-based applications running either locally in the browser or on a third-party server. These clients communicate with chat servers to transfer messages to other clients.[1] IRC is mainly designed for group communication in discussion forums, called channels,[2] but also allows one-on-one communication via private messages[3] as well as chat and data transfer,[4] including file sharing.[5] History: IRC was created by Jarkko Oikarinen in August 1988 to replace a program called MUT (MultiUser Talk) on a BBS called OuluBox at the University of Oulu in Finland, where he was working at the Department of Information Processing Science.
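The difference between channel messages and private messages is easiest to see in the text lines a client sends to the server. The following Python sketch formats and parses such lines using the message layout from RFC 1459 (optional prefix, command, parameters, and a trailing parameter introduced by a colon). The function names `format_privmsg` and `parse_message`, the channel #chat, and the nicknames are hypothetical, and the sketch does no networking.

```python
def format_privmsg(target: str, text: str) -> str:
    """Build the line a client sends to deliver `text` to a channel or a nickname."""
    if "\r" in text or "\n" in text:
        raise ValueError("message text must be a single line")
    return f"PRIVMSG {target} :{text}\r\n"


def parse_message(line: str):
    """Split one protocol line into (prefix, command, params)."""
    line = line.rstrip("\r\n")
    prefix = None
    if line.startswith(":"):
        # The prefix names the origin of a message relayed by a server.
        prefix, _, line = line[1:].partition(" ")
    head, sep, trailing = line.partition(" :")
    parts = head.split()
    params = parts[1:]
    if sep:
        # The trailing parameter may contain spaces.
        params.append(trailing)
    return prefix, parts[0], params


# A message to a channel (group communication) and one to a single user.
to_channel = format_privmsg("#chat", "hello there")
to_user = format_privmsg("bob", "hi")

print(parse_message(":alice!a@host PRIVMSG #chat :hello there"))
print(parse_message(to_user))
```

The same PRIVMSG command serves both cases; only the target differs, a channel name for group discussion or a nickname for a one-on-one message.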

Noet.com