elasticsearch/logstash-forwarder

Trifacta

Start page – collectd – The system statistics collection daemon

Home · facebook/scribe Wiki

10 Hot Hadoop Startups to Watch
CIO - It's no secret that data volumes are growing exponentially. What's a bit more mysterious is figuring out how to unlock the value of all of that data. A big part of the problem is that traditional databases weren't designed for big data-scale volumes, nor were they designed to incorporate different types of data (structured and unstructured) from different apps. Lately, Apache Hadoop, an open-source framework that enables the processing of large data sets in a distributed environment, has become almost synonymous with big data. According to Gartner estimates, the current Hadoop ecosystem market is worth roughly $77 million. Here are 10 startups hoping to grab a piece of that nearly $1 billion pie. (Please note that this lineup favors newer startups. 1. Headquarters: San Mateo, Calif. CEO: Ben Werther, who formerly served as vice president of products at DataStax.

etsy/statsd

Open sourcing Databus: LinkedIn's low latency change data capture system
Co-authors: Sunil Nagaraj, Shirshanka Das, Kapil Surlaker. We are pleased to announce the open source release of Databus, a real-time change data capture system. Originally developed in 2005, Databus has been in production in its latest revision at LinkedIn since 2011. The Databus source code is available in our GitHub repo for you to get started! What is Databus? LinkedIn has a diverse ecosystem of specialized data storage and serving systems. This leads to a need for reliable, transactionally consistent change capture from primary data sources to derived data systems throughout the ecosystem. As shown above, systems such as Search Index and Read Replicas act as Databus consumers using the client library. How does Databus work? Databus offers the following important features: Source-independent: Databus supports change data capture from multiple sources including Oracle and MySQL. As depicted, the Databus system comprises relays, a bootstrap service, and the client library. Try it out

Hadoop

Riemann - A network monitoring system

Skytree

driskell/log-courier

spotify/ffwd

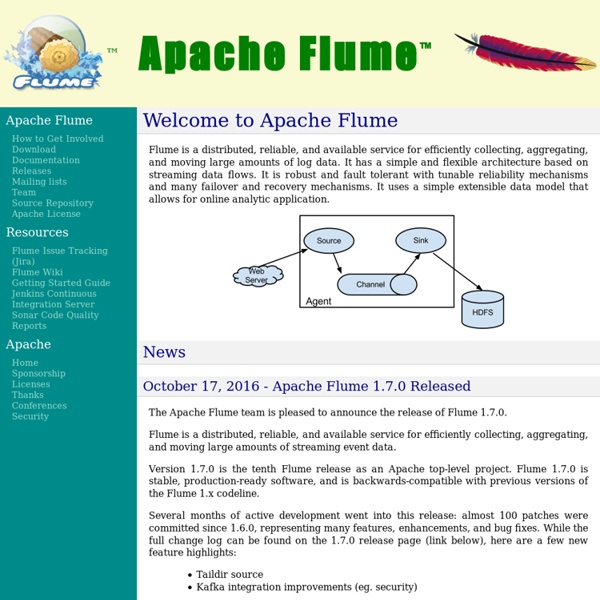

A distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data. by sergeykucherov Jul 15