Tested: Earning money as a website and app tester | N-JOY - Leben
As of: 01.11.2016, 18:20

Surfing around websites and apps and earning money: does that work? We tried it, and it put our patience to a hard test.

Before a new website or app is released to the general public, it goes through a lot of testing: How clear is the layout of the site?

How does it work?
When an assignment comes in, you are given a website or app and have to complete a task or answer a question, for example: "What is the name of the head of the company behind this website?" In your answer you have to describe in great detail how you moved around the site to reach the result ("I scroll down the page and move the mouse over menu item XY, a drop-down menu opens and I click on sub-item XY..."). Providers of these tests include Testbirds, Applause and Testcloud.

How much do you earn?

Who is this side job interesting for?

Tip: Be patient!

Conclusion

mechanize
Stateful programmatic web browsing in Python, after Andy Lester's Perl module WWW::Mechanize.

The examples below are written for a website that does not exist (example.com), so they cannot be run. There are also some working examples that you can run.

```python
import re
import mechanize

br = mechanize.Browser()
br.open("http://www.example.com/")  # placeholder site, see the note above
# follow second link with element text matching regular expression
response1 = br.follow_link(text_regex=r"cheese\s*shop", nr=1)
assert br.viewing_html()
print(br.title())
print(response1.geturl())
print(response1.info())  # headers
print(response1.read())  # body

br.select_form(name="order")
# Browser passes through unknown attributes (including methods)
# to the selected HTMLForm.
br["cheeses"] = ["mozzarella", "caerphilly"]  # (the method here is __setitem__)
# Submit current form.
response2 = br.submit()

# print currently selected form (don't call .submit() on this, use br.submit())
print(br.form)
```

mechanize exports the complete interface of urllib2.

Bürgerwerke | The cooperatives
The Bürgerwerke are an association of currently 60 energy cooperatives from all over Germany. Together they represent more than 10,000 committed energy citizens and more than 300 decentralized power plants in citizens' hands. Together, we are making the energy transition (Energiewende) happen.

The energy cooperatives of the Bürgerwerke: regional everywhere

Green electricity from citizens' hands: our plants
Together, the energy cooperatives of the Bürgerwerke currently operate more than 300 plants with a combined capacity of over 23 megawatts. Some of the renewable energy plants shown on the map here feed directly into the Bürgerwerke's Bürgerstrom tariff.

First, an important note: you can of course also buy Bürgerstrom from the Bürgerwerke if you are not a member of an energy cooperative. The Bürgerwerke are a cooperative whose members are 100% energy cooperatives. As a member of an energy cooperative, you enjoy many advantages:

9.7. itertools: Functions creating iterators for efficient looping

This module implements a number of iterator building blocks inspired by constructs from APL, Haskell, and SML. Each has been recast in a form suitable for Python.

The module standardizes a core set of fast, memory-efficient tools that are useful by themselves or in combination. For instance, SML provides a tabulation tool, tabulate(f), which produces a sequence f(0), f(1), .... These tools and their built-in counterparts also work well with the high-speed functions in the operator module.

Infinite iterators:
Iterators terminating on the shortest input sequence:
Combinatoric generators:

9.7.1.
The following module functions all construct and return iterators.

itertools.chain(*iterables)
Make an iterator that returns elements from the first iterable until it is exhausted, then proceeds to the next iterable, until all of the iterables are exhausted. Roughly equivalent to:

```python
def chain(*iterables):
    # chain('ABC', 'DEF') --> A B C D E F
    for it in iterables:
        for element in it:
            yield element
```
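The introduction mentions SML's tabulate(f) and the operator module without showing either. A minimal sketch, assuming Python 3 (where map() is lazy): tabulate can be built by mapping f over itertools.count(), and operator.mul can be mapped pairwise over two vectors to form a dot product. The names tabulate and dot_product are illustrative helpers, not functions exported by itertools.

```python
from itertools import count, islice
import operator


def tabulate(f, start=0):
    """Return an infinite iterator over f(start), f(start + 1), ...

    Built from map() and itertools.count(); nothing is computed until consumed.
    """
    return map(f, count(start))


def dot_product(vec1, vec2):
    # operator.mul is applied pairwise across both vectors; sum() adds the products
    return sum(map(operator.mul, vec1, vec2))


# tabulate() never ends, so take a finite prefix with islice()
squares = list(islice(tabulate(lambda n: n * n), 5))
print(squares)                            # [0, 1, 4, 9, 16]
print(dot_product([1, 2, 3], [4, 5, 6]))  # 32
```

Because both helpers only compose lazy iterators, no intermediate list is built until islice() or sum() consumes the values.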

Recetario (Cookbook) - PyAr - Python Argentina
Our cookbook, still under development. This is the place to turn whenever you find yourself in the Python kitchen, knife in hand, and realize your ingredients are missing an expert chef's touch. Our specialty is home-grown recipes. Master dishes that fail when seasoned with accents and eñes?

1.1. Recetario/CreandoUnNuevoProyectoPython: a recipe for creating a working environment and a minimal skeleton for a new Python project.
1.2. Autocompletion in the interactive console: a tip on how to add tab completion to the interactive console, imitating the behavior of IPython.
3.1. /ExtraerMails: extracting e-mail addresses from a text using the re module.
4.1. aLetras: a function that takes a number and converts it to words.
4.2. Reverse: a function that reverses the characters of a string.
4.3. validar_cuit (/ValidarCuit): a function that validates a CUIT/CUIL in the format 00-00000000-0.
4.4. digito_verificador_modulo10
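Three of the recipes listed above (extracting e-mails with re, reversing a string, and validating a CUIT/CUIL) can be sketched in a few lines. These are hypothetical helpers written for illustration, not the PyAr wiki's own code. The e-mail pattern is a deliberately simple assumption that will miss some valid addresses, and the CUIT check assumes the widely used modulo-11 scheme with weights 5, 4, 3, 2, 7, 6, 5, 4, 3, 2, treating a computed check digit of 10 as invalid.

```python
import re

# Hypothetical sketches of three recipes; not the PyAr wiki's implementations.

# Simple assumption: local part, "@", then at least one dotted domain label
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def extract_emails(text):
    """Return every substring of text that looks like an e-mail address."""
    return EMAIL_RE.findall(text)


def reverse(s):
    """Return s with its characters in reverse order."""
    return s[::-1]


# Weights of the commonly used modulo-11 scheme for CUIT/CUIL check digits
CUIT_WEIGHTS = (5, 4, 3, 2, 7, 6, 5, 4, 3, 2)


def cuit_is_valid(cuit):
    """Check a CUIT/CUIL written as 00-00000000-0."""
    if not re.fullmatch(r"\d{2}-\d{8}-\d", cuit):
        return False
    digits = [int(c) for c in cuit if c.isdigit()]
    # Weighted sum of the first ten digits
    total = sum(d * w for d, w in zip(digits[:10], CUIT_WEIGHTS))
    check = 11 - total % 11
    if check == 11:
        check = 0
    elif check == 10:
        return False  # assumption: a computed 10 is treated as invalid
    return check == digits[10]


print(extract_emails("Write to ana@example.com or info@python.org.ar."))
# ['ana@example.com', 'info@python.org.ar']
print(reverse("Python"))               # nohtyP
print(cuit_is_valid("20-12345678-6"))  # True
print(cuit_is_valid("20-12345678-5"))  # False
```

Slicing with [::-1] reverses code points, so precomposed characters such as ñ survive intact; text using combining accents would need normalization first.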

The 5 best (free) online photo editors - WebCampus - the complete e-learning solution
In this article we present the five best free online photo editors. All of the programs are web-based, so they open directly in your web browser without having to download anything. This lets you edit your images creatively and individually and then reuse the enhanced result (for example, by integrating it into your WebCampus).

No. 1: befunky (WebCampus recommendation)
befunky offers three free services in one: photo editing, a collage maker and a designer.

No. 2: Pixlr
In addition to desktop and mobile software, Pixlr offers two web apps that open directly in the browser: Pixlr Express and Pixlr Editor.

No. 3: Fotor
Like befunky, Fotor offers three programs in one: Editor, Collage and Design.

No. 4: Pablo by Buffer
Buffer also offers free image editing software that requires no registration: Pablo.

No. 5: Stencil

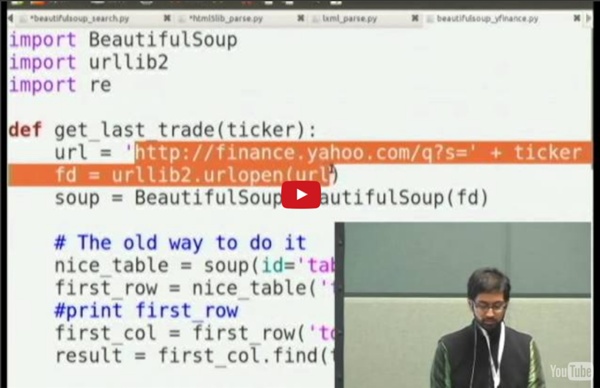

Beautiful Soup Documentation | Beautiful Soup 4.2.0 documentation
Beautiful Soup is a Python library for pulling data out of HTML and XML files. It works with your favorite parser to provide idiomatic ways of navigating, searching, and modifying the parse tree. It commonly saves programmers hours or days of work.

These instructions illustrate all major features of Beautiful Soup 4, with examples. I show you what the library is good for, how it works, how to use it, how to make it do what you want, and what to do when it violates your expectations.

This document covers Beautiful Soup version 4.12.1. You might be looking for the documentation for Beautiful Soup 3.

This documentation has been translated into other languages by Beautiful Soup users:

Getting help
If you have questions about Beautiful Soup, or run into problems, send mail to the discussion group. When reporting an error in this documentation, please mention which translation you're reading.

Here's an HTML document I'll be using as an example throughout this document.

$ python setup.py install
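The introduction promises ways of navigating, searching, and modifying the parse tree. A minimal sketch of all three, assuming the bs4 package is installed; the HTML snippet below is a hypothetical example, not the document the official guide uses. It passes "html.parser" (Python's built-in parser) so no extra parser package is required.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML used only for this illustration
html_doc = """
<html><head><title>Cheese shop</title></head>
<body>
  <p class="intro">Our cheeses:</p>
  <a href="/cheese/mozzarella" class="cheese">Mozzarella</a>
  <a href="/cheese/caerphilly" class="cheese">Caerphilly</a>
</body></html>
"""

# "html.parser" ships with Python, so no third-party parser is needed
soup = BeautifulSoup(html_doc, "html.parser")

# Navigating: attribute access walks to the first matching tag
print(soup.title.string)  # Cheese shop

# Searching: find_all() returns every matching tag; class_ avoids the keyword clash
links = [a["href"] for a in soup.find_all("a", class_="cheese")]
print(links)  # ['/cheese/mozzarella', '/cheese/caerphilly']

# Modifying: assigning to .string replaces the tag's contents in place
soup.title.string = "Cheese shop (updated)"
print(soup.title)  # <title>Cheese shop (updated)</title>
```

Swapping "html.parser" for another installed parser such as "lxml" changes speed and leniency, but the navigation and search calls stay the same.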

Twisted Documentation

Introduction
Executive summary: Connecting your software - and having fun too!
Getting Started
Networking and Other Event Sources
Twisted Internet: A brief overview of the twisted.internet package.
Reactor basics: The event loop at the core of your program.
Version: 13.2.0

FMin · hyperopt/hyperopt Wiki
This page is a tutorial on basic usage of hyperopt.fmin(). It covers how to write an objective function that fmin can optimize, and how to describe a search space that fmin can search.

Hyperopt's job is to find the best value of a scalar-valued, possibly-stochastic function over a set of possible arguments to that function. The way to use hyperopt is to describe:

- the objective function to minimize
- the space over which to search
- the database in which to store all the point evaluations of the search
- the search algorithm to use

This (most basic) tutorial will walk through how to write functions and search spaces, using the default Trials database and the dummy random search algorithm. Parallel search is possible when replacing the Trials database with a MongoTrials one; there is another wiki page on the subject of using MongoDB for parallel search.

Choosing the search algorithm is as simple as passing algo=hyperopt.tpe.suggest instead of algo=hyperopt.rand.suggest.

1.1 The Simplest Case
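To make the four ingredients listed above concrete without depending on hyperopt itself, here is a dependency-free sketch of plain random search. It is not hyperopt's API: minimize, random_suggest, the (low, high) bounds format for the space, and the list used as a trials "database" are all hypothetical stand-ins chosen for illustration, and only uniform continuous parameters are supported.

```python
import random


def random_suggest(space, rng):
    """Hypothetical 'search algorithm': sample each parameter uniformly."""
    return {name: rng.uniform(low, high) for name, (low, high) in space.items()}


def minimize(objective, space, max_evals, trials, seed=0):
    """Hypothetical stand-in for fmin using random search.

    objective: takes a dict of parameters, returns a float loss to minimize
    space:     dict mapping parameter name -> (low, high) bounds
    trials:    list acting as the database of every point evaluation
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    for _ in range(max_evals):
        params = random_suggest(space, rng)
        trials.append({"params": params, "loss": objective(params)})
    # The best point is simply the stored evaluation with the lowest loss
    return min(trials, key=lambda t: t["loss"])["params"]


trials = []
best = minimize(lambda p: (p["x"] - 3) ** 2, {"x": (-10, 10)},
                max_evals=200, trials=trials)
print(len(trials))  # 200
print(best)         # a value of x near 3
```

Keeping the sampler separate from the loop mirrors the idea in the tutorial that the search algorithm is a pluggable choice: a smarter sampler could replace random_suggest without changing how results are recorded.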

PyFlickrStreamr 0.1
Download PyFlickrStreamr-0.1.tar.gz
PyFlickrStreamr provides a continuous, blocking Python interface for streaming Flickr photos in near real-time.

Luftfahrt-Bundesamt - Conciliation
As the national enforcement and complaints body for, among others, Regulations (EC) No 261/2004 and No 1107/2006, the Luftfahrt-Bundesamt (German Federal Aviation Office) provides information on the essential aspects of the national conciliation procedure in air transport.

What advantages does the introduction of the conciliation procedure in air transport offer me as a passenger?
With the introduction of conciliation in air transport, passengers have a fast, free and effective way to enforce their civil-law claims. Passengers can still hire a lawyer or a debt collection agency to enforce those claims. In that case, however, the passenger bears the cost risk, whereas the conciliation procedure is, as a rule, free of charge for the passenger.

What happens?

What responsibilities does the Luftfahrt-Bundesamt have in connection with Regulations (EC) No 261/2004 and No 1107/2006?

Who do I contact?