GRASP (object-oriented design) General Responsibility Assignment Software Patterns (or Principles), abbreviated GRASP, consists of guidelines for assigning responsibility to classes and objects in object-oriented design. Larman states that "the critical design tool for software development is a mind well educated in design principles. It is not the UML or any other technology." Thus, GRASP is really a mental toolset, a learning aid to help in the design of object-oriented software. The Controller pattern assigns the responsibility of dealing with system events to a non-UI class that represents the overall system or a use case scenario. A use case controller should be used to deal with all system events of a use case, and may be used for more than one use case (for instance, for use cases Create User and Delete User, one can have a single UserController, instead of two separate use case controllers). It is defined as the first object beyond the UI layer that receives and coordinates ("controls") a system operation.
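
The Controller pattern described above can be sketched in Java roughly as follows. This is a minimal illustration, not a reference implementation: `UserStore`, `UserController` and their methods are hypothetical names, and the in-memory set stands in for whatever domain layer a real system would have. It shows a single use case controller receiving the system operations of both the Create User and Delete User use cases.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical domain-layer store; stands in for whatever holds users.
class UserStore {
    private final Set<String> names = new HashSet<>();

    boolean add(String name) { return names.add(name); }
    boolean remove(String name) { return names.remove(name); }
    boolean contains(String name) { return names.contains(name); }
}

// Use case controller: the first non-UI object to receive the system
// operations of both the Create User and Delete User use cases.
class UserController {
    private final UserStore store;

    UserController(UserStore store) { this.store = store; }

    // System operation for the Create User use case.
    boolean createUser(String name) {
        if (name == null || name.trim().isEmpty()) {
            return false; // reject invalid input before touching the domain
        }
        return store.add(name);
    }

    // System operation for the Delete User use case.
    boolean deleteUser(String name) {
        return store.remove(name);
    }
}

// Stand-in for the UI layer: it only forwards events to the controller.
class ControllerDemo {
    public static void main(String[] args) {
        UserController controller = new UserController(new UserStore());
        System.out.println(controller.createUser("ada")); // true
        System.out.println(controller.deleteUser("ada")); // true
        System.out.println(controller.deleteUser("ada")); // false
    }
}
```

The UI stand-in holds no logic of its own, so the same controller could sit behind a different front end without change.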

Encapsulation (object-oriented programming) In programming languages, encapsulation is used to refer to one of two related but distinct notions, and sometimes to the combination[1][2] thereof: a language mechanism for restricting direct access to some of an object's components, and a language construct that bundles data with the methods operating on that data. The second definition is motivated by the fact that in many OOP languages hiding of components is not automatic or can be overridden; thus, information hiding is defined as a separate notion by those who prefer the second definition.

```csharp
class Program
{
    public class Account
    {
        private decimal accountBalance = 500.00m;

        public decimal CheckBalance()
        {
            return accountBalance;
        }
    }

    static void Main()
    {
        Account myAccount = new Account();
        decimal myBalance = myAccount.CheckBalance();

        /* This Main method can check the balance via the public
         * "CheckBalance" method provided by the "Account" class
         * but it cannot manipulate the value of "accountBalance" */
    }
}
```

Below is an example in Java: Encapsulation is also possible in older, non-object-oriented languages. Clients call the API functions to allocate, operate on, and deallocate objects of an opaque type.
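
The Java example mentioned above is not reproduced in this excerpt. As a rough stand-in, here is a Java counterpart of the `Account` class, written under the assumption that `BigDecimal` is an acceptable substitute for the C# `decimal` type; the `EncapsulationDemo` class is a hypothetical name added only to make the sketch runnable.

```java
import java.math.BigDecimal;

// Java counterpart of the Account class above; names mirror the C# version.
class Account {
    // Private field: code outside this class cannot read or assign it directly.
    private BigDecimal accountBalance = new BigDecimal("500.00");

    // Public read-only accessor. BigDecimal is immutable, so handing out
    // the reference does not let callers change the stored balance.
    public BigDecimal checkBalance() {
        return accountBalance;
    }
}

class EncapsulationDemo {
    public static void main(String[] args) {
        Account myAccount = new Account();
        BigDecimal myBalance = myAccount.checkBalance();
        System.out.println(myBalance); // prints 500.00

        // The next line would not compile, because accountBalance is private:
        // myAccount.accountBalance = BigDecimal.ZERO;
    }
}
```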

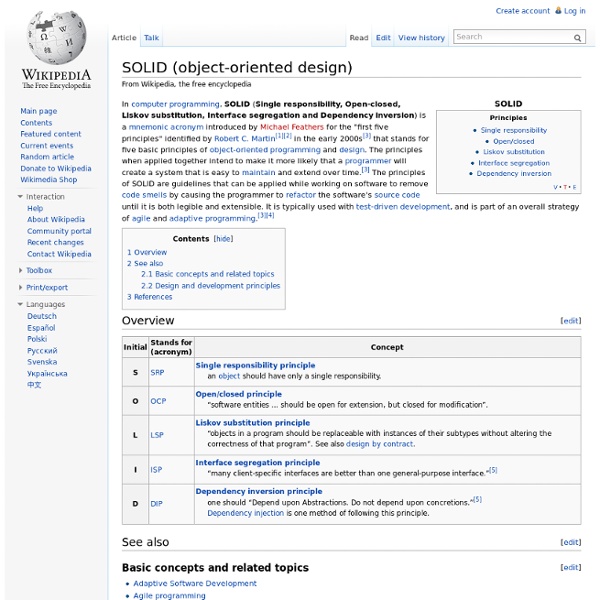

ArticleS.UncleBob.PrinciplesOfOod The Principles of OOD What is object oriented design? What is it all about? What are its benefits? Of all the revolutions that have occurred in our industry, two have been so successful that they have permeated our mentality to the extent that we take them for granted. Programs written in these languages may look structured and object oriented, but looks can be deceiving. In March of 1995, in comp.object, I wrote an article that was the first glimmer of a set of principles for OOD that I have written about many times since. These principles expose the dependency management aspects of OOD as opposed to the conceptualization and modeling aspects. Dependency Management is an issue that most of us have faced. The first five principles are principles of class design. The next six principles are about packages. The first three package principles are about package cohesion; they tell us what to put inside packages.

Separation of concerns The value of separation of concerns is simplifying development and maintenance of computer programs. When concerns are well-separated, individual sections can be reused, as well as developed and updated independently. Of special value is the ability to later improve or modify one section of code without having to know the details of other sections, and without having to make corresponding changes to those sections. Separation of concerns is an important design principle in many other areas as well, such as urban planning, architecture and information design.[5] The goal is to more effectively understand, design, and manage complex interdependent systems, so that functions can be reused, optimized independently of other functions, and insulated from the potential failure of other functions. The term separation of concerns was probably coined by Edsger W. Dijkstra, who wrote: "Let me try to explain to you, what to my taste is characteristic for all intelligent thinking."

Data access object Although this design pattern is equally applicable to most programming languages, most types of software with persistence needs, and most types of databases, it is traditionally associated with Java EE applications and with relational databases accessed via the JDBC API because of its origin in Sun Microsystems' best practice guidelines[1] ("Core J2EE Patterns") for that platform. The advantage of using data access objects is the relatively simple and rigorous separation between two important parts of an application that can and should know almost nothing of each other, and which can be expected to evolve frequently and independently. Data access objects can be used in a large percentage of applications (anywhere data storage is required), hide all details of data storage from the rest of the application, and act as an intermediary between the application and the database.
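
The separation described above might look like this in Java. It is a sketch under stated assumptions: `Customer`, `CustomerDao` and `InMemoryCustomerDao` are hypothetical names, and the in-memory map replaces a real database only so that the example runs on its own. A JDBC-backed class could implement the same interface without any change to the calling code.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical entity used only for this illustration.
final class Customer {
    final int id;
    final String name;

    Customer(int id, String name) {
        this.id = id;
        this.name = name;
    }
}

// The DAO interface is all the rest of the application sees.
interface CustomerDao {
    void save(Customer customer);
    Optional<Customer> findById(int id);
    boolean delete(int id);
}

// In-memory implementation (an assumption made to keep the sketch runnable).
class InMemoryCustomerDao implements CustomerDao {
    private final Map<Integer, Customer> rows = new HashMap<>();

    @Override
    public void save(Customer customer) {
        rows.put(customer.id, customer); // insert or overwrite by id
    }

    @Override
    public Optional<Customer> findById(int id) {
        return Optional.ofNullable(rows.get(id));
    }

    @Override
    public boolean delete(int id) {
        return rows.remove(id) != null; // true only if a row existed
    }
}

class DaoDemo {
    public static void main(String[] args) {
        CustomerDao dao = new InMemoryCustomerDao();
        dao.save(new Customer(1, "Ada"));
        System.out.println(dao.findById(1).map(c -> c.name).orElse("none")); // Ada
        System.out.println(dao.delete(1));                                   // true
        System.out.println(dao.findById(1).isPresent());                     // false
    }
}
```

`DaoDemo` depends only on the `CustomerDao` interface, which is what lets the storage side evolve independently.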

Clean Coders

You ain't gonna need it "You aren't gonna need it"[1][2] (acronym: YAGNI)[3] is a principle of extreme programming (XP) that states a programmer should not add functionality until deemed necessary.[4] Ron Jeffries writes, "Always implement things when you actually need them, never when you just foresee that you need them."[5] The phrase also appears as "You aren't going to need it"[6][7] or "You ain't gonna need it". YAGNI is a principle behind the XP practice of "do the simplest thing that could possibly work" (DTSTTCPW).[2][3] It is meant to be used in combination with several other practices, such as continuous refactoring, continuous automated unit testing and continuous integration. Used without continuous refactoring, it could lead to messy code and massive rework. YAGNI is not universally accepted as a valid principle, even in combination with the supporting practices.

ValueObject In P of EAA I described Value Object as a small object such as a Money or date range object. Their key property is that they follow value semantics rather than reference semantics. You can usually tell them because their notion of equality isn't based on identity; instead, two value objects are equal if all their fields are equal. Although all fields are equal, you don't need to compare all fields if a subset is unique - for example currency codes for currency objects are enough to test equality. A general heuristic is that value objects should be entirely immutable. Early J2EE literature used the term value object to describe a different notion, what I call a Data Transfer Object. You can find some more good material on value objects on the wiki and by Dirk Riehle.
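
A minimal Java sketch of a Money value object along these lines follows. The class layout is an assumption made for illustration: amounts are stored as a whole number of cents in a `long`, and `plus` is a hypothetical operation added to show that an immutable object returns a new instance instead of changing itself.

```java
import java.util.Objects;

// Immutable value object: equality depends on field values, not identity.
final class Money {
    private final long cents;      // amount in minor units (assumption)
    private final String currency; // e.g. an ISO currency code

    Money(long cents, String currency) {
        this.cents = cents;
        this.currency = Objects.requireNonNull(currency);
    }

    // Returns a new Money; neither operand is modified.
    Money plus(Money other) {
        if (!currency.equals(other.currency)) {
            throw new IllegalArgumentException("currency mismatch");
        }
        return new Money(cents + other.cents, currency);
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof Money)) return false;
        Money m = (Money) o;
        return cents == m.cents && currency.equals(m.currency);
    }

    // Must agree with equals so equal values behave alike in hash collections.
    @Override
    public int hashCode() {
        return Objects.hash(cents, currency);
    }

    @Override
    public String toString() {
        return cents + " cents " + currency;
    }
}

class ValueObjectDemo {
    public static void main(String[] args) {
        Money a = new Money(1050, "EUR");
        Money b = new Money(1050, "EUR");
        System.out.println(a == b);      // false: two distinct objects
        System.out.println(a.equals(b)); // true: same field values
        System.out.println(a.plus(b));   // 2100 cents EUR
    }
}
```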

Core J2EE Patterns

Don't repeat yourself Violations of DRY are typically referred to as WET solutions, which is commonly taken to stand for either "write everything twice" or "we enjoy typing".[2][3]

Data transfer object A data transfer object (DTO)[1][2] is an object that carries data between processes. The motivation for its use is that communication between processes is usually done through remote interfaces (e.g. web services), where each call is an expensive operation.[2] Because the majority of the cost of each call is related to the round-trip time between the client and the server, one way of reducing the number of calls is to use an object (the DTO) that aggregates the data that would have been transferred by the several calls, but that is served by one call only.[2] This pattern is often incorrectly used outside of remote interfaces. This has triggered a response from its author[3] in which he reiterates that the whole purpose of DTOs is to shift data in expensive remote calls. Note: A value object is not a DTO.
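
The round-trip argument above can be made concrete with a small Java sketch. Everything here is hypothetical: `RemoteCustomerService` only simulates a remote interface by counting calls, and the hard-coded field values are placeholders. It contrasts three fine-grained calls with one call that returns a DTO.

```java
import java.io.Serializable;

// Data carrier only: no behaviour beyond holding the fields.
final class CustomerDto implements Serializable {
    private static final long serialVersionUID = 1L;

    final String name;
    final String email;
    final String city;

    CustomerDto(String name, String email, String city) {
        this.name = name;
        this.email = email;
        this.city = city;
    }
}

// Hypothetical remote facade; every method call stands for one round trip.
class RemoteCustomerService {
    private int roundTrips = 0;

    // Fine-grained interface: one round trip per field.
    String fetchName(int id)  { roundTrips++; return "Ada"; }
    String fetchEmail(int id) { roundTrips++; return "ada@example.com"; }
    String fetchCity(int id)  { roundTrips++; return "London"; }

    // Coarse-grained interface: one round trip returns all fields in a DTO.
    CustomerDto fetchCustomer(int id) {
        roundTrips++;
        return new CustomerDto("Ada", "ada@example.com", "London");
    }

    int roundTrips() { return roundTrips; }
}

class DtoDemo {
    public static void main(String[] args) {
        RemoteCustomerService fineGrained = new RemoteCustomerService();
        fineGrained.fetchName(1);
        fineGrained.fetchEmail(1);
        fineGrained.fetchCity(1);
        System.out.println(fineGrained.roundTrips()); // 3

        RemoteCustomerService coarse = new RemoteCustomerService();
        CustomerDto dto = coarse.fetchCustomer(1);
        System.out.println(coarse.roundTrips());      // 1
        System.out.println(dto.name + ", " + dto.city);
    }
}
```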

The Unit Of Work Pattern And Persistence Ignorance Patterns in Practice, Jeremy Miller. In the April 2009 issue of MSDN Magazine ("Persistence Patterns") I presented some common patterns that you will encounter when using some sort of Object/Relational Mapping (O/RM) technology to persist business entity objects. In this article, I would like to continue the discussion of persistence patterns with the Unit of Work design pattern and examine the issues around persistence ignorance. One of the most common design patterns in enterprise software development is the Unit of Work. The Unit of Work pattern isn't necessarily something that you will explicitly build yourself, but the pattern shows up in almost every persistence tool that I'm aware of. Other times, you may want to write your own application-specific Unit of Work interface or class that wraps the inner Unit of Work from your persistence tool. One of the Unit of Work's responsibilities is to manage transactions.
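
As a rough illustration of an application-specific Unit of Work, the Java sketch below collects pending changes and applies them together on commit. It is not taken from the article: `FakeDatabase`, `UnitOfWork` and their method names are hypothetical, and the in-memory map only imitates a database. A real implementation would wrap the commit step in a transaction provided by the persistence tool.

```java
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical backing store standing in for a database table.
class FakeDatabase {
    final Map<Integer, String> rows = new LinkedHashMap<>();
}

// Tracks changes made during a business operation and writes them in one step.
class UnitOfWork {
    private final FakeDatabase db;
    private final Map<Integer, String> pendingSaves = new LinkedHashMap<>();
    private final Set<Integer> pendingDeletes = new LinkedHashSet<>();

    UnitOfWork(FakeDatabase db) { this.db = db; }

    // Record an insert or update; nothing reaches the database yet.
    void registerSave(int id, String value) {
        pendingDeletes.remove(id);
        pendingSaves.put(id, value);
    }

    // Record a delete; a pending save for the same id is dropped.
    void registerDelete(int id) {
        pendingSaves.remove(id);
        pendingDeletes.add(id);
    }

    // Apply all pending changes together, then start with a clean slate.
    void commit() {
        db.rows.putAll(pendingSaves);
        for (Integer id : pendingDeletes) {
            db.rows.remove(id);
        }
        clear();
    }

    // Discard all pending changes without touching the database.
    void rollback() { clear(); }

    private void clear() {
        pendingSaves.clear();
        pendingDeletes.clear();
    }
}

class UnitOfWorkDemo {
    public static void main(String[] args) {
        FakeDatabase db = new FakeDatabase();
        UnitOfWork work = new UnitOfWork(db);
        work.registerSave(1, "first");
        work.registerSave(2, "second");
        System.out.println(db.rows.size()); // 0: nothing written before commit
        work.commit();
        System.out.println(db.rows.size()); // 2
    }
}
```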

KISS principle Design principle preferring simplicity. While popular usage has transcribed it for decades as "Keep it simple, stupid", Johnson transcribed it simply as "Keep it simple stupid" (no comma), and this reading is still used by many authors.[9] The principle is best exemplified by the story of Johnson handing a team of design engineers a handful of tools, with the challenge that the jet aircraft they were designing must be repairable by an average mechanic in the field under combat conditions with only these tools. The acronym has been used by many in the U.S. military. The principle most likely finds its origins in similar minimalist concepts. Heath Robinson contraptions and Rube Goldberg's machines, intentionally overly-complex solutions to simple tasks or problems, are humorous examples of "non-KISS" solutions.

RESTful ?