Data Dumps - Freebase API

Data Dumps are a downloadable version of the data in Freebase. They constitute a snapshot of the data stored in Freebase and the Schema that structures it, and are provided under the same CC-BY license. The Freebase/Wikidata mappings are provided under the CC0 license.

Freebase Triples

The RDF data is serialized using the N-Triples format, encoded as UTF-8 text and compressed with Gzip. Each line holds one triple, terminated by a period:

<subject> <predicate> <object> .

The subject is the ID of a Freebase object. Objects can be typed literals: a quoted value such as "2001-02", followed by ^^ and the datatype IRI in angle brackets.

Note: In Freebase, objects have MIDs that look like /m/012rkqx.

If you're writing your own code to parse the RDF dumps, it's often more efficient to read directly from the Gzip file than to extract the data first and then process the uncompressed data.

Topic descriptions often contain newlines.

Freebase Deleted Triples

The columns in the dataset are defined as:

License
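The advice above about reading directly from the Gzip file can be sketched in Python as follows. This is a minimal illustration, not an official parser: the function name `iter_triples` is hypothetical, and the sketch assumes that each line holds exactly one triple and that the subject and predicate contain no whitespace. It does not validate IRIs or unescape literals.

```python
import gzip


def iter_triples(path):
    """Yield (subject, predicate, object) strings from a gzipped N-Triples file.

    The file is decompressed as a stream, so the uncompressed dump is never
    written to disk or held in memory as a whole.
    """
    with gzip.open(path, "rt", encoding="utf-8") as fh:
        for line in fh:
            line = line.strip()
            # Skip blank lines and N-Triples comment lines.
            if not line or line.startswith("#"):
                continue
            # Drop the terminating period and any whitespace before it.
            if line.endswith("."):
                line = line[:-1].rstrip()
            # Split on the first two whitespace runs only: the object may be
            # a quoted literal that itself contains spaces.
            parts = line.split(None, 2)
            if len(parts) != 3:
                continue  # assumption: silently skip malformed lines
            yield tuple(parts)
```

A caller would loop over `iter_triples("dump.gz")`, where `dump.gz` is a placeholder for wherever the downloaded dump is stored, and process one triple at a time.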

Basic Concepts - Freebase API

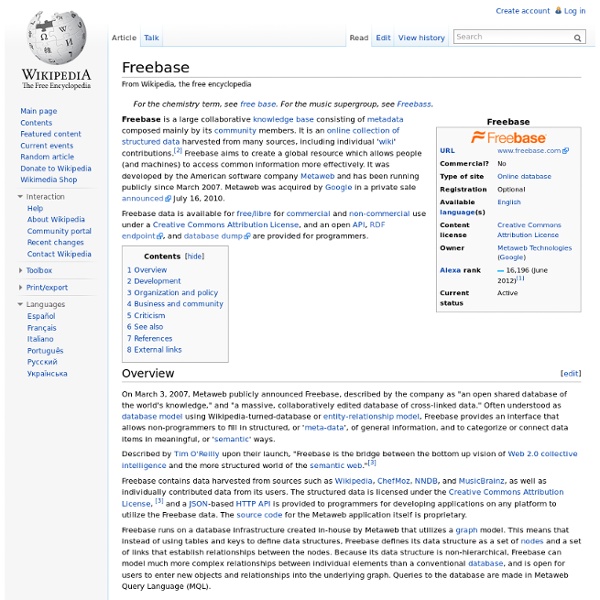

If you are new to Freebase, this section covers the basic terminology and concepts required to understand how Freebase works.

Graphs

Topics

Freebase has over 39 million topics about real-world entities like people, places and things. Since Freebase data is represented as a graph, these topics correspond to the nodes in the graph. However, not every node is a topic.

Examples of the types of topics found in Freebase: physical entities, e.g., Bob Dylan, the Louvre Museum, the planet Saturn; artistic/media creations, e.g., The Dark Knight (film), Hotel California (song); classifications, e.g., noble gas, Chordate; abstract concepts, e.g., love; and schools of thought or artistic movements, e.g., Impressionism.

Some topics are notable because they hold a lot of data (e.g., Wal-Mart), and some are notable because they link to many other topics, potentially in different domains of information.

Types and Properties

Any given topic can be seen from many different perspectives, for example:

Introducing the Knowledge Graph: things, not strings

Cross-posted on the Inside Search Blog

Search is a lot about discovery: the basic human need to learn and broaden your horizons. But searching still requires a lot of hard work by you, the user. So today I’m really excited to launch the Knowledge Graph, which will help you discover new information quickly and easily.

Take a query like [taj mahal]. But we all know that [taj mahal] has a much richer meaning.

The Knowledge Graph enables you to search for things, people or places that Google knows about (landmarks, celebrities, cities, sports teams, buildings, geographical features, movies, celestial objects, works of art and more) and instantly get information that’s relevant to your query. Google’s Knowledge Graph isn’t just rooted in public sources such as Freebase, Wikipedia and the CIA World Factbook.

The Knowledge Graph enhances Google Search in three main ways to start:

1.
2. How do we know which facts are most likely to be needed for each item?
3.