Agriculture grows in mathematics

Reducing pesticides and fertilizers, predicting harvests, adapting to climate change... Agriculture faces many challenges, and mathematicians have solutions to offer. A look at their work on the occasion of the Salon de l'agriculture, held at the Porte de Versailles until March 3. Can agriculture be put into equations? Simulation of plant growth (sugar beet, Arabidopsis, wheat, rice, maize, sunflower, chrysanthemum, cucumber, tomato, bell pepper, coffee tree, pine) by the Digiplante software from the mathematics and computer science laboratory at CentraleSupélec. Predicting the harvest with models. Seed companies developing new varieties also benefit substantially from these models, which let them optimize their trials: of one hundred trials of a new variety carried out in different parts of the world, 20 are actually conducted under growing conditions, and the remaining 80 are run by simulation.

Strawberry Perl for Windows

Maimosine puts the world into models

From physical exercise to overheating microprocessors, the mathematicians at Maimosine, a center for modeling and numerical simulation, help researchers and industrial companies in the Grenoble area better understand certain complex phenomena. What do a runner in full effort, a part vibrating in an engine, sea ice breaking up, and overheating microprocessors have in common? They are all subjects of study for the mathematicians of the Maison de la modélisation et de la simulation, nanosciences et environnement (Maimosine). Created in Grenoble in 2010 and perched atop a tower overlooking the university campus, it is a refuge for the region's researchers and companies when a complex phenomenon eludes them. "To model is to put a phenomenon into equations, to simplify it." Optimizing physical effort. One of the camps of the Damodar expedition in Nepal. Locating a vibration phenomenon. The goal?

Install Rust - Rust Programming Language

Getting started. If you're just getting started with Rust and would like a more detailed walk-through, see our getting started page. Toolchain management with rustup. Rust is installed and managed by the rustup tool. Rust has a 6-week rapid release process and supports a great number of platforms, so there are many builds of Rust available at any time. rustup manages these builds in a consistent way on every platform that Rust supports, enabling installation of Rust from the beta and nightly release channels as well as support for additional cross-compilation targets. If you've installed rustup in the past, you can update your installation by running rustup update. For more information see the rustup documentation. Configuring the PATH environment variable. In the Rust development environment, all tools are installed to the ~/.cargo/bin directory (%USERPROFILE%\.cargo\bin on Windows), and this is where you will find the Rust toolchain, including rustc, cargo, and rustup. Uninstall Rust.
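As a rough illustration of the PATH configuration described above, the following Python sketch builds the default Cargo bin directory for a Unix-like or Windows environment and checks whether it appears on PATH. The helper names default_cargo_bin and on_path are hypothetical, the comparison is a plain string match with no path normalization, and a custom CARGO_HOME is deliberately not handled.

```python
import os


def default_cargo_bin(env=None, windows=None):
    """Return the default Cargo bin directory, or None if the home variable is unset.

    Hypothetical helper: it only mirrors the two default locations quoted above
    (~/.cargo/bin and %USERPROFILE%\\.cargo\\bin) and ignores CARGO_HOME.
    """
    env = os.environ if env is None else env
    windows = (os.name == "nt") if windows is None else windows
    home = env.get("USERPROFILE" if windows else "HOME")
    if home is None:
        return None
    sep = "\\" if windows else "/"
    # Drop a trailing separator so the result has no doubled separator.
    return home.rstrip(sep) + sep + ".cargo" + sep + "bin"


def on_path(directory, env=None, windows=None):
    """Return True if `directory` is listed verbatim in the PATH variable."""
    env = os.environ if env is None else env
    windows = (os.name == "nt") if windows is None else windows
    pathsep = ";" if windows else ":"
    entries = [entry for entry in env.get("PATH", "").split(pathsep) if entry]
    return directory in entries


if __name__ == "__main__":
    cargo_bin = default_cargo_bin()
    if cargo_bin is None:
        print("Home directory variable is not set")
    elif on_path(cargo_bin):
        print(cargo_bin, "is on PATH")
    else:
        print(cargo_bin, "is not on PATH")
```

Passing an explicit env dictionary and windows flag keeps the two helpers easy to check without touching the real environment.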

Model more to simulate less

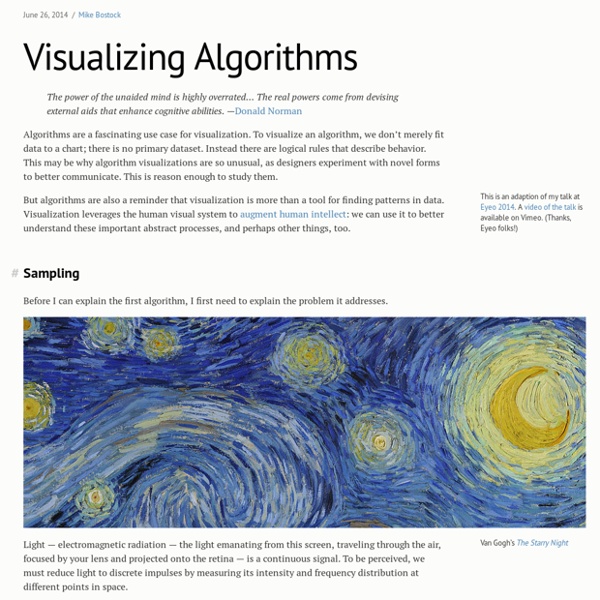

On the occasion of the conference "Modélisation : succès et limites" (Modeling: Successes and Limits), researcher Frédéric Alexandre sheds light on current developments in simulation and modeling. Frédéric Alexandre, you are a researcher at the Laboratoire bordelais de recherche en informatique (LaBRI) and a speaker at the conference, which takes place on December 6, 2016. What exactly is meant today by modeling and simulation? Visualization from a numerical simulation of droplet impacts on a liquid surface. In what way has this modeling-simulation approach transformed how research is done in certain disciplines? Over the past fifteen years, advances in algorithms have contributed as much to speeding up computations as processor power has. We have long been told that Moore's law, which describes the steady growth in computer power, is coming to an end.

Other Installation Methods · The Rust Programming Language

Which installer should you use? Rust runs on many platforms, and there are many ways to install Rust. If you want to install Rust in the most straightforward, recommended way, then follow the instructions on the main installation page. That page describes installation via rustup, a tool that manages multiple Rust toolchains in a consistent way across all platforms Rust supports. Why might one not want to install using those instructions? Offline installation: rustup downloads components from the internet on demand. Rust’s platform support is defined in three tiers, which correspond closely with the installation methods available: in general, the Rust project provides binary builds for all tier 1 and tier 2 platforms, and they are all installable via rustup. Other ways to install rustup. The way to install rustup differs by platform: on Unix, run curl -sSf | sh in your shell. Options can be passed to the installer like this: curl -sSf | sh -s -- --help. Standalone installers.

How to calculate the price of computing

The new Jean-Zay supercomputer, installed at Idris, the CNRS's high-performance computing center, is one of the computers funded for and made available to French research. But how does one buy such a machine? What will it bring to artificial intelligence? Some answers in this post by Denis Veynante, published with Libération. Once a month, find on our site the Inédits du CNRS, original scientific analyses published in partnership with Libération. Buying a supercomputer is no small matter. The new Jean-Zay supercomputer, acquired in January 2019, is installed at the Institut du développement et des ressources en informatique scientifique (Idris) of the CNRS. A tight memory market. Thus, machines sold under different brands may hide the same Intel processors, NVIDIA graphics accelerators, DDN flash arrays, Mellanox network, etc. Energy solution. AI's ally.

Arb - a C library for arbitrary-precision ball arithmetic — Arb 2.17.0-git documentation