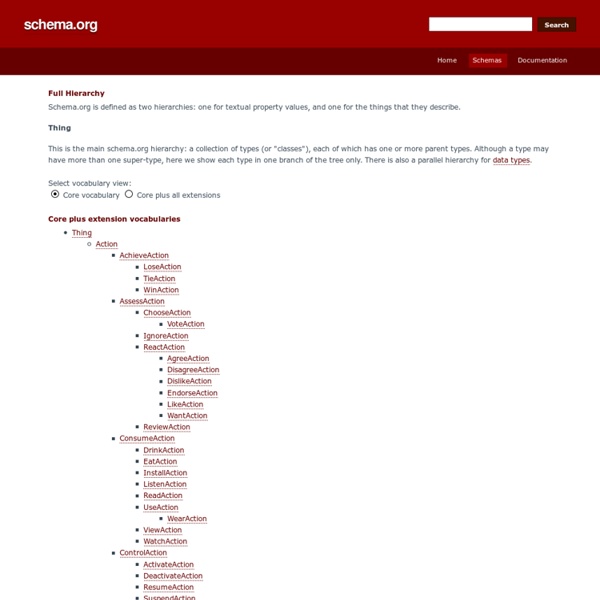

W3C | Linked Data: Current Status

What is Linked Data? The Semantic Web is a Web of Data: of dates and titles and part numbers and chemical properties and any other data one might conceive of. The collection of Semantic Web technologies (RDF, OWL, SKOS, SPARQL, etc.) provides an environment where applications can query that data, draw inferences using vocabularies, and so on. However, to make the Web of Data a reality, the huge amount of data on the Web must be available in a standard format, reachable and manageable by Semantic Web tools. Furthermore, the Semantic Web needs access not only to data but also to the relationships among data, so that the result is a Web of Data rather than a mere collection of datasets. Creating Linked Data therefore requires technologies for a common format (RDF) and for either converting existing databases (relational, XML, HTML, etc.) or accessing them on the fly.
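To make "relationships among data" concrete, here is a minimal sketch. It uses a toy in-memory list of RDF-style triples in plain Python rather than an RDF library or SPARQL endpoint. The two datasets, their IRIs under the reserved `.example` domain, and the helpers `match` and `describe` are hypothetical. `owl:sameAs` and `rdfs:label` are the real OWL and RDFS properties. A single `owl:sameAs` link lets a query about one dataset's resource pick up facts published in the other.

```python
from typing import Iterator, NamedTuple, Optional

OWL_SAME_AS = "http://www.w3.org/2002/07/owl#sameAs"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


# Two hypothetical datasets published independently.
dataset_a = [
    Triple("http://a.example/city/Berlin", RDFS_LABEL, "Berlin"),
]
dataset_b = [
    Triple("http://b.example/place/berlin", "http://b.example/vocab/country",
           "http://b.example/place/germany"),
    # The link that turns two datasets into a Web of Data:
    # both IRIs are declared to identify the same thing.
    Triple("http://b.example/place/berlin", OWL_SAME_AS, "http://a.example/city/Berlin"),
]


def match(graph: list[Triple], s: Optional[str] = None, p: Optional[str] = None,
          o: Optional[str] = None) -> Iterator[Triple]:
    """Yield triples matching a pattern; None acts as a wildcard, like a SPARQL variable."""
    for t in graph:
        if (s is None or t.subject == s) and (p is None or t.predicate == p) \
                and (o is None or t.obj == o):
            yield t


def describe(graph: list[Triple], iri: str) -> set[tuple[str, str]]:
    """Collect (predicate, object) pairs for iri and for IRIs one owl:sameAs hop away."""
    aliases = {iri}
    aliases |= {t.obj for t in match(graph, s=iri, p=OWL_SAME_AS)}
    aliases |= {t.subject for t in match(graph, p=OWL_SAME_AS, o=iri)}
    return {
        (t.predicate, t.obj)
        for alias in aliases
        for t in match(graph, s=alias)
        if t.predicate != OWL_SAME_AS
    }


merged = dataset_a + dataset_b
for predicate, value in sorted(describe(merged, "http://a.example/city/Berlin")):
    print(predicate, value)
```

The sketch follows only one `owl:sameAs` hop and does no inference. A real deployment would load the data into an RDF store and express the same lookup as a SPARQL query.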

Schema Markup - Rich Snippets Plugin For WordPress. Free & Easy

Breadcrumbs: The breadcrumb trail indicates the page's position in the site hierarchy. Users can access the page directly or navigate up the hierarchy one level at a time.

Events: These rich snippets cover events organized in a locality. They display details such as the date, the event name, and the venue.

Organizations: These rich snippets can display an organization's or company's contact details. They sometimes appear in the Knowledge panel.

Products: Product rich snippets display product details such as price, availability, reviews, and ratings.

Recipes: Recipe rich snippets can include information such as preparation and cooking time, nutritional values, and reviews and ratings.

Reviews and Ratings: The review and rating section is one of the most important elements of a rich snippet. In the example, the highlighted area shows that the recipe received a rating of 4.7 out of 5, based on 36 votes.
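Rich snippets are generated from structured data embedded in the page. Here is a minimal sketch, assuming JSON-LD with the schema.org vocabulary. This snippet does not say which format the plugin itself emits; it could be microdata instead. The sketch builds a `Recipe` with an `AggregateRating` that matches the 4.7-out-of-5, 36-vote example. The helper `recipe_jsonld`, the recipe name and the durations are hypothetical. `Recipe`, `prepTime`, `cookTime`, `AggregateRating`, `ratingValue`, `bestRating` and `ratingCount` are real schema.org terms.

```python
import json


def recipe_jsonld(name: str, prep_time: str, cook_time: str,
                  rating_value: float, rating_count: int) -> str:
    """Build a schema.org Recipe with an AggregateRating, serialized as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "prepTime": prep_time,  # ISO 8601 duration, e.g. "PT10M" = 10 minutes
        "cookTime": cook_time,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "bestRating": 5,
            "ratingCount": rating_count,
        },
    }
    return json.dumps(data, indent=2)


# Hypothetical recipe; the rating figures mirror the example above.
snippet = recipe_jsonld("Example Pancakes", "PT10M", "PT15M", 4.7, 36)
html = f'<script type="application/ld+json">\n{snippet}\n</script>'
print(html)
```

The resulting `<script>` element would go in the page's HTML. A search engine may then show the rating, though it is not obliged to.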

W3C | Semantic Web Case Studies

Case studies describe systems that have been deployed within an organization and are now used in a production environment. Use cases describe examples where an organization has built a prototype system that is not currently used by business functions. The list is updated regularly as new entries are submitted to W3C, and an RSS 1.0 feed is available for tracking new submissions. If you are interested in submitting a new use case or case study for this list, please consult the separate submission page. A short overview of the use cases and case studies is available as a slide presentation in OpenDocument and PDF formats.

Majestic®: Marketing Search Engine and SEO Backlink Checker

SPARQL 1.1 Protocol, 4.1 Security: There are at least two possible sources of denial-of-service attacks against SPARQL protocol services. First, under-constrained queries can produce very large numbers of results, which may require large expenditures of computing resources to process, assemble, or return. Second, queries may contain very complex RDF Dataset descriptions, complex because of resource size, the number of resources to be retrieved, or both. The service may be unable to assemble these without significant expenditure of resources, including bandwidth, CPU, or secondary storage. Since a SPARQL protocol service may make HTTP requests of other origin servers on behalf of its clients, it may be used as a vector of attacks against other sites or services. SPARQL protocol services may also remove, insert, and change underlying data via the update operation. Implementations may therefore choose not to implement the update operation, or may protect it with HTTP authentication or other implementation-defined mechanisms. In addition, different IRIs may have the same appearance. For example, a Cyrillic "о" can look identical to a Latin "o".
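The lookalike-IRI risk can be checked mechanically. RDF treats two IRIs as equal only when their characters match exactly, so a visually identical IRI is a different resource. Here is a minimal sketch of a heuristic check using only Python's standard `unicodedata` module. The check is not something the SPARQL specification prescribes. The helper names `scripts_in` and `non_ascii_chars` are hypothetical. The "script" is approximated by the first word of each letter's Unicode name, such as `LATIN` or `CYRILLIC`.

```python
import unicodedata


def scripts_in(iri: str) -> set[str]:
    """Heuristically name the scripts used by the letters in an IRI.

    Approximation: the first word of each letter's Unicode name
    (e.g. "LATIN SMALL LETTER B" -> "LATIN").
    """
    return {unicodedata.name(ch, "UNKNOWN").split()[0] for ch in iri if ch.isalpha()}


def non_ascii_chars(iri: str) -> list[tuple[int, str, str]]:
    """Return (index, character, Unicode name) for each non-ASCII character."""
    return [(i, ch, unicodedata.name(ch, "UNKNOWN"))
            for i, ch in enumerate(iri) if ord(ch) > 127]


latin = "http://example.org/bob"
lookalike = "http://example.org/b\u043eb"  # U+043E CYRILLIC SMALL LETTER O

print(latin == lookalike)              # False: the code points differ
print(sorted(scripts_in(lookalike)))   # ['CYRILLIC', 'LATIN']
print(non_ascii_chars(lookalike))
```

Mixed scripts are not proof of an attack. An IRI can legitimately combine a Latin host with a path in another script. A service would more plausibly flag such IRIs for review or display them with the non-ASCII characters highlighted, rather than reject them outright.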

SEO audit of your pages - Alyze

LOD2 | Interlinked Data

seobserver.com | Website analysis for seobserver.com | WooRank.com

Why does it matter? Not every page on your site needs to be indexed and shown in search results. Examples include login pages and pages with duplicate content. A robots.txt file can be used to stop search engines from crawling certain pages so that they stay out of search results. However, a blocked URL can still end up indexed if other pages link to it. This text file is easy to create, edit, and place on your server, but some care is needed to avoid mistakes. Note also that malware and spam bots completely ignore the instructions in robots.txt, and because the file is public, anyone can see your restrictions.

What to do: Place the robots.txt file at the root of the site, like this: MonSiteWeb.com/robots.txt
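Before deploying a robots.txt file, it helps to check how a compliant crawler will read it. Here is a minimal sketch using Python's standard `urllib.robotparser` module. The rules and paths (`/login` and `/print/`, standing in for login pages and duplicate printable pages) are hypothetical. `MonSiteWeb.com` is the example host from the text above. Only well-behaved crawlers perform this check; as noted above, malware and spam bots ignore it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep all crawlers away from login and duplicate print pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /login
Disallow: /print/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse rules from memory instead of fetching a URL

for url in ("https://MonSiteWeb.com/login",
            "https://MonSiteWeb.com/print/recipe-1",
            "https://MonSiteWeb.com/about"):
    print(url, parser.can_fetch("*", url))
```

`Disallow` rules match by path prefix, so `/login` would also block a hypothetical `/login-help` page. This is one of the mistakes worth testing for before uploading the file to the site root.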

OntoWiki: Agile Knowledge Engineering and Semantic Web

CubeViz: Exploration and Visualization of Statistical Linked Data

Facilitating the Exploration and Visualization of Linked Data

Supporting the Linked Data Life Cycle Using an Integrated Tool Stack

Increasing the Financial Transparency of European Commission Project Funding

Managing Multimodal and Multilingual Semantic Content

Improving the Performance of Semantic Web Applications with SPARQL Query Caching