Introduction to RSS (Rich Site Summary) Recently, people have become far more cautious about data privacy. With high-profile data leaks, phishing, and spam all around, few people want to hand out personal information freely for fear of becoming the next target. That makes keeping up with favorite content across the vast World Wide Web a daunting task. It may be time to take the wheel. What is RSS? RSS stands for Rich Site Summary or Really Simple Syndication. To set up RSS for a website, you create an XML file known as the RSS document or RSS feed. Below is a sample RSS document. Explanation of the code: first comes the XML declaration, with its version and encoding scheme. The following line opens the rss tag and states the RSS version in use. The next few lines show the channel tag, which marks the beginning of the RSS feed. Once the XML is ready and validated, it is uploaded to the server.

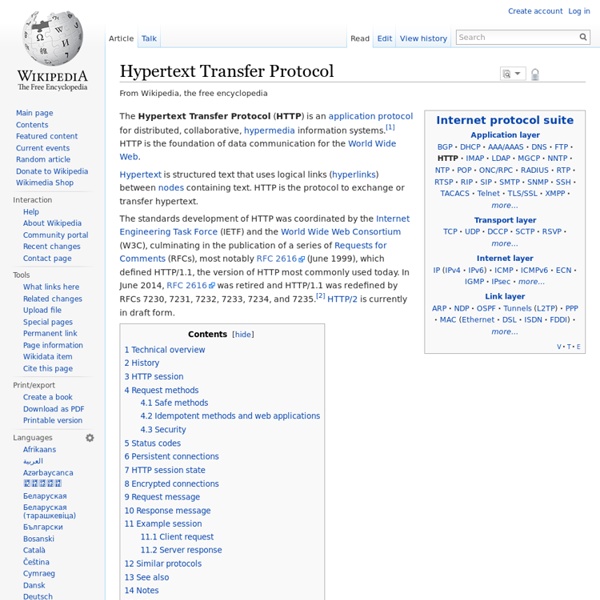
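To make the structure described above concrete, here is a minimal Python sketch that generates an RSS 2.0 document with the standard library's `xml.etree.ElementTree`. It follows the same order as the explanation: the XML declaration, the rss element with its version, then the channel with its title, link, and description, followed by items. The function name `build_rss`, the tuple format for items, and the example URLs are hypothetical choices for illustration, not part of any RSS tooling.

```python
import xml.etree.ElementTree as ET


# Hypothetical helper for illustration; not a standard RSS API.
def build_rss(title, link, description, items):
    """Return an RSS 2.0 document as a string.

    `items` is assumed to be a list of (title, link, description) tuples.
    """
    rss = ET.Element("rss", version="2.0")      # root element with the RSS version
    channel = ET.SubElement(rss, "channel")     # the channel holds the whole feed
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for item_title, item_link, item_desc in items:
        item = ET.SubElement(channel, "item")   # one entry in the feed
        ET.SubElement(item, "title").text = item_title
        ET.SubElement(item, "link").text = item_link
        ET.SubElement(item, "description").text = item_desc
    body = ET.tostring(rss, encoding="unicode")  # text is XML-escaped automatically
    # Prepend the XML declaration: version and encoding scheme.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_rss("Example Feed", "https://example.com/", "A hypothetical feed",
                    [("First post", "https://example.com/first", "Hello")]))
```

ElementTree escapes characters such as `&` and `<` in text, which helps the output pass validation before it is uploaded to the server.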

Transmission Control Protocol Web browsers use TCP when they connect to servers on the World Wide Web, and TCP is also used to deliver email and transfer files from one location to another. HTTP, HTTPS, SMTP, POP3, IMAP, SSH, FTP, Telnet, and a variety of other protocols are typically encapsulated in TCP. In May 1974 the Institute of Electrical and Electronics Engineers (IEEE) published a paper titled "A Protocol for Packet Network Intercommunication."[1] The paper's authors, Vint Cerf and Bob Kahn, described an internetworking protocol for sharing resources using packet switching among network nodes. TCP corresponds to the transport layer of the TCP/IP suite. It is used extensively by many of the Internet's most popular applications, including the World Wide Web (WWW), email, File Transfer Protocol, Secure Shell, peer-to-peer file sharing, and some streaming media applications. A TCP segment consists of a segment header and a data section.
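The split between segment header and data section can be illustrated with a short Python sketch that unpacks the fixed 20-byte TCP header using the `struct` module and uses the data offset field to find where the data section begins. The function name `parse_tcp_segment` and the dictionary it returns are hypothetical; the sketch does not verify the checksum, which would also require the IP pseudo-header.

```python
import struct

# Fixed part of the TCP header: 20 bytes in network (big-endian) byte order:
# ports, sequence and acknowledgment numbers, offset byte, flag byte,
# window, checksum, urgent pointer.
_TCP_HEADER = struct.Struct("!HHIIBBHHH")

# Flag bits of header byte 13, lowest bit first.
FLAG_NAMES = ["FIN", "SYN", "RST", "PSH", "ACK", "URG", "ECE", "CWR"]


# Hypothetical helper for illustration; checksum is read but not verified.
def parse_tcp_segment(segment: bytes) -> dict:
    """Split a raw TCP segment into header fields and the data section."""
    if len(segment) < _TCP_HEADER.size:
        raise ValueError("segment shorter than the 20-byte minimum header")
    (src_port, dst_port, seq, ack, offset_byte, flag_byte,
     window, checksum, urgent) = _TCP_HEADER.unpack_from(segment)
    header_len = (offset_byte >> 4) * 4   # data offset counts 32-bit words
    if header_len < 20 or header_len > len(segment):
        raise ValueError("invalid data offset")
    flags = [name for bit, name in enumerate(FLAG_NAMES) if flag_byte & (1 << bit)]
    return {
        "src_port": src_port,
        "dst_port": dst_port,
        "seq": seq,
        "ack": ack,
        "header_len": header_len,
        "flags": flags,
        "window": window,
        "checksum": checksum,
        "urgent": urgent,
        "options": segment[20:header_len],  # any TCP options follow the fixed header
        "data": segment[header_len:],       # the data section
    }
```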

Evernote - Notes Organizer on the App Store Capture ideas when inspiration strikes. Bring your notes, to-dos, and schedule together to tame life’s distractions and accomplish more at work, at home, and everywhere in between. Evernote syncs to all your devices, so you can stay productive on the go. “Use Evernote as the place you put everything… Don’t ask yourself which device it’s on—it’s in Evernote” – The New York Times “When it comes to taking all manner of notes and getting work done, Evernote is an indispensable tool.” – PC Mag • Write, collect, and capture ideas as searchable notes, notebooks, and to-do lists. • Clip interesting articles and web pages to read or use later. • Add different types of content to your notes: text, docs, PDFs, sketches, photos, audio, web clippings, and more. • Use your camera to scan and organize paper documents, business cards, whiteboards, and handwritten notes. • Manage your to-do list with Tasks: set due dates and reminders, so you never miss a deadline.

Application layer Although the OSI model and the Internet protocol suite (TCP/IP) use the same term for their respective highest-level layer, the detailed definitions and purposes are different. In the OSI model, the definition of the application layer is narrower in scope. The OSI model defines the application layer as the user interface responsible for displaying received information to the user. The IETF definition document for the application layer in the Internet protocol suite is RFC 1123. Application-layer protocols in the suite include: remote login to hosts: Telnet; file transfer: File Transfer Protocol (FTP), Trivial File Transfer Protocol (TFTP); electronic mail transport: Simple Mail Transfer Protocol (SMTP); networking support: Domain Name System (DNS); host initialization: BOOTP; remote host management: Simple Network Management Protocol (SNMP), Common Management Information Protocol over TCP (CMOT).
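As an example of what an application-layer protocol actually puts on the wire, here is a minimal Python sketch that builds a DNS query message in the RFC 1035 format: a 12-byte header followed by one question made of length-prefixed name labels, a query type, and a query class. The function names, the fixed transaction ID `0x1234`, and the choice of an A-record query are assumptions for illustration; the sketch only builds the bytes and does not send them.

```python
import struct


# Hypothetical helper for illustration.
def encode_qname(name: str) -> bytes:
    """Encode a domain name as length-prefixed labels ending in a zero byte."""
    out = bytearray()
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) <= 63:
            raise ValueError(f"bad label: {label!r}")
        out.append(len(raw))   # one length byte per label
        out += raw
    out.append(0)              # the empty root label terminates the name
    return bytes(out)


# Hypothetical helper; txid is an arbitrary example transaction ID.
def build_dns_query(name: str, qtype: int = 1, txid: int = 0x1234) -> bytes:
    """Build a standard DNS query asking one question.

    qtype 1 requests an A (IPv4 address) record; QCLASS 1 is IN (Internet).
    """
    flags = 0x0100  # standard query with the Recursion Desired bit set
    # ID, flags, QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=0
    header = struct.pack("!HHHHHH", txid, flags, 1, 0, 0, 0)
    question = encode_qname(name) + struct.pack("!HH", qtype, 1)
    return header + question
```

A message like this would typically be carried over UDP to port 53 of a DNS server; the transport step is left out here so the sketch stays self-contained.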

Create interactive presentations with Genially, free and online | Genially Interactive slides contain clickable hotspots, links, buttons, and animations that are activated at the touch of a button. Instead of reading or watching passively, your audience can actively interact with the content. Genially’s interactive presentation software allows you to combine text, photos, video clips, audio, and other content in one deck. If you’re a teacher, you can share multiple materials in one single learning resource. An interactive slide deck is more user-friendly than a Microsoft PowerPoint presentation or Google Slides document. Another benefit of interactive content is increased engagement.

Transport layer Transport layer implementations are contained in both the TCP/IP model (RFC 1122),[2] which is the foundation of the Internet, and the Open Systems Interconnection (OSI) model of general networking; however, the details of the transport layer are defined differently in the two models. In the OSI model, the transport layer is most often referred to as Layer 4. The best-known transport protocol is the Transmission Control Protocol (TCP). Transport layer services are conveyed to an application via a programming interface to the transport layer protocols. The transport layer is responsible for delivering data to the appropriate application process on the host computers. Some transport layer protocols, for example TCP but not UDP, support virtual circuits, i.e., they provide connection-oriented communication over an underlying packet-oriented datagram network. TCP is used for many protocols, including HTTP web browsing and email transfer.
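The programming interface and the delivery to an application process can be seen in a small Python sketch using the standard `socket` module (Python 3.8 or later for `socket.create_server`). A server bound to a port on the loopback address echoes back whatever a client sends over a TCP connection; the port number is what lets the transport layer hand the data to the right process, and the connection setup is TCP's virtual circuit. The function names `echo_once` and `round_trip` are hypothetical, and the sketch assumes loopback networking is available.

```python
import socket
import threading


# Hypothetical helper for illustration.
def echo_once(server: socket.socket) -> None:
    """Accept one TCP connection and echo back everything until EOF."""
    conn, _addr = server.accept()
    with conn:
        while True:
            chunk = conn.recv(1024)
            if not chunk:          # peer has closed its sending side
                break
            conn.sendall(chunk)


# Hypothetical helper for illustration.
def round_trip(message: bytes) -> bytes:
    # Port 0 asks the OS for any free port; incoming data addressed to that
    # port is delivered by the transport layer to this listening socket.
    with socket.create_server(("127.0.0.1", 0)) as server:
        port = server.getsockname()[1]
        worker = threading.Thread(target=echo_once, args=(server,))
        worker.start()
        # create_connection performs the TCP handshake that sets up the circuit.
        with socket.create_connection(("127.0.0.1", port)) as client:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)   # signal that we are done sending
            received = bytearray()
            while True:
                chunk = client.recv(1024)
                if not chunk:
                    break
                received += chunk
        worker.join()
    return bytes(received)


if __name__ == "__main__":
    print(round_trip(b"hello transport layer"))
```

With UDP, the same delivery by port would happen, but without a connection: each datagram would be sent on its own with `sendto`.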

Prezi Online presentation design platform Prezi is a presentation software company founded in 2009 in Budapest.[1] Prezi provides AI-powered tools that enable users to create presentations. As of 2025, it has more than 160 million users worldwide,[2] who have created approximately 400 million presentations.[3][1][4] In 2019, it launched Prezi Video, a tool that allows for virtual presentations within the video screen of a live or recorded video.[5] In 2024, Prezi introduced "Prezi AI", an AI-powered presentation tool.[6][7] In early 2011, Prezi launched its first iPad application. In March 2014, Prezi pledged $100 million in free licenses to Title I schools as part of the Obama administration's ConnectED program.[13] In November of that year, it announced $57 million in new funding from Spectrum Equity and Accel Partners.[14] In April 2017, Prezi Next, a new HTML5-based product, was released.[18] In May 2017, Prezi acquired Infogram, a data visualization company based in Latvia.[19]

Internet layer Internet-layer protocols use IP-based packets. The internet layer does not include the protocols that define communication between local (on-link) network nodes, such as those that maintain link states and the local network topology; those protocols usually rely on packet framing specific to the link type and belong to the link layer. A common design aspect in the internet layer is the robustness principle: "Be liberal in what you accept, and conservative in what you send,"[1] because a misbehaving host can deny Internet service to many other users. The internet layer has three basic functions: for outgoing packets, selecting the next-hop host (gateway) and passing the packet to the appropriate link-layer implementation for transmission; for incoming packets, capturing them and passing the payload up to the appropriate transport-layer protocol; and providing error detection and diagnostic capability. In Version 4 of the Internet Protocol (IPv4), during both transmit and receive operations, IP is capable of automatic or intentional fragmentation or defragmentation of packets, based, for example, on the maximum transmission unit (MTU) of link elements.
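The arithmetic behind MTU-based fragmentation in IPv4 fits in a few lines. Every fragment except the last must carry a multiple of 8 data bytes, because the Fragment Offset header field counts 8-byte units, and each fragment repeats the IP header. The Python sketch below assumes a 20-byte header with no options and a datagram whose Don't Fragment flag is clear; the function name `fragment` and its return format are hypothetical.

```python
# Hypothetical helper for illustration; assumes a 20-byte header (no options)
# and that the Don't Fragment flag is not set.
def fragment(total_length: int, mtu: int, header_len: int = 20):
    """Plan IPv4 fragments for a datagram of `total_length` bytes (header included).

    Returns a list of (data_bytes, offset_in_8_byte_units, more_fragments).
    """
    payload = total_length - header_len
    # Largest data size that fits the MTU and is a multiple of 8 bytes.
    max_data = (mtu - header_len) // 8 * 8
    if max_data <= 0:
        raise ValueError("MTU too small for the header")
    fragments = []
    offset = 0
    while offset < payload:
        size = min(max_data, payload - offset)
        more = offset + size < payload      # MF flag is set on all but the last
        fragments.append((size, offset // 8, more))
        offset += size
    return fragments


if __name__ == "__main__":
    # A 4000-byte datagram crossing a link with a 1500-byte MTU.
    for frag in fragment(4000, 1500):
        print(frag)
```

For the example in the `__main__` block, the 3980 data bytes split into fragments of 1480, 1480, and 1020 bytes at offsets 0, 185, and 370; the destination host reassembles them using those offsets.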

Faison Cemetery Faison Cemetery is a historic cemetery located at Faison, Duplin County, North Carolina. It was added to the National Register of Historic Places in 2006.[1]

Point-to-point protocol PPP is used over many types of physical networks, including serial cable, phone line, trunk line, cellular telephone, specialized radio links, and fiber optic links such as SONET. PPP is also used over Internet access connections. Internet service providers (ISPs) have used PPP for customer dial-up access to the Internet, since IP packets cannot be transmitted over a modem line on their own without some data link protocol. PPP was designed somewhat after the original HDLC specifications. RFC 2516 describes Point-to-Point Protocol over Ethernet (PPPoE) as a method for transmitting PPP over Ethernet that is sometimes used with DSL. PPP is specified in RFC 1661. It is a layered protocol that has three components: an encapsulation component for transmitting datagrams over the physical layer, a Link Control Protocol (LCP) for establishing, configuring, and testing the link, and one or more Network Control Protocols (NCPs) for configuring network-layer protocols. LCP initiates and terminates connections gracefully, allowing hosts to negotiate connection options. After the link has been established, additional network (layer 3) configuration may take place.
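PPP's HDLC heritage shows up in how frames are delimited on asynchronous links (described in RFC 1662, "PPP in HDLC-like Framing"): each frame is bracketed by the flag byte 0x7E, and any 0x7E or 0x7D byte inside the frame is replaced by the escape byte 0x7D followed by the original byte XOR 0x20. The Python sketch below shows only that byte-stuffing step. It is simplified under stated assumptions: it escapes just 0x7E and 0x7D, as if the async control character map had been negotiated to zero (by default, control bytes 0x00 to 0x1F are escaped too), and it omits the address, control, protocol, and frame check sequence fields. The names `stuff` and `unstuff` are hypothetical.

```python
FLAG = 0x7E      # frame delimiter
ESCAPE = 0x7D    # control escape byte
XOR_MASK = 0x20  # escaped bytes are transmitted XOR-ed with 0x20


# Hypothetical helper; simplified stuffing that escapes only FLAG and ESCAPE.
def stuff(payload: bytes) -> bytes:
    """Wrap `payload` in flag bytes, escaping flag or escape bytes inside it."""
    out = bytearray([FLAG])
    for b in payload:
        if b in (FLAG, ESCAPE):
            out += bytes([ESCAPE, b ^ XOR_MASK])
        else:
            out.append(b)
    out.append(FLAG)
    return bytes(out)


# Hypothetical helper; reverses stuff().
def unstuff(frame: bytes) -> bytes:
    """Strip the flag bytes and undo the escaping."""
    if len(frame) < 2 or frame[0] != FLAG or frame[-1] != FLAG:
        raise ValueError("frame must start and end with 0x7E")
    out = bytearray()
    escaped = False
    for b in frame[1:-1]:
        if escaped:
            out.append(b ^ XOR_MASK)   # restore the original byte
            escaped = False
        elif b == ESCAPE:
            escaped = True             # next byte was XOR-ed on transmit
        else:
            out.append(b)
    if escaped:
        raise ValueError("frame ends in the middle of an escape sequence")
    return bytes(out)
```

Because a 0x7E byte can never appear inside a stuffed frame, a receiver can always resynchronize on the next flag after a transmission error.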

Meet Securely connect, collaborate, and celebrate from anywhere. With Google Meet, everyone can safely create and join high-quality video meetings for groups of up to 250 people. • Meet safely: video meetings are encrypted in transit, and our array of safety measures is continuously updated for added protection. • Host large meetings: invite up to 250 participants to a meeting, whether they’re on the same team or outside your organization. • Engage in meetings: participate without interrupting through Q&A, polls, and hand raise. • Easy access on any device: share a link and invite team members to join your conversations with one click from a web browser or the Google Meet mobile app. • Share your screen: present documents, slides, and more during your conference call. • Follow along: live, real-time captions powered by Google speech-to-text technology. *Tile view for Android tablets coming soon. **Not all features available for non-paying users. *Not available in all Workspace plans.

Internet Protocol The Internet Protocol (IP) is the principal communications protocol in the Internet protocol suite for relaying datagrams across network boundaries. Its routing function enables internetworking and essentially establishes the Internet. Historically, IP was the connectionless datagram service in the original Transmission Control Program introduced by Vint Cerf and Bob Kahn in 1974; the other component was the connection-oriented Transmission Control Protocol (TCP). The first major version of IP, Internet Protocol Version 4 (IPv4), is the dominant protocol of the Internet. The Internet Protocol is responsible for addressing hosts and for routing datagrams (packets) from a source host to a destination host across one or more IP networks. (Figure: sample encapsulation of application data from UDP to a link protocol frame.)
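To show what an IPv4 datagram's header looks like when it is constructed, here is a minimal Python sketch that packs a 20-byte IPv4 header (no options) and fills in the header checksum, computed as the ones' complement of the ones' complement sum of the header's 16-bit words (the Internet checksum). The function names, default TTL of 64, UDP protocol number 17, and the Don't Fragment default are assumptions for illustration; the addresses in the usage line are example private addresses.

```python
import ipaddress
import struct


# Hypothetical helper for illustration.
def ipv4_checksum(header: bytes) -> int:
    """Ones' complement of the ones' complement sum of 16-bit words."""
    if len(header) % 2:
        header += b"\x00"                    # pad odd-length input
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:                       # fold carries back into 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


# Hypothetical helper; protocol 17 is UDP, header has no options.
def build_ipv4_header(src: str, dst: str, payload_len: int,
                      protocol: int = 17, ttl: int = 64, ident: int = 0,
                      dont_fragment: bool = True) -> bytes:
    """Build a 20-byte IPv4 header with a valid header checksum."""
    version_ihl = (4 << 4) | 5               # version 4, IHL = 5 x 32-bit words
    flags_frag = 0x4000 if dont_fragment else 0
    header = struct.pack(
        "!BBHHHBBH4s4s",
        version_ihl, 0, 20 + payload_len,    # type of service, total length
        ident, flags_frag,
        ttl, protocol, 0,                    # checksum field is zero while summing
        ipaddress.IPv4Address(src).packed,
        ipaddress.IPv4Address(dst).packed,
    )
    checksum = ipv4_checksum(header)
    return header[:10] + struct.pack("!H", checksum) + header[12:]


if __name__ == "__main__":
    print(build_ipv4_header("192.168.0.1", "192.168.0.199", payload_len=95).hex())
```

A receiving host can validate the header by running the same checksum over all 20 bytes, checksum field included; a correct header sums to zero.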