Artificial intelligence
AI research is highly technical and specialized, and is deeply divided into subfields that often fail to communicate with each other.[5] Some of the division is due to social and cultural factors: subfields have grown up around particular institutions and the work of individual researchers. AI research is also divided by several technical issues. Some subfields focus on the solution of specific problems. The central problems (or goals) of AI research include reasoning, knowledge, planning, learning, natural language processing (communication), perception and the ability to move and manipulate objects.[6] General intelligence is still among the field's long-term goals.[7] Currently popular approaches include statistical methods, computational intelligence and traditional symbolic AI. The field was founded on the claim that a central property of humans, intelligence (the sapience of Homo sapiens), "can be so precisely described that a machine can be made to simulate it."

The Magical Number Seven, Plus or Minus Two
"The Magical Number Seven, Plus or Minus Two: Some Limits on Our Capacity for Processing Information"[1] is one of the most highly cited papers in psychology.[2][3][4] It was published in 1956 in Psychological Review by the cognitive psychologist George A. Miller, then of Harvard University's Department of Psychology. It is often interpreted to argue that the number of objects an average human can hold in working memory is 7 ± 2. This is frequently referred to as Miller's Law. In his article, Miller discussed a coincidence between the limits of one-dimensional absolute judgment and the limits of short-term memory. He recognized that the correspondence was only a coincidence, because only the first limit, not the second, can be characterized in information-theoretic terms (i.e., as a roughly constant number of bits).

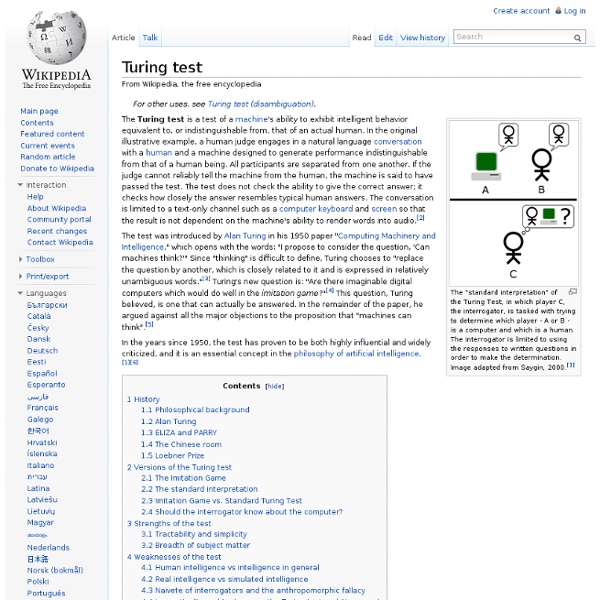

Loebner Prize
The Loebner Prize is an annual competition in artificial intelligence that awards prizes to the chatterbot considered by the judges to be the most human-like. The format of the competition is that of a standard Turing test. In each round, a human judge simultaneously holds textual conversations with a computer program and a human being via computer. Based upon the responses, the judge must decide which is which. The contest was launched in 1990 by Hugh Loebner in conjunction with the Cambridge Center for Behavioral Studies, Massachusetts, United States. Within the field of artificial intelligence, the Loebner Prize is somewhat controversial; the most prominent critic, Marvin Minsky, has called it a publicity stunt that does not help the field along.[1] In addition, the time limit of 5 minutes and the use of untrained and unsophisticated judges have resulted in some wins that may be due to trickery rather than to plausible intelligence.

Alan Turing - a short biography
This short biography, based on the entry written in 1995 for the Oxford Dictionary of Scientific Biography, gives an overview of Alan Turing's life and work. It can be read as a summary of my book Alan Turing: The Enigma.
1. The Origins of Alan Turing
Alan Mathison Turing was born on 23 June 1912, the second and last child (after his brother John) of Julius Mathison and Ethel Sara Turing. The unusual name of Turing placed him in a distinctive family tree of English gentry, far from rich but determinedly upper-middle-class in the peculiar sense of the English class system. In four inadequate words Alan Turing appears now as the founder of computer science, the originator of the dominant technology of the late twentieth century, but these words were not spoken in his own lifetime, and he may yet be seen in a different light in the future. The name of Turing was best known for the work of Julius' brother H. His work on the morphogenetic theory continued.

Machine vision
Image caption: Early Automatix (now part of Microscan) machine vision system Autovision II from 1983 being demonstrated at a trade show. The camera on the tripod is pointing down at a light table to produce the backlit image shown on screen, which is then subjected to blob extraction.
Machine vision (MV) is the technology and methods used to provide imaging-based automatic inspection and analysis for such applications as automatic inspection, process control, and robot guidance in industry.[1][2] The scope of MV is broad.[2][3][4] MV is related to, though distinct from, computer vision.[2] The primary uses for machine vision are automatic inspection and industrial robot guidance.[5] Common machine vision applications include quality assurance, sorting, material handling, robot guidance, and optical gauging.[4] After an image is acquired, it is processed.[19]
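The blob extraction mentioned in the caption above can be illustrated with a small sketch. It assumes a backlit grayscale image stored as a list of rows, where objects appear dark against a bright background; the function name `extract_blobs`, the threshold of 128, and the sample image are all hypothetical, and this is plain connected-component labeling rather than the method used by any particular machine vision system.

```python
from collections import deque


def extract_blobs(image, threshold=128):
    """Group dark pixels (value < threshold) into 4-connected blobs.

    Returns a list of blob areas (pixel counts) in scan order.
    The threshold value is an assumption chosen for this illustration.
    """
    rows, cols = len(image), len(image[0])
    visited = [[False] * cols for _ in range(rows)]
    areas = []

    for r in range(rows):
        for c in range(cols):
            # Skip bright background pixels and pixels already assigned to a blob.
            if image[r][c] >= threshold or visited[r][c]:
                continue
            # Breadth-first flood fill from this seed pixel.
            visited[r][c] = True
            queue = deque([(r, c)])
            area = 0
            while queue:
                y, x = queue.popleft()
                area += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and not visited[ny][nx] and image[ny][nx] < threshold:
                        visited[ny][nx] = True
                        queue.append((ny, nx))
            areas.append(area)
    return areas


# Hypothetical 4x5 backlit image: two dark objects on a bright light table.
IMAGE = [
    [255, 255, 255, 255, 255],
    [255,  10,  10, 255, 255],
    [255,  10, 255, 255,  20],
    [255, 255, 255, 255,  20],
]

print(extract_blobs(IMAGE))  # [3, 2]
```

In an inspection setting, the blob areas (or other measurements such as bounding boxes) would then be compared against expected values to accept or reject a part.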

Vidéothèque CNRS : Turing Model (The)
Sales and Loans: Registration is required in order to use the shopping cart to buy or borrow films (click on the Registration tab “Sign-In”).
Sales: Films in DVD or VHS PAL video format are sold for private domestic use or institutional use.
Shipping costs (tapes and DVDs):
- France: 2.50 € VAT-free (3.00 € VAT inc.) for the first item + 1.00 € VAT-free (1.20 € VAT inc.) for each additional item
- Europe: 3.33 € VAT-free (4.00 € VAT inc.) for the first item + 1.25 € VAT-free (1.50 € VAT inc.) for each additional item
- All other countries: 5.00 € VAT-free (6.00 € VAT inc.) for the first item + 1.66 € VAT-free (2.00 € VAT inc.) for each additional item
- Shipping via Colissimo or Chronopost: costs will be based on current charges for these services.
Payment may be made by bank transfer (contact us) or by credit card (Carte Bleue, Visa, Mastercard, e-Carte Bleue).
Loans: Films may be lent only for institutional or professional use.

Robotics
Robotics is the branch of mechanical engineering, electrical engineering and computer science that deals with the design, construction, operation, and application of robots,[1] as well as computer systems for their control, sensory feedback, and information processing. These technologies deal with automated machines that can take the place of humans in dangerous environments or manufacturing processes, or resemble humans in appearance, behavior, and/or cognition. Many of today's robots are inspired by nature, contributing to the field of bio-inspired robotics. The concept of creating machines that can operate autonomously dates back to classical times, but research into the functionality and potential uses of robots did not grow substantially until the 20th century.[2] Throughout history, robots have often been designed to mimic human behavior and to manage tasks in a similar fashion.

The highly productive habits of Alan Turing
June 23 marks the 100th birthday of Alan Turing. If I had to name five people whose personal efforts led to the defeat of Nazi Germany, the English mathematician would surely be on my list. Turing's genius played a key role in helping the Allies win the Battle of the Atlantic, a naval blockade against the Third Reich that depended for success on the cracking and re-cracking of Germany's Enigma cipher. But even before this history-changing achievement, Turing laid the groundwork for the world we live in today by positing a "universal computing machine" in 1936. Turing's essential idea, aptly summarized by his centenary biographer Andrew Hodges, was "one machine, for all possible tasks." Sadly, if the saying "no good deed goes unpunished" ever applied to anyone, it applied to Turing. Alan Turing's achievements speak for themselves, but the way he lived his remarkable and tragically shortened life is less known. 1. When Mrs. This sort of literal truth drove Alan's superiors to distraction.

Machine translation
Machine translation, sometimes referred to by the abbreviation MT (not to be confused with computer-aided translation, machine-aided human translation (MAHT) or interactive translation), is a sub-field of computational linguistics that investigates the use of software to translate text or speech from one natural language to another. On a basic level, MT performs simple substitution of words in one natural language for words in another, but that alone usually cannot produce a good translation of a text because recognition of whole phrases and their closest counterparts in the target language is needed. Solving this problem with corpus and statistical techniques is a rapidly growing field that is leading to better translations, handling differences in linguistic typology, translation of idioms, and the isolation of anomalies.[1] The progress and potential of machine translation have been much debated throughout its history.
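The limitation of word-for-word substitution described above can be seen in a small sketch. The English-to-French lexicon, the phrase table, and the function names below are hypothetical and made up for this illustration; real MT systems are far more elaborate, and this is not a description of any particular one.

```python
# Hypothetical toy lexicon and phrase table (assumptions for illustration only).
LEXICON = {"the": "le", "black": "noir", "cat": "chat", "sleeps": "dort"}
PHRASES = {("black", "cat"): "chat noir"}


def substitute_words(sentence, lexicon):
    """Translate by replacing each word independently; unknown words pass through."""
    return " ".join(lexicon.get(word, word) for word in sentence.lower().split())


def substitute_phrases(sentence, phrases, lexicon, max_len=3):
    """Prefer the longest known phrase at each position, else fall back to single words."""
    words = sentence.lower().split()
    output = []
    i = 0
    while i < len(words):
        # Try phrases of decreasing length (at least two words) starting at position i.
        for n in range(min(max_len, len(words) - i), 1, -1):
            key = tuple(words[i:i + n])
            if key in phrases:
                output.append(phrases[key])
                i += n
                break
        else:
            # No phrase matched here: translate the single word.
            output.append(lexicon.get(words[i], words[i]))
            i += 1
    return " ".join(output)


print(substitute_words("The black cat sleeps", LEXICON))             # le noir chat dort
print(substitute_phrases("The black cat sleeps", PHRASES, LEXICON))  # le chat noir dort
```

The word-by-word version keeps English word order and produces "le noir chat dort", whereas recognizing "black cat" as a unit yields the correct order "le chat noir dort". Statistical approaches learn such phrase correspondences from corpora instead of relying on a hand-written table.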

How Alan Turing Cracked The Enigma Code | Imperial War Museums
Turingery and Delilah: In July 1942, Turing developed a complex code-breaking technique he named ‘Turingery’. This method fed into work by others at Bletchley in understanding the ‘Lorenz’ cipher machine. Lorenz enciphered German strategic messages of high importance: the ability of Bletchley to read these contributed greatly to the Allied war effort. Turing travelled to the United States in December 1942, to advise US military intelligence in the use of Bombe machines and to share his knowledge of Enigma.
The Universal Turing Machine: In 1936, Turing had invented a hypothetical computing device that came to be known as the ‘universal Turing machine’.
Legacy: In 1952, Alan Turing was arrested for homosexuality, which was then illegal in Britain. The legacy of Alan Turing’s life and work did not fully come to light until long after his death.