Impdp logtime=all metrics=y and 12cR2 parallel metadata. Importing data with Data Pump without exporting it. NOLOGGING: What it is (and what it is not). Whenever an insert or bulk load operation is performed on a database, redo and undo blocks are also created.

With the NOLOGGING option, the corresponding operation writes fewer redo blocks. When are NOLOGGING operations useful? To improve the performance of large insert operations; to improve the build times of large indexes; to improve the build times of large tables (CTAS); and to reduce the amount of redo I/O and, as a result, the number of archive log files. What operations can use NOLOGGING? Create Table As Select (CTAS); ALTER TABLE operations, including statements that add/move/merge/split partitions; INSERT /*+APPEND*/; CREATE INDEX; ALTER INDEX statements (add/move/merge/split partitions). Examples of NOLOGGING operations: NOLOGGING is either an object attribute of a partition, table, LOB storage, or index, or a clause specified on the ALTER or CREATE command itself.
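To make the list above concrete, here is a minimal sketch that writes a small SQL*Plus script with one statement per NOLOGGING use case. The object names (sales, sales_copy, sale_id, sales_copy_ix) and the file name are hypothetical, and the shell script only generates the file; it does not connect to a database.

```shell
#!/bin/sh
# Write a small SQL*Plus script illustrating NOLOGGING; nothing is executed here.
# All object names (sales, sales_copy, sale_id, sales_copy_ix) are hypothetical.
set -eu
SQL_FILE=${SQL_FILE:-nologging_demo.sql}

cat > "$SQL_FILE" <<'SQL'
-- CTAS with NOLOGGING: the direct-path load generates minimal redo
CREATE TABLE sales_copy NOLOGGING AS SELECT * FROM sales;

-- NOLOGGING as an object attribute set by ALTER
ALTER TABLE sales_copy NOLOGGING;

-- Direct-path insert; a conventional INSERT is fully logged regardless
INSERT /*+ APPEND */ INTO sales_copy SELECT * FROM sales;
COMMIT;

-- Index build and rebuild with NOLOGGING
CREATE INDEX sales_copy_ix ON sales_copy (sale_id) NOLOGGING;
ALTER INDEX sales_copy_ix REBUILD NOLOGGING;
SQL

# Print how the generated script would be run (user and database are placeholders)
echo "Run with: sqlplus user@db @$SQL_FILE"
```

Keep in mind that if the database or tablespace is in FORCE LOGGING mode, the NOLOGGING attribute is ignored and full redo is still written.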

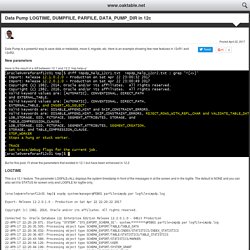

Data Pump LOGTIME, DUMPFILE, PARFILE, DATA_PUMP_DIR in 12c. Data Pump is a powerful way to save data or metadata, move it, migrate, etc.

Here is an example showing a few new features in 12cR1 and 12cR2. New parameters: here is the result of a diff between 12.1 and 12.2 ‘imp help=y’. But for this post, I’ll show the parameters that existed in 12.1 but have been enhanced in 12.2. This is a 12.1 feature. [oracle@vmreforanf12c01 tmp]$ expdp system/manager@PDB01 parfile=impdp.par logfile=impdp.log Export: Release 12.2.0.1.0 - Production on Sat Apr 22 22:20:22 2017 Copyright (c) 1982, 2016, Oracle and/or its affiliates.

You will always appreciate finding timestamps in the log file. You can see that my DUMPFILE also contains the timestamp in the file name. [oracle@vmreforanf12c01 tmp]$ cat impdp.par schemas=SCOTT logtime=all dumpfile=SCOTT_%T. PARFILE parameters: I don’t usually use a PARFILE and prefer to pass all parameters on the command line, even if this requires escaping a lot of quotes, because I like to ship the log file with the DUMPFILE.
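As a rough illustration of the parameters discussed above, the sketch below generates a parameter file that combines LOGTIME=ALL, METRICS=Y and a %T substitution variable in the dump file name, then prints the export command instead of running it. The file names exp_scott.par, scott_%T.dmp and exp_scott.log are made up for this example and are not the ones from the original post.

```shell
#!/bin/sh
# Generate a Data Pump parameter file and print the export command (dry run).
# File names are illustrative assumptions, not taken from the original post.
set -eu
PARFILE=${PARFILE:-exp_scott.par}

# Quoted heredoc delimiter keeps %T literal so Data Pump expands it, not the shell
cat > "$PARFILE" <<'EOF'
schemas=SCOTT
logtime=all
metrics=y
dumpfile=scott_%T.dmp
logfile=exp_scott.log
EOF

# Drop the echo to actually run the export; expdp prompts for the password
echo "expdp system@PDB01 parfile=$PARFILE"
```

With a parameter file, nothing needs shell escaping; the trade-off the author mentions is that the parameters then live in a separate file rather than in the command line recorded alongside the log.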

Databases and Performance: Speeding up Imports. There are a number of techniques you can use to speed up an Oracle Import, some of which I'll describe here.

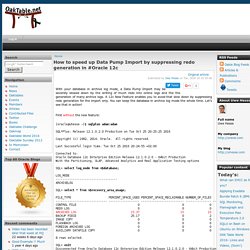

This is not any attempt at a comprehensive list, just some of the main techniques I have used that can really speed up some parts of an import. I've seen a standard import come down from 2.5 hours to about 0.75 hours using these techniques. The first thing to realise is that an exported dump file is a "logical" dump of the database - it contains the SQL statements necessary to recreate all the database structures, and the data records to be loaded into each table. The import works by executing these SQL statements against the target database, thus recreating the contents of the original database that was exported. We can leverage this to our advantage in various ways. How to speed up Data Pump Import by suppressing redo generation in #Oracle 12c.

With your database in archive log mode, a Data Pump Import may be severely slowed down by the writing of much redo into online logs and the generation of many archive logs.

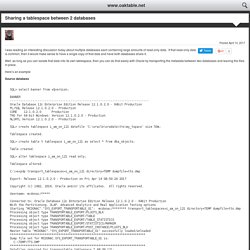
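One way to reduce this redo overhead in 12c, and presumably the feature this excerpt leads up to, is the TRANSFORM=DISABLE_ARCHIVE_LOGGING:Y option of impdp. The sketch below writes a parameter file using it and prints the import command as a dry run; the schema, dump file and log file names are assumptions for illustration only.

```shell
#!/bin/sh
# Build an import parameter file that asks Data Pump to disable logging
# for the objects it loads, then print the command (dry run).
# Schema and file names are hypothetical.
set -eu
PARFILE=${PARFILE:-imp_noredo.par}

cat > "$PARFILE" <<'EOF'
schemas=SCOTT
dumpfile=scott.dmp
logfile=imp_noredo.log
logtime=all
transform=disable_archive_logging:y
EOF

# Drop the echo to actually run the import; impdp prompts for the password
echo "impdp system@PDB01 parfile=$PARFILE"
```

The transform has no effect if the database is in FORCE LOGGING mode, and data loaded this way cannot be recovered from the archive logs, so a backup after the import is advisable.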

A 12c New Feature enables you to avoid that slowdown by suppressing redo generation for the import only. You can keep the database in archive log mode the whole time. Let’s see that in action! Sharing a tablespace between 2 databases. I was reading an interesting discussion today about multiple databases each containing large amounts of read-only data.

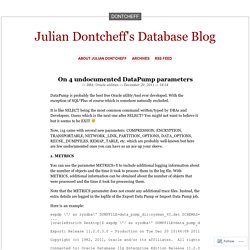

If that read-only data is common, then it would make sense to have a single copy of that data and have both databases share it. Well, as long as you can isolate that data into its own tablespace, then you can do that easily with Oracle by transporting the metadata between two databases and leaving the files in place. Here’s an example. Source database. On 4 undocumented DataPump parameters. DataPump is probably the best free Oracle utility/tool ever developed.

With the exception of SQL*Plus of course, which is somehow naturally excluded. It is like SELECT being the most common command written/typed by DBAs and Developers. Guess which is the next one after SELECT? You might not want to believe it but it seems to be EXIT. Now, 11g came with several new parameters: COMPRESSION, ENCRYPTION, TRANSPORTABLE, NETWORK_LINK, PARTITION_OPTIONS, DATA_OPTIONS, REUSE_DUMPFILES, REMAP_TABLE, etc. which are probably well-known, but here are a few undocumented ones you can have as an ace up your sleeve. Monitoring an Oracle Data Pump Import. Monitor data pump import impdp script. Question: I am running impdp and it looks like the import is hung.

How do I monitor a running data pump import job? I need to monitor the import in real-time and ensure that the import is working and not broken. Answer: Monitoring Oracle imports is tricky, especially after the rows are added and Oracle is busy adding indexes, constraints, and CBO statistics. At this stage, the import looks hung, but it's not stalled, it's working.
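A minimal sketch of one way to check on such a job: the script below writes two monitoring queries to a file and prints the commands that would run them and attach to the job. The job name SYS_IMPORT_FULL_01 and the connect string are placeholders; the real job name appears in the import log and in DBA_DATAPUMP_JOBS.

```shell
#!/bin/sh
# Write monitoring queries for a running Data Pump job and print the
# commands to use them (dry run; nothing connects to a database).
set -eu
SQL_FILE=${SQL_FILE:-dp_monitor.sql}
JOB_NAME=${JOB_NAME:-SYS_IMPORT_FULL_01}   # hypothetical job name

# Quoted heredoc delimiter so the shell does not expand v$session_longops
cat > "$SQL_FILE" <<'SQL'
-- Data Pump jobs known to the database and their current state
SELECT owner_name, job_name, operation, job_mode, state, degree
FROM   dba_datapump_jobs;

-- Progress of long-running operations that are still in flight
SELECT sid, opname, sofar, totalwork,
       ROUND(sofar / totalwork * 100, 1) AS pct_done
FROM   v$session_longops
WHERE  totalwork > 0
AND    sofar < totalwork;
SQL

echo "sqlplus system@PDB01 @$SQL_FILE"
# After attaching, type STATUS at the Import> prompt to see worker activity
echo "impdp system@PDB01 attach=$JOB_NAME"
```

During index and constraint creation the long-operations view may show little movement, so the STATUS command in the attached session is often the more telling of the two.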

Enabling TRACE mode with Data Pump Export/Import. Some options for impdp - ArKZoYd. Datapump. One Can Succeed at Almost Anything For Which He Has Enthusiasm...: Performance Tuning of Data Pump. The Data Pump utilities are designed especially for very large databases.

If our site has very large quantities of data versus metadata, we should experience a dramatic increase in performance compared to the original Export and Import utilities. The Data Pump Export and Import utilities enable us to dynamically increase and decrease resource consumption for each job. This is done using the PARALLEL parameter to specify a degree of parallelism for the job. Data Pump Performance Tuning. I was tasked to move an entire small database from 1 server to another and finish the task ASAP.

The reason was that the site would be down until the task was completed. A quick test of exporting, copying files to the remote server, then importing had the following numbers: Here are the commands we initially used: expdp username/password directory=dump_dir dumpfile=full.dmp logfile=full.log full=y parallel=16 impdp username/password directory=dump_dir dumpfile=full.dmp logfile=full.log parallel=16 In our attempt to tune this process, we introduced exporting in parallel to multiple files and the results were amazing.

If your site has very large quantities of data versus metadata, you should experience a dramatic increase in performance compared to the original Export and Import utilities. This chapter briefly discusses why the performance is better and also suggests specific steps you can take to enhance performance of export and import operations.
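The parallel, multi-file export mentioned in the tuning anecdote above might look roughly like the following. This is a sketch rather than the commands the author actually ran: the degree of 4 and the file names are assumptions, and the script only prints the commands and saves them to a file.

```shell
#!/bin/sh
# Print export/import commands that pair PARALLEL with a %U dump file
# template, so each worker can write to (or read from) its own file.
# Degree and file names are illustrative assumptions.
set -eu
DEGREE=${DEGREE:-4}
CMD_FILE=${CMD_FILE:-dp_parallel_cmds.txt}

# %U expands to a two-digit sequence: full_01.dmp, full_02.dmp, ...
EXP_CMD="expdp username/password directory=dump_dir dumpfile=full_%U.dmp logfile=full_exp.log full=y parallel=$DEGREE"
IMP_CMD="impdp username/password directory=dump_dir dumpfile=full_%U.dmp logfile=full_imp.log parallel=$DEGREE"

# printf with %s keeps the %U in the commands literal
printf '%s\n' "$EXP_CMD" "$IMP_CMD" > "$CMD_FILE"
cat "$CMD_FILE"
```

With a single dump file, as in the initial commands, the workers have to share one file, which limits what PARALLEL can achieve; a file template removes that bottleneck. A reasonable starting point is to keep the degree close to the number of CPU cores and dump files available, then adjust based on measured run times.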

Data Pump export/import Performance tips.