OpenAnalytics. Machine Learning. R. Spark Packages. SparkR by amplab-extras. SparkR is an R package that provides a lightweight frontend for using Apache Spark from R.

SparkR exposes the Spark API through the RDD class and allows users to interactively run jobs from the R shell on a cluster.

NOTE: As of April 2015, SparkR has been officially merged into Apache Spark and is shipping in an upcoming release (1.4) due early summer 2015. You can contribute to and follow SparkR development on the Apache Spark mailing lists and issue tracker.

NOTE: The API in the upcoming Spark release (1.4) will differ from the one described here. Initial support for Spark in R will be focused on high-level operations instead of low-level ETL.

Features

SparkR exposes the RDD API of Spark as distributed lists in R.

sc <- sparkR.init("local")
lines <- textFile(sc, "
wordsPerLine <- lapply(lines, function(line) { length(unlist(strsplit(line, " "))) })

In addition to lapply, SparkR also allows closures to be applied on every partition using lapplyWithPartition.
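To see what the closure in the lapply snippet above computes without a Spark cluster, here is a minimal base R sketch. It is not the SparkR API: the sample lines, the helper name count_words and the hand-made "partitions" are assumptions for illustration, and the per-partition step only imitates the idea behind lapplyWithPartition on a plain R list.

```r
# Local stand-in for an RDD: a plain character vector, one element per line.
# (Hypothetical sample data; no Spark involved.)
lines <- c("the quick brown fox", "jumps over", "the lazy dog")

# Same closure as in the SparkR snippet: count the words on one line.
count_words <- function(line) {
  length(unlist(strsplit(line, " ")))
}

# Apply the closure to every element, as lapply does on the distributed list.
words_per_line <- lapply(lines, count_words)

# Rough analogue of a per-partition closure: split the lines into two
# chunks and apply one function to each whole chunk.
partitions <- split(lines, c(1, 1, 2))
words_per_partition <- lapply(partitions, function(part) {
  sum(vapply(part, count_words, integer(1)))
})
```

The per-element version returns one count per line, while the per-partition version returns one total per chunk, which is the practical difference between the two styles of closure.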

Installing SparkR . . . R Programming. R-Language Tools. edX R Course. R-How2s.

Big Data Predictive Analytics with Revolution R Enterprise - Revolution Analytics. Homepage. Microsoft R Open.

Testing Packages with Experimental R Devel Build for Windows · rwinlib/r-base Wiki. Rtools 3.3 is being updated to use a new compiler toolchain produced by Jeroen Ooms, based on GCC 4.9.3 and Mingw-W64 V3. The upcoming R 3.3 release plans to adopt this new toolchain. Package authors using compiled code should test their packages with the new toolchain to ensure compatibility. This document includes instructions for downloading the requisite versions of R, Rtools, and (optionally) RStudio to perform this testing.

Step 1: Install R-devel-experimental for Windows. An experimental build of R-devel that uses the new Rtools 3.3 toolchain is available on CRAN.

Step 2: Install the latest Rtools 3.3. An updated build of Rtools 3.3 that includes the new toolchain is available on CRAN.

Note that to be compatible with the instructions below, you should install Rtools 3.3 to the default location (c:\Rtools).

Step 3: Test installation of your package from CRAN (Rterm / RGUI or RStudio). Testing: install.packages("Rcpp")

The Comprehensive R Archive Network.

Integration of R, RStudio and Hadoop in a VirtualBox Cloudera Demo VM on Mac OS X.

Motivation. I was inspired by Revolution’s blog and the step-by-step tutorial from Jeffrey Breen on setting up a local virtual instance of Hadoop with R.

However, that tutorial describes the implementation using VMware’s application. One downside to using VMware is that it’s not free. Most people, me included, like to hear the words open-source and free, especially when the ride is smooth. VirtualBox offers an open-source alternative, so I chose it.

Description

Hadoop. Apache Hadoop is an open-source software framework that supports data-intensive distributed applications, licensed under the Apache v2 license.

R and Hadoop. The most common way to link R and Hadoop is to use HDFS (potentially managed by Hive or HBase) as the long-term store for all data, and use MapReduce jobs (potentially submitted from Hive, Pig, or Oozie) to encode, enrich, and sample data sets from HDFS into R.
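For readers new to the MapReduce model mentioned above, the following is a minimal single-machine sketch of its three phases in base R. It does not touch Hadoop or HDFS: the records data frame, its column names and the choice of a per-key sum are assumptions made purely for illustration.

```r
# Hypothetical records standing in for rows read from HDFS.
records <- data.frame(
  region = c("north", "south", "north", "east", "south", "north"),
  amount = c(10, 5, 7, 3, 8, 2),
  stringsAsFactors = FALSE
)

# Map: emit one (key, value) pair per record.
pairs <- lapply(seq_len(nrow(records)), function(i) {
  list(key = records$region[i], value = records$amount[i])
})

# Shuffle: group the emitted values by key.
keys <- vapply(pairs, function(p) p$key, character(1))
values <- vapply(pairs, function(p) p$value, numeric(1))
grouped <- split(values, keys)

# Reduce: collapse each group to a single summary value (here, a sum).
totals <- vapply(grouped, sum, numeric(1))
```

On a real cluster the map and reduce functions run on many nodes and the framework performs the shuffle; only the small reduced result would normally be pulled into an R session.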

Cloudera Hadoop Demo VM

Steps. Platforms used in this tutorial: 1. Pick type: Linux.

Microsoft’s New Data Science Virtual Machine. Earlier this week, Andrie showed you how to set up and provision your own virtual machine (VM) to run R and RStudio in Azure.

Another option is to use the new Microsoft Data Science Virtual Machine, a pre-configured instance that includes a suite of tools useful to data scientists, including: Revolution R Open (performance-enhanced R); Anaconda Python; Visual Studio Community Edition; Power BI Desktop (with R capabilities); SQL Server Express (with R integration); and the Azure SDK (including the ability to run R experiments).

There's no software charge associated with using this VM; you'll pay only the standard Azure infrastructure fees (starting at about 2 cents an hour for basic instances; more for more powerful instances).

If you're new to Azure, you can get started with an Azure Free Trial. By the way, if you're not familiar with these tools in the Data Science VM, Jan Mulkens provides a backgrounder on Data science with Microsoft, including an overview of the Microsoft components. Related March 2, 2016.

What is R? R is data analysis software: data scientists, statisticians, analysts, quants, and others who need to make sense of data use R for statistical analysis, data visualization, and predictive modeling.

R is a programming language: you do data analysis in R by writing scripts and functions in the R programming language. R is a complete, interactive, object-oriented language: designed by statisticians, for statisticians. The language provides objects, operators and functions that make the process of exploring, modeling, and visualizing data a natural one.

Why is R so useful. As an Excel power user (someone called me a guru recently!) I know Excel can be used to do pretty much anything; I’ve even seen Excel being used to play the Game of Life. If this is the case, why do we need R? In this post I’ll tell you why and then show you.

Reproducible. We can write an R script once to do any of the following: acquire data, clean, transform, analyse, model, report, publish. If the R script is written the right way, it is reproducible by default.
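As a small illustration of the stages listed above, here is a hedged sketch of a script that runs from acquisition through reporting (publishing is left out). It assumes the built-in mtcars dataset as the data source; the chosen columns and the linear model are illustrative and are not taken from the original post.

```r
# Acquire: a dataset that ships with R, so the script needs no external files.
cars <- datasets::mtcars

# Clean: keep only the columns used below and drop incomplete rows.
cars <- na.omit(cars[, c("mpg", "wt", "cyl")])

# Transform: treat the cylinder count as a category.
cars$cyl <- factor(cars$cyl)

# Analyse: mean fuel economy per cylinder group.
mpg_by_cyl <- aggregate(mpg ~ cyl, data = cars, FUN = mean)

# Model: fuel economy as a linear function of weight.
fit <- lm(mpg ~ wt, data = cars)

# Report: print the summary table and the fitted coefficients.
print(mpg_by_cyl)
print(round(coef(fit), 2))
```

Because every step is code and the input is fixed, rerunning the script reproduces the same table and coefficients, which is the point being made about reproducibility.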

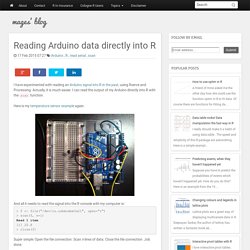

Flexible. Excel is flexible, as mentioned above. How about R? At the time of writing there are over 6000 packages available for use.

Scalability and Availability.

R in Insurance.

Reading Arduino data directly into R. I have experimented with reading an Arduino signal into R in the past, using Rserve and Processing.

Actually, it is much easier. I can read the output of my Arduino directly into R with the scan function. Here is my temperature sensor example again. All it takes to read the signal into the R console on my computer is:

> f <- file("/dev/cu.usbmodem3a21", open="r")
> scan(f, n=1)
Read 1 item
[1] 20.8
> close(f)

Super simple: Open the file connection.

Note: This worked for me on my Mac, and I am sure it will work in a very similar way on a Linux box as well, but I am not so sure about Windows. You might notice that each serial device shows up twice in /dev, once as a tty.* and once as a cu.*. You can find the file address of your Arduino by opening the Arduino software and looking it up under the menu Tools > Port.

RserveCLI - Home.
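The scan() approach for the Arduino described above can be tried without any hardware. The sketch below swaps the serial device for a text connection holding three made-up readings; read_sensor is a hypothetical helper name, and the readings are sample values rather than measured data.

```r
# Read up to `n` numeric values from an already-open connection.
# `read_sensor` is a hypothetical helper name, not part of any package.
read_sensor <- function(con, n = 1) {
  scan(con, n = n, quiet = TRUE)
}

# Stand-in for the serial device: a text connection holding three readings.
# With real hardware this would be file("/dev/cu.usbmodem3a21", open = "r").
con <- textConnection(c("20.8", "20.9", "21.1"))

first <- read_sensor(con)         # consumes one value
rest  <- read_sensor(con, n = 2)  # continues where the last read stopped
close(con)
```

Wrapping the call in a function keeps the connection handling (open, read, close) in one place, so switching between the simulated input and the real device only changes the line that creates con.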

SurajGupta/RserveCLI2. Rserve - Binary R server - RForge.net. Start every R tutorial for free - DataCamp. Reference index for {rugarch} R Programming. Learn R. R Resources. R.