Markov process. Introduction: A Markov process is a stochastic model that has the Markov property.

It can be used to model a random system that changes state according to a transition rule that depends only on the current state. This article describes the Markov process in a very general sense; in practice the concept is usually specified further. In particular, the system's state space and time parameter index need to be specified. Note that there is no definitive agreement in the literature on the use of some of the terms that signify special cases of Markov processes. Markov processes arise in probability and statistics in one of two ways: a stochastic process defined by a separate argument may be shown mathematically to have the Markov property, or, when modelling a system, a process may be assumed to be Markov and constructed on that basis.
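A transition rule that "only depends on the current state" can be made concrete with a short simulation. This is a minimal sketch, assuming a dictionary that maps each state to a list of (next_state, probability) pairs; the names `Rule`, `next_state`, `simulate` and the two-state "weather" rule are hypothetical illustrations, not taken from the source.

```python
import random
from typing import Dict, Hashable, List, Optional, Tuple

# Hypothetical representation: state -> list of (next_state, probability).
Rule = Dict[Hashable, List[Tuple[Hashable, float]]]


def next_state(rule: Rule, current: Hashable, rng: random.Random) -> Hashable:
    """Sample the next state; only `current` is consulted, never the history."""
    states, probs = zip(*rule[current])
    return rng.choices(states, weights=probs, k=1)[0]


def simulate(rule: Rule, start: Hashable, steps: int,
             seed: Optional[int] = None) -> List[Hashable]:
    """Return the path [X_0, X_1, ..., X_steps] starting from `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        path.append(next_state(rule, path[-1], rng))
    return path


# Hypothetical two-state rule; each state's probabilities sum to 1.
weather: Rule = {
    "sunny": [("sunny", 0.8), ("rainy", 0.2)],
    "rainy": [("sunny", 0.4), ("rainy", 0.6)],
}
print(simulate(weather, "sunny", 10, seed=1))
```

Because `next_state` receives only the current state, the simulated process has the Markov property by construction, whatever the earlier path looked like.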

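Markov property. The general case: Let $(\Omega, \mathcal{F}, \mathbb{P})$ be a probability space with a filtration $(\mathcal{F}_s,\ s \in I)$, for some (totally ordered) index set $I$; and let $(S, \mathcal{S})$ be a measurable space. An $(S, \mathcal{S})$-valued stochastic process $X = (X_t,\ t \in I)$ adapted to the filtration is said to possess the Markov property with respect to $\{\mathcal{F}_s\}$ if, for each $A \in \mathcal{S}$ and each $s, t \in I$ with $s < t$,

$$\mathbb{P}(X_t \in A \mid \mathcal{F}_s) = \mathbb{P}(X_t \in A \mid X_s).$$

For discrete-time Markov chains: when $S$ is a discrete set with the discrete σ-algebra and $I = \mathbb{N}$, this can be reformulated as

$$\mathbb{P}(X_n = x_n \mid X_{n-1} = x_{n-1}, \dots, X_0 = x_0) = \mathbb{P}(X_n = x_n \mid X_{n-1} = x_{n-1}).$$

Examples of Markov chains. This page contains examples of Markov chains in action.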
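The discrete-time condition says that, once X_{n-1} is known, earlier values such as X_{n-2} carry no extra information about X_n. The sketch below checks this empirically for a hypothetical two-state chain on {0, 1} (the parameters `p01`, `p11` and the helper names are illustrative assumptions): it estimates P(X_n = 1 | X_{n-1} = 0, X_{n-2} = a) for a = 0 and a = 1, and both estimates should land near P(X_n = 1 | X_{n-1} = 0) = p01. This is a statistical sanity check on one simulated path, not a proof.

```python
import random
from collections import Counter
from typing import Dict, List


def sample_path(p01: float, p11: float, n: int, seed: int = 0) -> List[int]:
    """Simulate a path of length n of a two-state chain on {0, 1}, starting at 0.

    p01 = P(X_k = 1 | X_{k-1} = 0), p11 = P(X_k = 1 | X_{k-1} = 1).
    """
    rng = random.Random(seed)
    path = [0]
    for _ in range(n - 1):
        p_one = p11 if path[-1] == 1 else p01
        path.append(1 if rng.random() < p_one else 0)
    return path


def conditional_freqs(path: List[int]) -> Dict[int, float]:
    """Estimate P(X_n = 1 | X_{n-1} = 0, X_{n-2} = a) for a in {0, 1}."""
    hits: Counter = Counter()
    totals: Counter = Counter()
    for two_back, one_back, current in zip(path, path[1:], path[2:]):
        if one_back == 0:
            totals[two_back] += 1
            hits[two_back] += current
    return {a: hits[a] / totals[a] for a in (0, 1)}


path = sample_path(p01=0.3, p11=0.7, n=200_000, seed=42)
# Under the Markov property both estimates should be close to p01 = 0.3:
# knowing X_{n-2} adds nothing once X_{n-1} is known.
print(conditional_freqs(path))
```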

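Board games played with dice. A game of snakes and ladders, or any other game whose moves are determined entirely by dice, is a Markov chain; indeed, it is an absorbing Markov chain. This is in contrast to card games such as blackjack, where the cards represent a 'memory' of the past moves. To see the difference, consider the probability of a certain event in the game. In the dice games mentioned above, the only thing that matters is the current state of the board: the next state depends on the current state and the next roll of the dice, not on how the board reached its current state. In blackjack, by contrast, the probability of drawing a particular card depends on which cards have already been dealt.

A center-biased random walk. Consider a random walk on the number line where, at each step, the position (call it $x$) may change by $+1$ (to the right) or $-1$ (to the left) with probabilities

$$P_{\text{move left}} = \frac{1}{2} + \frac{1}{2}\left(\frac{x}{c + |x|}\right), \qquad P_{\text{move right}} = 1 - P_{\text{move left}},$$

where $c$ is a constant greater than 0. For example, if $c = 1$, the probabilities of a move to the left at positions $x = -2, -1, 0, 1, 2$ are $\tfrac{1}{6}, \tfrac{1}{4}, \tfrac{1}{2}, \tfrac{3}{4}, \tfrac{5}{6}$, respectively. Because $P_{\text{move left}}$ exceeds $\tfrac{1}{2}$ for $x > 0$ and falls below it for $x < 0$, the walk tends to drift back toward the origin.

Www.dartmouth.edu/~chance/teaching_aids/books_articles/probability_book/Chapter11.pdf.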
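The center-biased walk can be sketched in a few lines, assuming the left-move probability P(move left) = 1/2 + (1/2)·x/(c + |x|) with P(move right) = 1 − P(move left). Exact `Fraction` arithmetic reproduces the c = 1 values exactly; the function names `p_left`, `p_right` and `walk` are hypothetical, and `c` is assumed to be a positive int or `Fraction`.

```python
import random
from fractions import Fraction
from typing import List, Optional, Union

Rational = Union[int, Fraction]


def p_left(x: int, c: Rational = 1) -> Fraction:
    """P(move left) = 1/2 + (1/2) * x / (c + |x|), for a constant c > 0."""
    return Fraction(1, 2) + Fraction(x, 2 * (c + abs(x)))


def p_right(x: int, c: Rational = 1) -> Fraction:
    """P(move right) = 1 - P(move left)."""
    return 1 - p_left(x, c)


def walk(steps: int, c: Rational = 1, start: int = 0,
         seed: Optional[int] = None) -> List[int]:
    """Simulate the walk; each step depends only on the current position x."""
    rng = random.Random(seed)
    x = start
    path = [x]
    for _ in range(steps):
        x += -1 if rng.random() < p_left(x, c) else 1
        path.append(x)
    return path


print([str(p_left(x)) for x in range(-2, 3)])  # ['1/6', '1/4', '1/2', '3/4', '5/6']
```

The step rule reads only `x`, so the walk is a Markov chain on the integers. The bias toward 0 shows up as `p_left(x) > 1/2` for positive `x`.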

Markov random walk. v1.2. Application to Markov Chains. Introduction: Suppose there is a physical or mathematical system that has n possible states and, at any one time, the system is in one and only one of its n states.

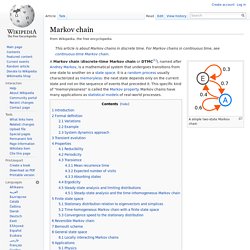

Also assume that at a given observation period, say the kth period, the probability of the system being in a particular state depends only on its status at the (k−1)st period. Such a system is called a Markov chain or Markov process. Let us clarify this definition with the following example.

Example. Suppose a car rental agency has three locations in Ottawa: a Downtown location (labeled A), an East end location (labeled B), and a West end location (labeled C). After making a delivery, a driver goes to the nearest location to make the next delivery. We model this problem with a matrix T, called the transition matrix of the system. Now, let's start with a simple question. Let's try this for another pair. For an example, look at the matrix.

Www.math.rutgers.edu/courses/338/coursenotes/chapter5.pdf.

Markov chain. A Markov chain (discrete-time Markov chain or DTMC[1]), named after Andrey Markov, is a mathematical system that undergoes transitions from one state to another on a state space.
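For the car rental example, the location distribution after k periods follows from repeatedly applying the transition matrix. The sketch below assumes the row convention (T[i][j] is the probability of moving from location i to location j, and a distribution updates as x_k = x_{k-1} T); some course notes use the transposed column convention x_k = T x_{k-1}. The probabilities in `T` are hypothetical illustrative values, not the agency's data, and `step` and `distribution_after` are hypothetical helper names.

```python
from fractions import Fraction as F
from typing import List

LOCATIONS = ["A", "B", "C"]  # Downtown, East end, West end

# Hypothetical transition matrix (row-stochastic): each row sums to 1.
T: List[List[F]] = [
    [F(1, 2), F(1, 4), F(1, 4)],
    [F(1, 3), F(1, 3), F(1, 3)],
    [F(1, 4), F(1, 4), F(1, 2)],
]


def step(dist: List[F], T: List[List[F]]) -> List[F]:
    """One period: x_k = x_{k-1} T, with the distribution as a row vector."""
    n = len(T)
    return [sum(dist[i] * T[i][j] for i in range(n)) for j in range(n)]


def distribution_after(dist: List[F], T: List[List[F]], k: int) -> List[F]:
    """Apply k periods; only the current distribution is needed each time."""
    for _ in range(k):
        dist = step(dist, T)
    return dist


x0 = [F(1), F(0), F(0)]  # the driver starts at the Downtown location (A)
print([str(p) for p in distribution_after(x0, T, 2)])  # ['19/48', '13/48', '1/3']
```

Exact fractions make the arithmetic easy to check by hand: the entry for A after two periods is 1/2·1/2 + 1/4·1/3 + 1/4·1/4 = 19/48.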

It is a random process usually characterized as memoryless: the next state depends only on the current state and not on the sequence of events that preceded it. This specific kind of "memorylessness" is called the Markov property. Markov chains have many applications as statistical models of real-world processes.

Introduction: A Markov chain is a stochastic process with the Markov property. In the literature, different Markov processes are designated as "Markov chains".

Elements of the Theory of Markov Processes and Their Applications - Albert T. Bharucha-Reid. Wiki - Syllabus. Markov Processes.