LinkedIn open sources stream-processing engine Samza, its take on Storm. LinkedIn has open sourced a technology called Samza, which the company uses to process data in real time.

It sounds an awful lot like Storm, the de facto stream-processing technology for web properties that has a home inside Twitter, only Samza is built on top of Hadoop and uses LinkedIn’s homemade Kafka messaging system. Even so, Storm and Samza are rather similar. As LinkedIn’s Chris Riccomini wrote in the blog post introducing Samza, “[It] helps you build applications that process feeds of messages: update databases, compute counts and other aggregations, transform messages, and a lot more.” Those are some classic Storm applications and, indeed, the Samza documentation includes a page dedicated to comparing the two systems. When LinkedIn was spreading the word of Samza through various forums and other online communities last month, one commenter on Grokbase noted the possible benefits of Samza: “Like many we use Storm for near real-time processing our Kafka based streams.

Percolator, Dremel and Pregel – Google’s new data-crunching ecosystem. Hadoop traces its origins to Google, where two early projects, GFS (Google File System) and GMR (Google MapReduce), were written alongside Bigtable to manage large volumes of data.

These systems are great at crunching large volumes of data in batch mode in a distributed computing environment (with commodity servers). Any change to the data requires streaming over the entire data set, and thus incurs high latency. So they are good for “data at rest,” or static data. Now Google finds itself limited by its own invention of GFS/GMR/Bigtable. Hence it has been working on a post-Hadoop set of data-crunching tools: Percolator, Dremel, and Pregel. Percolator is a system for incrementally processing updates to a large data set. Dremel is for ad hoc analytics. Pregel is a system for large-scale graph processing and graph data analysis.

Complex event processing. Event processing is a method of tracking and analyzing (processing) streams of information (data) about things that happen (events),[1] and deriving a conclusion from them. Complex event processing, or CEP, is event processing that combines data from multiple sources[2] to infer events or patterns that suggest more complicated circumstances.

The goal of complex event processing is to identify meaningful events (such as opportunities or threats)[3] and respond to them as quickly as possible. These events may be happening across the various layers of an organization as sales leads, orders or customer service calls. Or, they may be news items,[4] text messages, social media posts, stock market feeds, traffic reports, weather reports, or other kinds of data.[1] An event may also be defined as a "change of state," when a measurement exceeds a predefined threshold of time, temperature, or other value.
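The "change of state" definition above can be illustrated with a minimal sketch. It assumes a stream of timestamped measurements and a fixed threshold; the names `Reading`, `ThresholdEvent`, and `detect_threshold_events` are hypothetical and do not come from any CEP product. An event is emitted only when the measurement crosses the threshold, not for every reading.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Reading:
    """A single measurement (hypothetical shape, for illustration only)."""
    timestamp: int
    value: float


@dataclass(frozen=True)
class ThresholdEvent:
    """Emitted when the measurement changes state relative to the threshold."""
    timestamp: int
    value: float
    state: str  # "above" or "normal"


def detect_threshold_events(
    readings: Iterable[Reading], threshold: float
) -> Iterator[ThresholdEvent]:
    """Yield an event each time the stream crosses the threshold."""
    above = False  # assumed initial state: not above the threshold
    for reading in readings:
        now_above = reading.value > threshold
        if now_above != above:
            # The state changed, so this reading counts as an event.
            state = "above" if now_above else "normal"
            yield ThresholdEvent(reading.timestamp, reading.value, state)
            above = now_above


readings = [Reading(0, 20.0), Reading(1, 31.5), Reading(2, 33.0), Reading(3, 29.0)]
events = list(detect_threshold_events(readings, threshold=30.0))
for event in events:
    print(event)
```

Because the function is a generator, it can consume an unbounded feed and respond as soon as a crossing occurs, which is the "as quickly as possible" goal described above. A real CEP engine would also correlate several such streams.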

Conceptual description. CEP relies on a number of techniques.[7]

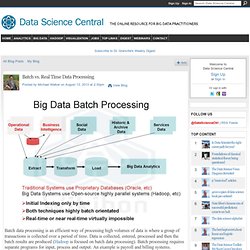

Graph Data Processing. Real-Time Data Processing. Batch Data Processing. In-Memory Processing. Batch vs. Real-Time Data Processing. Batch data processing is an efficient way of processing high volumes of data, in which a group of transactions is collected over a period of time.

Data is collected, entered, and processed, and then the batch results are produced (Hadoop is focused on batch data processing). Batch processing requires separate programs for input, processing, and output. Examples include payroll and billing systems. In contrast, real-time data processing involves continual input, processing, and output of data. Data must be processed within a short time period (in real time or near real time). While most organizations use batch data processing, sometimes an organization needs real-time data processing. Complex event processing (CEP) combines data from multiple sources to detect patterns and attempt to identify either opportunities or threats.
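The contrast between the two modes can be sketched in a few lines. This is an illustrative example under simple assumptions (transactions are plain amounts, and the only computation is a total); the function names `batch_total` and `streaming_totals` are hypothetical. The batch version produces one result after the whole group has been collected, while the streaming version produces an updated result after every input.

```python
from typing import Iterable, Iterator


def batch_total(transactions: Iterable[float]) -> float:
    """Batch mode: collect the whole group first, then process it once."""
    collected = list(transactions)  # nothing is output until collection ends
    return sum(collected)


def streaming_totals(transactions: Iterable[float]) -> Iterator[float]:
    """Real-time mode: update and emit the result as each transaction arrives."""
    total = 0.0
    for amount in transactions:
        total += amount
        yield total  # an up-to-date answer is available after every input


amounts = [100.0, 250.0, 75.5]

batch_result = batch_total(amounts)
running = list(streaming_totals(amounts))

print(batch_result)  # 425.5
print(running)       # [100.0, 350.0, 425.5]
```

Both approaches reach the same final total. The difference is latency: the batch result only exists once the collection period is over, whereas the streaming result is current after each transaction.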

Operational Intelligence (OI) uses real-time data processing and CEP to gain insight into operations by running query analysis against live feeds and event data.