Raspberry Pi Face Recognition Treasure Box

Optical Player Tracking

In partnership with Hego US and FOX Sports, Sportvision delivers a new graphics system that helps broadcasters visualize personnel changes on the field, call attention to interesting match-ups, and dissect and analyze plays in new ways.

Especially with wide-angle shots, it can be difficult for fans to see who is lined up left or right, who is back to receive the punt, or who is lined up in the slot. Optical player tracking enables new on-air enhancements that help fans follow the action in real time, much as they do today in NASCAR with RACEf/x pointers and graphics.

Hego US’ optical tracking system uses two banks of eight unmanned cameras mounted high in the stadium at the two 35-yard lines. The cameras track all moving objects, and technicians identify and tag players by number. Once on screen, the effects remain until they are removed, so analysts can quickly point out a particular player and follow him throughout an entire play.

Motion Tracking on the Cheap with a PIC

[Resolved] get the Server data

2D Room Mapping With a Laser and a Webcam

Getting Started

When you download and install the EyeTribe SDK, the EyeTribe Server and EyeTribe UI are installed on your computer.

This tutorial is a starting point for using the EyeTribe UI on Windows.

Starting EyeTribe UI

The EyeTribe UI application is started either from the desktop icon or from the TheEyeTribe folder in the Start menu under All Programs. By default, the software is installed in C:\Program Files (x86)\EyeTribe\. This folder contains two subfolders, Client and Server.

Eyetribe-docs

Eye tracking, or gaze tracking, is a technology that consists of calculating a user’s eye gaze point as he or she looks around.
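A tracker reports a stream of noisy gaze points, and both input and analysis applications usually group those samples into fixations first. The sketch below shows one well-known way to do that, dispersion-threshold identification (I-DT). It is not the EyeTribe SDK’s method; the sample format `(timestamp_ms, x_px, y_px)` and the default thresholds are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Assumed sample format: (timestamp in ms, x in screen px, y in screen px).
GazeSample = Tuple[float, float, float]


@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    x: float  # centroid of the samples in the fixation
    y: float


def _dispersion(window: Sequence[GazeSample]) -> float:
    """I-DT dispersion: horizontal extent plus vertical extent of the window."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples: Sequence[GazeSample],
                     max_dispersion_px: float = 50.0,
                     min_duration_ms: float = 100.0) -> List[Fixation]:
    """Group gaze samples into fixations (thresholds are illustrative)."""
    fixations: List[Fixation] = []
    i, n = 0, len(samples)
    while i < n:
        # Grow the window until it spans at least min_duration_ms.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break  # remaining samples are too short to form a fixation
        if _dispersion(samples[i:j + 1]) <= max_dispersion_px:
            # Extend the fixation while the points stay tightly clustered.
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion_px:
                j += 1
            window = samples[i:j + 1]
            cx = sum(s[1] for s in window) / len(window)
            cy = sum(s[2] for s in window) / len(window)
            fixations.append(Fixation(samples[i][0], samples[j][0], cx, cy))
            i = j + 1
        else:
            i += 1  # saccade sample: slide the window start forward
    return fixations
```

Fixation centroids can drive gaze-based selection, while their positions and durations feed the heatmaps used to evaluate a page or cover layout.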

A device equipped with an eye tracker lets users use their eye gaze as an input modality that can be combined with other input methods such as mouse, keyboard, touch, and gestures; these are referred to as active applications. Furthermore, eye gaze data collected with an eye tracker can be used to improve the design of a website or a magazine cover; these passive applications are described in more detail later on. Applications that can benefit from eye tracking include games, OS navigation, e-books, market research studies, and usability testing.

Computers Watching Movies

Computers Watching Movies (Exhibition Cut): computationally produced HD video with stereo audio.

Computers Watching Movies shows what a computational system sees when it watches the same films that we do.

The work illustrates this vision as a series of temporal sketches, in which the sketching process is presented in sync with the audio from the original clip. Viewers are provoked to ask how computer vision differs from their own human vision, and what that difference reveals about our culturally developed ways of looking.

This Is Your Computer Watching The Matrix

1.4. Matplotlib: plotting - Scipy lecture notes

RGBDToolkit - DSLR + DEPTH Filmmaking

Google launches Glass Dev Kit preview, shows off augmented reality apps

Today Google launched the Glass Development Kit (GDK) "Sneak Preview," which will finally allow developers to make real, native apps for Google Glass.

Previously, Glass apps were limited to what the Mirror API allowed; developers now have full access to the hardware. Google Glass runs a heavily skinned version of Android 4.0.4, so Glass development is very similar to Android development. The GDK is downloaded through the Android SDK Manager, and Glass is just another target device in the Eclipse plugin.

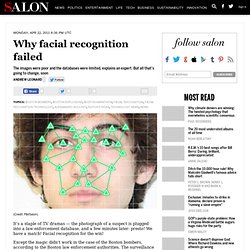

Developers can access Glass voice recognition from within their apps as an intent, but it appears that only Google can add "OK, Glass" commands to the main voice menu.

Why facial recognition failed

It’s a staple of TV dramas. The photograph of a suspect is plugged into a law enforcement database, and a few minutes later, presto!

We have a match! Facial recognition for the win! Except the magic didn’t work in the case of the Boston bombers, according to Boston law enforcement authorities.

Why we recognize our friends in a crowd, even when we can’t see their faces

You are out on the street, and in the distance, so far away that you cannot make out the person’s face, you recognize one of your friends.

But if you didn’t see their face, how did you know who it was? Thanks to their body shape, explain psychologists at the University of Texas at Dallas, who published their study in Psychological Science. To reach this result, they showed participants photos in which the people’s faces were hard to make out. As in the game of Memory, participants had to find the images that showed the same people, Pacific Standard explains; these were images that facial recognition software had failed to identify. The study found that participants did better when they could "see the bodies of the people in the photographs," which was not the case "when they could see only their faces."

Real-Time Adaptive 3D Face Tracking and Eye Gaze Estimation

BlackHat USA 2011: Faces Of Facebook-Or, How The Largest Real ID Database In The World Came To Be

Hands-on with Google’s latest acquisition: Flutter, a webcam gesture app

Apple patents eye-tracking 3D technology for iPhone, iPad, or Mac

3D is among the least liked technologies for many geeks.

Sure, it can be cool to add an extra dimension to your content, but it’s been pushed so clumsily by TV and gadget manufacturers that it feels more like a forced gimmick than an exciting new way of interacting with content. The eye strain that it often induces doesn’t exactly help either. With that in mind, you may want to take this with a few extra grains of salt. Apple has patented a method of presenting 3D content on its devices that wouldn’t require glasses or even a stereoscopic display.

Hockey Fans to Test Facial Recognition Technology

Adding An Eye-Tracker To An Android

Last April Denmark-based start-up The Eye Tribe demonstrated prototype eye-tracking technology for mobile devices.

Its system bounces infrared light off the user’s pupils. That’s not particularly new; The Eye Tribe’s twist is using the device’s existing processors to process the tracking data. This month, the company began taking orders for a US $99 developer kit; the company hopes the kit will turn out to be a holiday 2013 stocking stuffer for the developer in your life.
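The article says only that the system bounces infrared light off the pupils. A common textbook approach built on that idea is pupil center/corneal reflection (PCCR): the tracker measures the vector from the infrared glint to the pupil center in the camera image and maps it to screen coordinates with a polynomial fitted during a short calibration. The sketch below assumes that approach; it is not The Eye Tribe’s actual algorithm, and the second-order polynomial and 9-point calibration grid are illustrative choices.

```python
from typing import List, Sequence, Tuple

Vec = Tuple[float, float]


def features(v: Vec) -> List[float]:
    """Second-order polynomial terms of a pupil-glint vector (vx, vy)."""
    vx, vy = v
    return [1.0, vx, vy, vx * vy, vx * vx, vy * vy]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("calibration points do not constrain the model")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        acc = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = acc / m[r][r]
    return x


def fit_axis(vectors: Sequence[Vec], targets: Sequence[float]) -> List[float]:
    """Least-squares fit via the normal equations (Phi^T Phi) c = Phi^T t."""
    phi = [features(v) for v in vectors]
    k = len(phi[0])
    ata = [[sum(p[i] * p[j] for p in phi) for j in range(k)] for i in range(k)]
    atb = [sum(p[i] * t for p, t in zip(phi, targets)) for i in range(k)]
    return solve(ata, atb)


class GazeMapper:
    """Maps pupil-glint vectors to screen coordinates after calibration."""

    def __init__(self, vectors: Sequence[Vec], screen_points: Sequence[Vec]):
        if len(vectors) != len(screen_points) or len(vectors) < 6:
            raise ValueError("need at least 6 matched calibration samples")
        # One independent polynomial per screen axis.
        self.cx = fit_axis(vectors, [p[0] for p in screen_points])
        self.cy = fit_axis(vectors, [p[1] for p in screen_points])

    def predict(self, v: Vec) -> Vec:
        f = features(v)
        x = sum(c * t for c, t in zip(self.cx, f))
        y = sum(c * t for c, t in zip(self.cy, f))
        return (x, y)
```

During calibration the user looks at known on-screen targets while the tracker records one pupil-glint vector per target; afterwards `predict` converts each new vector into a gaze point. Six coefficients per axis is why at least six targets are required, and a 3x3 grid gives some redundancy.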

For 2013, the kit will be available only for Windows tablets (photo above); the Android kit (photo below) will follow in early 2014, the company says. The company doesn’t expect to see its infrared attachment hanging off every mobile device; rather, by getting developers working with its technology now, it hopes to have a head start when device manufacturers decide to build infrared systems into their products.

Why facial recognition tech failed in the Boston bombing manhunt

In the last decade, the US government has made a big investment in facial recognition technology. The Department of Homeland Security paid out hundreds of millions of dollars in grants to state and local governments to build facial recognition databases, pulling photos from drivers’ licenses and other identification to create a massive library of residents, all in the name of anti-terrorism.

In New York, the Port Authority is installing a "defense grade" computer-driven surveillance system around the World Trade Center site to automatically catch potential terrorists through a network of hundreds of digital eyes. But then an act of terror happened in Boston on April 15.

Download - myEye Project

Opengazer: open-source gaze tracker for ordinary webcams

OpenEyes - Eye tracking for the masses

Free Eye Tracker API for Eye Tracking Integration

The S2 Eye Tracker supports an open standard eye-gaze interface. The interface uses TCP/IP for data communication and XML for data structures. Our vision is to see this API adopted by many eye tracker developers, giving application developers a standardized interface to eye gaze hardware. For now, this easy-to-use, free eye tracker API provides a simple way to interface with the S2 Eye Tracker. The S2 Eye Tracker API requires no software download whatsoever.
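Because the interface is described as XML over TCP/IP, a client mainly has to do two things: reassemble complete records from the byte stream (TCP has no message boundaries) and parse each record. The sketch below shows that pattern with Python’s standard `xml.etree.ElementTree`. The CRLF record delimiter and the `<GAZE X=".." Y=".." VALID="1"/>` element are hypothetical placeholders, not the documented S2 schema; substitute the real element and attribute names from the S2 API documentation.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple


class XmlRecordFramer:
    """Splits a TCP byte stream into XML records.

    Assumes (hypothetically) that each record is one XML element followed by
    CRLF. Partial records are buffered until the rest arrives.
    """

    def __init__(self, delimiter: bytes = b"\r\n") -> None:
        self._buf = b""
        self._delim = delimiter

    def feed(self, chunk: bytes) -> List[ET.Element]:
        self._buf += chunk
        records: List[ET.Element] = []
        while True:
            idx = self._buf.find(self._delim)
            if idx < 0:
                break  # incomplete record stays in the buffer
            line = self._buf[:idx].strip()
            self._buf = self._buf[idx + len(self._delim):]
            if line:
                records.append(ET.fromstring(line))
        return records


def gaze_point(elem: ET.Element) -> Optional[Tuple[float, float]]:
    """Extract (x, y) from a hypothetical <GAZE X=".." Y=".." VALID="1"/> record."""
    if elem.tag != "GAZE" or elem.get("VALID") != "1":
        return None
    return float(elem.get("X", "nan")), float(elem.get("Y", "nan"))


# Hypothetical usage; HOST and PORT are placeholders, not documented S2 values:
# import socket
# framer = XmlRecordFramer()
# with socket.create_connection((HOST, PORT)) as sock:
#     while chunk := sock.recv(4096):
#         for rec in framer.feed(chunk):
#             print(gaze_point(rec))
```

Keeping framing separate from parsing means the same client logic works whether a record arrives in one `recv` call or is split across several.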

Inexpensive or Free Head & Eye Tracking

For individuals who have lost the ability to use a standard mouse to control their computer, there are several low-cost or no-cost alternatives. These methods seem to work best when the target areas (the spots where you click) are larger, requiring less precise movements. To move the mouse around the screen without using your hands, you need both software and a tracking device. Head tracking software to move the mouse around the screen: