Outsourced Software Product Development Company. Pivotal Tracker.

Farhan Thawar, VP Engineering of Pivotal Labs, giving a talk in Toronto.

Pivotal Labs is an agile software development consulting firm with headquarters in San Francisco and offices in Manhattan and Boulder, Colorado.

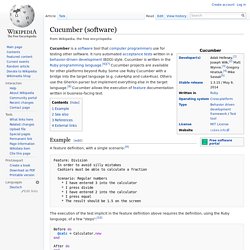

Pivotal is a wholly owned subsidiary of EMC Corporation. Pivotal promotes Ruby on Rails, pair programming, test-driven development, and behavior-driven development. Clients include Groupon, Best Buy,[1] EMI Music, Zendesk, Mavenlink, Twitter, and Urban Dictionary. The company was founded in 1989 by Rob Mee and Sherry Erskine.[2] In 2008, Pivotal Labs released Pivotal Tracker, which it had been using as its internal project management and collaboration software, to the Ruby on Rails community.

Cucumber (software)

A feature definition, with a single scenario:[9]

    Feature: Division
      In order to avoid silly mistakes
      Cashiers must be able to calculate a fraction

      Scenario: Regular numbers
        * I have entered 3 into the calculator
        * I press divide
        * I have entered 2 into the calculator
        * I press equal
        * The result should be 1.5 on the screen

Executing the test implicit in the feature definition above requires a few "steps" to be defined in Ruby:[10]

    # Create a fresh calculator before each scenario.
    Before do
      @calc = Calculator.new
    end

    # Nothing to clean up after a scenario.
    After do
    end

    Given /I have entered (\d+) into the calculator/ do |n|
      @calc.push n.to_i
    end

    # Calls the calculator method named in the step, e.g. "divide" or "equal".
    When /I press (\w+)/ do |op|
      @result = @calc.send op
    end

    # The /i flag lets this step match "The result ..." as capitalized in the scenario.
    Then /the result should be (.*) on the screen/i do |result|
      @result.should == result.to_f
    end
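The step definitions above call `push`, `divide`, and `equal` on a `Calculator` object that the excerpt never shows. The following is a minimal sketch of one class that would satisfy those calls. The class body is an assumption made for illustration, not the implementation used in the original example.

```ruby
# Hypothetical Calculator: illustrative only, not part of Cucumber
# and not the class from the original example.
class Calculator
  def initialize
    @operands = [] # numbers entered so far
    @pending = nil # operator waiting to be applied
  end

  # Called by the "I have entered N" step.
  def push(n)
    @operands.push(n)
  end

  # Called by "I press divide": remember the operator, compute later.
  def divide
    @pending = :/
    nil
  end

  # Called by "I press equal": apply the pending operator to the last two operands.
  def equal
    raise "no operator pressed" if @pending.nil?
    right = @operands.pop
    left = @operands.pop
    left.to_f.public_send(@pending, right)
  end
end

# The same sequence of calls that the scenario triggers through the steps.
calc = Calculator.new
calc.push 3
calc.send "divide"
calc.push 2
result = calc.send "equal"
puts result
```

Converting the left operand with `to_f` keeps the division from truncating, which is what the scenario's expected value of 1.5 depends on.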

Continuous integration.

CI was originally intended to be used in combination with automated unit tests written through the practices of test-driven development.

Initially, CI was conceived of as running all unit tests and verifying that they all passed before committing to the mainline. This helps prevent one developer's work in progress from breaking another developer's copy. If necessary, partially complete features can be disabled with feature toggles before committing. Later elaborations of the concept introduced build servers, which automatically run the unit tests periodically, or even after every commit, and report the results to the developers.
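The feature toggles mentioned above can be as simple as a lookup that guards unfinished code. The sketch below assumes a hypothetical in-memory registry (`FEATURE_TOGGLES`) and a hypothetical `checkout_total` function. Real projects often read toggles from configuration instead.

```ruby
# Hypothetical toggle registry; the names are illustrative only.
FEATURE_TOGGLES = {
  new_checkout: false # partially complete, so kept off on the mainline
}.freeze

# Unknown toggles default to off, so a typo cannot enable unfinished code.
def feature_enabled?(name)
  FEATURE_TOGGLES.fetch(name, false)
end

def checkout_total(prices)
  if feature_enabled?(:new_checkout)
    # Work in progress: unreachable while the toggle is off.
    raise NotImplementedError, "new checkout flow is incomplete"
  else
    prices.sum
  end
end

puts checkout_total([2, 3])
```

With the toggle off, the unfinished branch can be committed to the mainline without changing behavior for other developers.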

The use of build servers (not necessarily running unit tests) had already been practised by some teams outside the XP community.

Regression testing.

The intent of regression testing is to ensure that a change to the software has not introduced new faults.[1] One of the main reasons for regression testing is to determine whether a change in one part of the software affects other parts of the software.[2] Common methods of regression testing include rerunning previously completed tests and checking whether program behavior has changed and whether previously fixed faults have re-emerged.
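As a rough illustration of rerunning previously completed tests, the sketch below keeps a table of earlier cases, including one that pins a previously fixed fault, and reruns all of them. The `average` function and its past fault (returning NaN for an empty list) are invented for this example.

```ruby
# Hypothetical function that once returned NaN for an empty list.
def average(values)
  return 0.0 if values.empty? # the fix for the earlier fault
  values.sum.to_f / values.size
end

# Previously completed tests, kept so they can be rerun after every change.
REGRESSION_CASES = [
  { input: [2, 4], expected: 3.0 },
  { input: [5], expected: 5.0 },
  { input: [], expected: 0.0 } # guards against the old fault re-emerging
].freeze

# Returns the cases whose behavior has changed; an empty list means no regressions.
def run_regression_suite
  REGRESSION_CASES.reject { |c| average(c[:input]) == c[:expected] }
end

failures = run_regression_suite
puts failures.empty? ? "no regressions" : "regressions: #{failures.inspect}"
```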

Regression testing can be performed to test a system efficiently by systematically selecting the appropriate minimum set of tests needed to adequately cover a particular change.

Unit testing.

In computer programming, unit testing is a method by which individual units of source code, sets of one or more computer program modules together with associated control data, usage procedures, and operating procedures, are tested to determine whether they are fit for use.[1] Intuitively, one can view a unit as the smallest testable part of an application.
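To make the idea of a unit concrete, here is a minimal sketch in plain Ruby that tests a single small class. The `Stack` class and the test table are hypothetical. A real project would more likely use a framework such as Minitest or RSpec, but the structure is the same.

```ruby
# Hypothetical unit under test: one small class.
class Stack
  def initialize
    @items = []
  end

  # Returns self so calls can be chained.
  def push(item)
    @items.push(item)
    self
  end

  def pop
    @items.pop
  end

  def empty?
    @items.empty?
  end
end

# Each test builds its own Stack, so no test depends on state left by another.
UNIT_TESTS = {
  "new stack is empty" => -> { Stack.new.empty? },
  "pop returns the last pushed item" => -> { Stack.new.push(1).push(2).pop == 2 },
  "pop on an empty stack returns nil" => -> { Stack.new.pop.nil? }
}.freeze

# Runs every test and maps its name to :pass or :fail.
def run_unit_tests
  UNIT_TESTS.map { |name, test| [name, test.call ? :pass : :fail] }.to_h
end

run_unit_tests.each { |name, status| puts "#{status}: #{name}" }
```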

In procedural programming, a unit could be an entire module, but it is more commonly an individual function or procedure. In object-oriented programming, a unit is often an entire interface, such as a class, but could be an individual method.[2] Unit tests are short code fragments[3] created by programmers or occasionally by white box testers during the development process. Ideally, each test case is independent from the others.

Integration testing.

Purpose

The purpose of integration testing is to verify functional, performance, and reliability requirements placed on major design items.

These "design items", i.e. assemblages (or groups of units), are exercised through their interfaces using black box testing, success and error cases being simulated via appropriate parameter and data inputs. Simulated usage of shared data areas and inter-process communication is tested and individual subsystems are exercised through their input interface.
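The black box approach described above can be sketched with two small units combined into one assemblage and exercised only through its public interface, with one success case and one error case. `Inventory` and `OrderService` are hypothetical classes invented for this illustration.

```ruby
# Hypothetical units, each assumed to have passed its own unit tests.
class Inventory
  def initialize(stock)
    @stock = stock.dup
  end

  # Decrements the stock level, or raises if none is left.
  def reserve(item)
    raise ArgumentError, "out of stock: #{item}" unless @stock.fetch(item, 0) > 0
    @stock[item] -= 1
  end
end

class OrderService
  def initialize(inventory)
    @inventory = inventory
  end

  # Public interface of the assemblage; the test never touches Inventory directly.
  def place_order(item)
    @inventory.reserve(item)
    { status: :confirmed, item: item }
  rescue ArgumentError => e
    { status: :rejected, reason: e.message }
  end
end

service = OrderService.new(Inventory.new({ "widget" => 1 }))
first = service.place_order("widget")  # success case
second = service.place_order("widget") # error case: stock is now exhausted
puts first.inspect
puts second.inspect
```

The test checks only what `place_order` returns, so it verifies that the two units interact correctly without relying on their internals.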

Test cases are constructed to test whether all the components within assemblages interact correctly, for example across procedure calls or process activations, and this is done after testing individual modules, i.e. unit testing. The overall idea is a "building block" approach, in which verified assemblages are added to a verified base which is then used to support the integration testing of further assemblages.

Big Bang.

Top-down and Bottom-up.

System testing.

System testing of software or hardware is testing conducted on a complete, integrated system to evaluate the system's compliance with its specified requirements.

System testing falls within the scope of black box testing, and as such, should require no knowledge of the inner design of the code or logic.[1] As a rule, system testing takes, as its input, all of the "integrated" software components that have passed integration testing and also the software system itself integrated with any applicable hardware system(s). The purpose of integration testing is to detect any inconsistencies between the software units that are integrated together (called assemblages) or between any of the assemblages and the hardware.

Acceptance testing.