Openni/Contests/ROS 3D/Minority Report Interface. Description: A Minority Report-like interface that lets you drag photos around. Submitted By: Garratt Gallagher. Keywords: Minority Report Video.

Openni/Contests/ROS 3D/RGBD-6D-SLAM. Description: The Kinect is used to generate a colored 3D model of an object or a complete room.

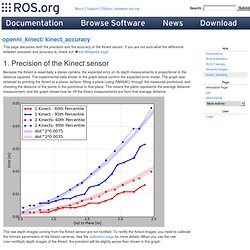

Submitted By: Felix Endres, Juergen Hess, Nikolas Engelhard, Juergen Sturm, Daniel Kuhner, Philipp Ruchti, Wolfram Burgard. Keywords: RGBD-SLAM, 3D-SURF, Feature Matching, RANSAC, Graph SLAM, Model Generation, Real-time. This page describes the software package that we submitted for the ROS 3D challenge.

Openni_kinect/kinect_accuracy. This page discusses both the precision and the accuracy of the Kinect sensor.
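As a rough illustration of the two terms for a depth sensor: accuracy is how far the average reading sits from the true distance, and precision is how tightly repeated readings cluster. The sketch below is not taken from the linked page. The function name `accuracy_and_precision` and the depth readings are hypothetical values chosen for the example, not measured Kinect data.

```python
import statistics


def accuracy_and_precision(readings, true_value):
    """Return (bias, spread) for repeated measurements of one known quantity.

    bias   -> accuracy: mean reading minus the true value (systematic offset)
    spread -> precision: sample standard deviation of the readings
    """
    mean = statistics.fmean(readings)
    bias = mean - true_value
    spread = statistics.stdev(readings)
    return bias, spread


# Hypothetical depth readings in metres for a target placed at 2.000 m.
# These numbers are made up for illustration only.
true_depth_m = 2.000
readings_m = [2.031, 2.029, 2.030, 2.032, 2.028]

bias, spread = accuracy_and_precision(readings_m, true_depth_m)
print(f"bias = {bias:+.4f} m, spread = {spread:.4f} m")
```

With these made-up numbers the readings are tightly clustered (good precision) but all sit about 3 cm beyond the true distance (poor accuracy), which is the kind of distinction the page draws.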

If you are not sure what the difference between precision and accuracy is, check out this Wikipedia page. Precision of the Kinect sensor.

OctoMap - 3D occupancy mapping. 3D Stixels Obtained from Stereo Data in a Urban Environment. 3D mapping with Kinect style depth camera. Build a 3D Scanner From A $25 Laser Level - Systm. NI Mate. How to build your own 3-D camera. Image. Features.