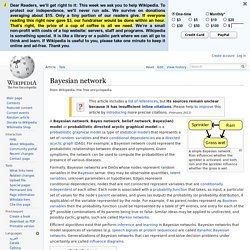

OpenHTMM Released.

Bayesian network. A simple Bayesian network: rain influences whether the sprinkler is activated, and both rain and the sprinkler influence whether the grass is wet.

Bayes' theorem. In probability theory and statistics, Bayes' theorem (alternatively Bayes' law or Bayes' rule) relates current belief to prior belief.
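As a sketch of how Bayes' theorem, $P(A \mid B) = P(B \mid A)\,P(A) / P(B)$, applies to the rain/sprinkler/grass network above, the code below computes the probability that it rained given that the grass is wet by enumerating the joint distribution and summing out the sprinkler. The conditional probability tables are illustrative assumptions, not values given in the source.

```python
from itertools import product

# Assumed, illustrative conditional probability tables (not from the source).
P_RAIN = 0.2                                        # P(Rain = True)
P_SPRINKLER_GIVEN_RAIN = {True: 0.01, False: 0.4}   # P(Sprinkler = True | Rain)
P_WET_GIVEN = {                                     # P(GrassWet = True | Sprinkler, Rain)
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}


def bernoulli(p_true: float, value: bool) -> float:
    """Probability of `value` for a binary variable with P(True) = p_true."""
    return p_true if value else 1.0 - p_true


def joint(rain: bool, sprinkler: bool, wet: bool) -> float:
    """Joint probability factorised along the network: P(R) * P(S | R) * P(W | S, R)."""
    return (bernoulli(P_RAIN, rain)
            * bernoulli(P_SPRINKLER_GIVEN_RAIN[rain], sprinkler)
            * bernoulli(P_WET_GIVEN[(sprinkler, rain)], wet))


def p_rain_given_wet() -> float:
    """P(Rain = True | GrassWet = True) = P(W, R) / P(W), summing out Sprinkler."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(joint(r, s, True) for r, s in product((True, False), repeat=2))
    return numerator / evidence


if __name__ == "__main__":
    print(f"P(rain | grass wet) = {p_rain_given_wet():.4f}")  # prints 0.3577
```

With these assumed tables, observing wet grass raises the belief in rain from the prior 0.2 to about 0.36.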

Bayes' theorem also relates current to prior evidence. It is important in the mathematical manipulation of conditional probabilities.[1] Bayes' rule can be derived from more basic axioms of probability, specifically conditional probability. When applied, the probabilities involved in Bayes' theorem may have any of a number of probability interpretations. In one of these interpretations, the theorem is used directly as part of a particular approach to statistical inference. In particular, with the Bayesian interpretation of probability, the theorem expresses how a subjective degree of belief should rationally change to account for evidence: this is Bayesian inference, which is fundamental to Bayesian statistics.

Naive Bayes classifier. A naive Bayes classifier is a simple probabilistic classifier based on applying Bayes' theorem with strong (naive) independence assumptions.
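A minimal sketch of a naive Bayes classifier over categorical features follows. The class name `NaiveBayes`, the add-one (Laplace) smoothing, and the toy fruit data are illustrative assumptions, not part of the source; the essential point is that each feature's likelihood is multiplied in independently given the class, and Bayes' theorem then turns the scores into posterior probabilities.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Categorical naive Bayes with add-one (Laplace) smoothing (hypothetical helper)."""

    def fit(self, samples, labels):
        self.class_counts = Counter(labels)
        self.total = len(labels)
        # feature_counts[label][i][value]: how often feature i took `value` within `label`
        self.feature_counts = defaultdict(lambda: defaultdict(Counter))
        self.feature_values = defaultdict(set)  # distinct values seen per feature index
        for x, y in zip(samples, labels):
            for i, v in enumerate(x):
                self.feature_counts[y][i][v] += 1
                self.feature_values[i].add(v)
        return self

    def predict_proba(self, x):
        """Return P(class | x), treating features as independent given the class."""
        log_scores = {}
        for y, n_y in self.class_counts.items():
            score = math.log(n_y / self.total)  # log prior P(class)
            for i, v in enumerate(x):
                k = len(self.feature_values[i])
                count = self.feature_counts[y][i][v]
                score += math.log((count + 1) / (n_y + k))  # smoothed log P(feature | class)
            log_scores[y] = score
        # Normalise with the log-sum-exp trick so the posteriors sum to 1.
        m = max(log_scores.values())
        z = sum(math.exp(s - m) for s in log_scores.values())
        return {y: math.exp(s - m) / z for y, s in log_scores.items()}

    def predict(self, x):
        probs = self.predict_proba(x)
        return max(probs, key=probs.get)


if __name__ == "__main__":
    # Hypothetical training data: (color, shape) -> fruit.
    samples = [("red", "round"), ("red", "round"), ("green", "round"),
               ("yellow", "long"), ("yellow", "long"), ("green", "long")]
    labels = ["apple", "apple", "apple", "banana", "banana", "banana"]
    model = NaiveBayes().fit(samples, labels)
    print(model.predict(("red", "round")))  # apple
```

Working in log space avoids underflow when many small likelihoods are multiplied; smoothing keeps an unseen feature value from forcing a class probability to zero.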

A more descriptive term for the underlying probability model would be "independent feature model". An overview of statistical classifiers is given in the article on pattern recognition.

In simple terms, a naive Bayes classifier assumes that the value of a particular feature is unrelated to the presence or absence of any other feature, given the class variable. For example, a fruit may be considered to be an apple if it is red, round, and about 3 inches in diameter. A naive Bayes classifier treats each of these features as contributing independently to the probability that the fruit is an apple, regardless of any correlations between color, roundness, and diameter. For some types of probability models, naive Bayes classifiers can be trained very efficiently in a supervised learning setting. Despite their naive design and apparently oversimplified assumptions, naive Bayes classifiers have worked quite well in many complex real-world situations.

Pattern recognition. Pattern recognition algorithms generally aim to provide a reasonable answer for all possible inputs and to perform "most likely" matching of the inputs, taking into account their statistical variation.

This is in contrast to pattern matching algorithms, which look for exact matches in the input with pre-existing patterns. A common example of a pattern matching algorithm is regular expression matching, which looks for patterns of a given sort in textual data and is included in the search capabilities of many text editors and word processors. Unlike pattern recognition, pattern matching is generally not considered a type of machine learning, although pattern matching algorithms (especially with fairly general, carefully tailored patterns) can sometimes produce output of similar quality to that of pattern recognition algorithms.
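To illustrate the exact-match behaviour of regular expressions described above, here is a small sketch using Python's standard `re` module. The pattern and the sample sentence are made up for illustration: the pattern accepts both spellings it was written for, while a near miss is simply rejected rather than matched as "most likely".

```python
import re

# The pattern admits exactly "color" or "colour" as whole words; nothing else matches.
COLOR = re.compile(r"\bcolou?r\b")

text = "The colour and the color match, but the misspelled colr does not."
print(COLOR.findall(text))  # ['colour', 'color']
```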

Probabilistic classifiers output a confidence value associated with their choice.

PlanetMath. From Wolfram MathWorld.

Examples of Markov chains. This page contains examples of Markov chains in action.

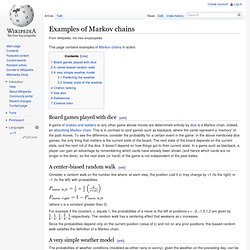

Board games played with dice. A game of snakes and ladders, or any other game whose moves are determined entirely by dice, is a Markov chain, indeed an absorbing Markov chain. This is in contrast to card games such as blackjack, where the cards represent a 'memory' of the past moves. To see the difference, consider the probability of a certain event in the game. In the dice games mentioned above, the only thing that matters is the current state of the board.

A center-biased random walk. Consider a random walk on the number line where, at each step, the position (call it x) may change by +1 (to the right) or −1 (to the left) with probabilities

$$P_{\text{left}}(x) = \frac{1}{2} + \frac{1}{2}\left(\frac{x}{c + |x|}\right), \qquad P_{\text{right}}(x) = 1 - P_{\text{left}}(x),$$

where c is a constant greater than 0. For example, if c = 1, the probabilities of a move to the left at positions x = −2, −1, 0, 1, 2 are 1/6, 1/4, 1/2, 3/4, 5/6 respectively.

Markov process. A Markov process is a stochastic model that has the Markov property.
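The center-biased random walk is itself a Markov process: each step depends only on the current position x. The sketch below simulates it, assuming the left-move probability $P_{\text{left}}(x) = \tfrac{1}{2} + \tfrac{1}{2}\,x/(c + |x|)$ from the standard form of this example; with c = 1 this gives 1/6, 1/4, 1/2, 3/4, 5/6 at x = −2, −1, 0, 1, 2. The function names `p_left` and `walk` are hypothetical.

```python
import random
from typing import List, Optional


def p_left(x: int, c: float = 1.0) -> float:
    """Probability of stepping left (-1) from position x: 1/2 + (1/2) * x / (c + |x|)."""
    return 0.5 + 0.5 * x / (c + abs(x))


def walk(steps: int, c: float = 1.0, start: int = 0, seed: Optional[int] = None) -> List[int]:
    """Simulate the walk; the next position depends only on the current one."""
    rng = random.Random(seed)
    x, path = start, [start]
    for _ in range(steps):
        x += -1 if rng.random() < p_left(x, c) else 1
        path.append(x)
    return path


if __name__ == "__main__":
    print([round(p_left(x), 4) for x in range(-2, 3)])
    print(walk(20, seed=0))
```

Because the left-move probability exceeds 1/2 whenever x > 0 and falls below 1/2 whenever x < 0, the walk is pushed back toward the origin, which is what makes it "center-biased".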

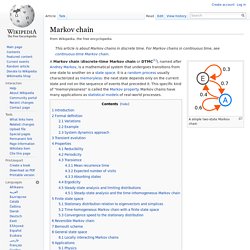

A Markov process can be used to model a random system that changes states according to a transition rule that depends only on the current state. This article describes the Markov process in a very general sense; the concept is usually specified further. In particular, the system's state space and time parameter index need to be specified.

Markov chain. A Markov chain (discrete-time Markov chain or DTMC[1]), named after Andrey Markov, is a mathematical system that undergoes transitions from one state to another on a state space.
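A two-state Markov chain can be written as a 2×2 transition matrix whose rows sum to 1. The sketch below propagates a probability distribution through the chain, approximates its stationary distribution by repeated multiplication, and samples a path. The state names and transition probabilities are hypothetical values chosen for illustration.

```python
import random

STATES = ("sunny", "rainy")  # hypothetical state names
# P[i][j] = probability of moving from state i to state j; assumed values, rows sum to 1.
P = [[0.9, 0.1],
     [0.5, 0.5]]


def step_distribution(dist, P):
    """One step of the chain: new_j = sum_i dist_i * P[i][j]."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def stationary(P, iters=1000):
    """Approximate the stationary distribution by power iteration from state 0."""
    dist = [1.0] + [0.0] * (len(P) - 1)
    for _ in range(iters):
        dist = step_distribution(dist, P)
    return dist


def sample_path(P, start, steps, seed=None):
    """Sample a sequence of states; each draw uses only the current state's row of P."""
    rng = random.Random(seed)
    state, path = start, [start]
    for _ in range(steps):
        state = rng.choices(range(len(P)), weights=P[state])[0]
        path.append(state)
    return path


if __name__ == "__main__":
    print(stationary(P))  # approximately [0.8333, 0.1667], i.e. (5/6, 1/6)
    print([STATES[s] for s in sample_path(P, start=0, steps=10, seed=1)])
```

For this matrix the stationary distribution solves $\pi_0 = 0.9\,\pi_0 + 0.5\,\pi_1$ with $\pi_0 + \pi_1 = 1$, giving $\pi = (5/6, 1/6)$.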

A Markov chain is a random process usually characterized as memoryless: the next state depends only on the current state and not on the sequence of events that preceded it. This specific kind of "memorylessness" is called the Markov property.

Machine Intelligence Laboratory - Speech Group. The Speech Research Group is part of the Machine Intelligence Laboratory.

Its mission is to advance our knowledge of computer-based spoken language processing and to develop effective algorithms for implementing applications. Its primary specialism is large vocabulary speech transcription and related technologies. It also has active research interests in spoken dialogue systems, multimedia document retrieval, statistical machine translation, speech synthesis and machine learning.

Research areas. The research topic areas covered by the Speech Research Group include:

- acoustic modelling using statistical models
- basic research in machine learning
- dialogue optimisation using reinforcement learning
- large vocabulary recognition
- multimedia document retrieval
- portability in speech recognition
- speaker and environment adaptation
- spoken dialogue systems and VoiceXML
- statistical language modelling
- statistical machine translation
- transcription of found speech