Using a Graph Database for Deep Learning Text Classification. Graphify is a Neo4j unmanaged extension that provides plug and play natural language text classification.

Graphify gives you a mechanism to train natural language parsing models that extract features of a text using deep learning. When training a model to recognize the meaning of a text, you can send an article of text with a provided set of labels that describe the nature of the text. Over time the natural language parsing model in Neo4j will grow to identify those features that optimally disambiguate a text to a set of classes.
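The train-with-labels, then classify workflow described above can be sketched in a few lines of Python. This is a hypothetical illustration, not Graphify's API: the class `LabelVectorClassifier`, its `train` and `classify` methods, and the sample texts are all assumptions. It also swaps in plain word counts compared by cosine similarity for the learned feature hierarchy, so it shows only the general shape of the idea.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase a text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LabelVectorClassifier:
    """Toy classifier (hypothetical): one term-count vector per label."""

    def __init__(self):
        self.label_vectors = defaultdict(Counter)

    def train(self, text, labels):
        # Add the text's word counts to the vector of every label it carries.
        tokens = tokenize(text)
        for label in labels:
            self.label_vectors[label].update(tokens)

    def classify(self, text):
        # Rank all known labels by similarity to the text, best first.
        query = Counter(tokenize(text))
        scores = {
            label: cosine(query, vector)
            for label, vector in self.label_vectors.items()
        }
        return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


clf = LabelVectorClassifier()
clf.train("the team won the match", ["sports", "news"])
clf.train("the market fell on weak earnings", ["finance", "news"])

ranked = clf.classify("earnings fell again")
print(ranked[0][0])  # top-ranked label: finance
```

Each training call adds evidence to every label attached to the text, so labels that share texts (here `news`) end up with broader, less specific vectors and rank below the more specific label.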

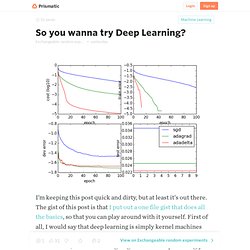

The feature hierarchy is generated probabilistically as a result of a statistical analysis of the words neighboring a feature. By doing this it becomes possible to recognize a large set of features in test data by eliminating possibilities at each layer. The lowest level representation of a feature is closest to the root pattern. Vector Space Model.

So you wanna try Deep Learning? I’m keeping this post quick and dirty, but at least it’s out there.

The gist of this post is that I put out a one-file gist that does all the basics, so that you can play around with it yourself. First of all, I would say that deep learning is simply kernel machines whose kernel we learn. That’s gross but that’s not totally false.

A Gentle Introduction to Backpropagation. Why is this blog being written?

Neural networks have always fascinated me ever since I became aware of them in the 1990s. They are often represented with a hypnotizing array of connections. In the last decade, deep neural networks have dominated pattern recognition, often replacing other algorithms for applications like computer vision and voice recognition. At least in specialized tasks, they indeed come close to mimicking the miraculous feats of cognition our brains are capable of.

Richard Socher - Deep Learning Tutorial. Slides: updated version of the tutorial given at NAACL 2013. Videos: a high-quality video of the 2013 NAACL tutorial is available, and a version of the 2012 ACL tutorial is on YouTube.

Machine Learning. Course description: In this course, you'll learn about some of the most widely used and successful machine learning techniques.

You'll have the opportunity to implement these algorithms yourself, and gain practice with them. You will also learn some practical hands-on tricks and techniques (rarely discussed in textbooks) that help get learning algorithms to work well. This is an "applied" machine learning class, and we emphasize the intuitions and know-how needed to get learning algorithms to work in practice, rather than the mathematical derivations. Familiarity with programming, basic linear algebra (matrices, vectors, matrix-vector multiplication), and basic probability (random variables, basic properties of probability) is assumed.

Homepages.inf.ed.ac.uk/vlavrenk/iaml.html.

Introducing PredictionIO. PredictionIO is an open source machine learning server for software developers to create predictive features, such as personalization, recommendation and content discovery.

Building a production-grade engine to predict users’ preferences and personalize content for them used to be time-consuming. Not anymore with PredictionIO’s latest v0.7 release. We are going to show you how PredictionIO streamlines the data process and makes it friendly for developers and production deployment. A movie recommendation case will be used for illustration. We want to offer “Top 10 Personalized Movie Recommendation” for each user. Prerequisite: First, let’s explain a few terms we use in PredictionIO. Apps: Apps in PredictionIO are not apps with program code. Engines: Engines are logical identities that an external application can interact with via the API.

Learning From Data - Online Course (MOOC). A real Caltech course, not a watered-down version on YouTube & iTunes. Free, introductory Machine Learning online course (MOOC). Taught by Caltech Professor Yaser Abu-Mostafa. Lectures recorded from a live broadcast, including Q&A. Prerequisites: basic probability, matrices, and calculus. 8 homework sets and a final exam. Discussion forum for participants. Topic-by-topic video library for easy review. Outline: This is an introductory course in machine learning (ML) that covers the basic theory, algorithms, and applications.

ML is a key technology in Big Data, and in many financial, medical, commercial, and scientific applications.

The Neural Representation Benchmark and its Evaluation on Brain and Machine.

Index of /~welling/teaching/ICS273Afall11.

Learning From Data MOOC - The Lectures. Taught by Feynman Prize winner Professor Yaser Abu-Mostafa.

The fundamental concepts and techniques are explained in detail. The focus of the lectures is real understanding, not just "knowing." Lectures use incremental viewgraphs (2853 in total) to simulate the pace of blackboard teaching. The 18 lectures are available on different platforms: as a playlist on YouTube and in the iTunes U course app.

The Learning Problem - Introduction; supervised, unsupervised, and reinforcement learning.

Machine Learning Online Courses.

Www.mohakshah.com/tutorials/icml2012/Tutorial-ICML2012/Tutorial_at_ICML_2012_files/ICML2012-Tutorial.pdf.

Machine Learning Demos.

Intro. to Statistical Machine Learning.

Statistical Data Mining Tutorials. The following links point to a set of tutorials on many aspects of statistical data mining, including the foundations of probability, the foundations of statistical data analysis, and most of the classic machine learning and data mining algorithms.

These include classification algorithms such as decision trees, neural nets, Bayesian classifiers, Support Vector Machines and case-based (aka non-parametric) learning. They include regression algorithms such as multivariate polynomial regression, MARS, Locally Weighted Regression, GMDH and neural nets.

Hunch. Part I slides (Powerpoint): Introduction. Part II.a slides (Powerpoint): Tree Ensembles. Part II.b slides (Powerpoint): Graphical models. Part III slides: Summary + GPU learning + Terascale linear learning. This tutorial gives a broad view of modern approaches for scaling up machine learning and data mining methods on parallel/distributed platforms.

Demand for scaling up machine learning is task-specific: for some tasks it is driven by the enormous dataset sizes, for others by model complexity or by the requirement for real-time prediction. The tutorial is based on (but not limited to) the material from our upcoming Cambridge U.

ML:Useful Resources - Coursera.

Books Tutorials and Talks.

Introducing Apache Mahout. Scalable, commercial-friendly machine learning for building intelligent applications. Grant Ingersoll. Published on September 08, 2009. Increasingly, the success of companies and individuals in the information age depends on how quickly and efficiently they turn vast amounts of data into actionable information. Whether it's for processing hundreds or thousands of personal e-mail messages a day or divining user intent from petabytes of weblogs, the need for tools that can organize and enhance data has never been greater.

Therein lies the premise and the promise of the field of machine learning and the project this article introduces: Apache Mahout.

UFLDL Tutorial - Ufldl.