Proxmox Ceph cluster: migrating from 4.4 to 5.0. A memo on how to upgrade Proxmox from version 4.4 to 5.0, the latest stable release available to date.

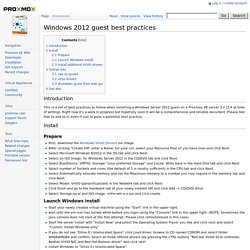

Note that the upgrade in this article is slightly more complex than a simple version bump on a single Proxmox server, because in my case it is a high-availability cluster running Ceph. The first step is to upgrade Ceph from Jewel to Luminous. The migration from Proxmox 4.4 to 5.0 is then carried out on each node of the cluster, one after the other, with no service interruption. Qemu-guest-agent. Windows 2012 guest best practices. Introduction This is a set of best practices to follow when installing a Windows Server 2012 guest on a Proxmox VE server 3.x (3.4 at time of writing).
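For the Proxmox 4.4 to 5.0 cluster migration described above, the ordering of the work can be sketched as a small planning function. This is only an illustration of the sequence the memo states (Ceph Jewel to Luminous first, then Proxmox node by node). The function name and the node names `pve1`, `pve2`, `pve3` are hypothetical. Applying the Ceph step to every node before any Proxmox step is an assumption, and the sketch runs no upgrade commands.

```python
from typing import List


def rolling_upgrade_plan(nodes: List[str]) -> List[str]:
    """Return the ordered upgrade steps for a cluster, one node at a time.

    Assumption: Ceph is upgraded on every node before any node moves
    to Proxmox VE 5.0. Node names are purely illustrative.
    """
    if not nodes:
        raise ValueError("the cluster needs at least one node")
    # Phase 1: Ceph Jewel -> Luminous, node by node.
    plan = [f"{node}: upgrade Ceph Jewel -> Luminous" for node in nodes]
    # Phase 2: Proxmox VE 4.4 -> 5.0, node by node, so the remaining
    # nodes keep serving while one is being upgraded.
    plan += [f"{node}: upgrade Proxmox VE 4.4 -> 5.0" for node in nodes]
    return plan


if __name__ == "__main__":
    steps = rolling_upgrade_plan(["pve1", "pve2", "pve3"])
    for number, step in enumerate(steps, start=1):
        print(f"{number}. {step}")
```

Keeping the plan as plain data makes it easy to review before anything is touched on a live cluster.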

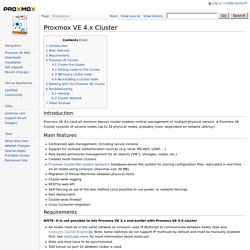

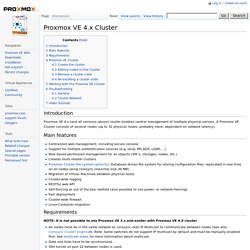

Right now it's a work in progress but hopefully soon it will be a comprehensive and reliable document. Please feel free to add to it, even if just to pose a potential best practice. Getting started - pve-monitor. Netboot.xyz. Proxmox VE 4.x Cluster. Introduction Proxmox VE 4.x (and all versions above) cluster enables central management of multiple physical servers.

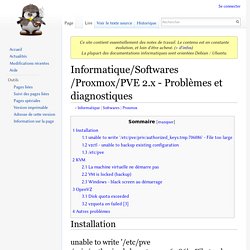

A Proxmox VE Cluster consists of several nodes (up to 32 physical nodes, probably more, dependent on network latency). Main features. Informatique/Softwares/Proxmox/PVE 2.x - Problèmes et diagnostiques — Ordinoscope.net. Unable to write '/etc/pve/priv/authorized_keys.tmp.706086' - File too large.

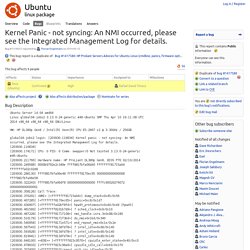

Untitled. Bug #1318551 “Kernel Panic - not syncing: An NMI occurred, pleas...” : Bugs : linux package. Ubuntu Server 14.04 amd64 Linux global04-jobs2 3.13.0-24-generic #46-Ubuntu SMP Thu Apr 10 19:11:08 UTC 2014 x86_64 x86_64 x86_64 GNU/Linux.

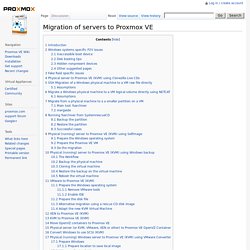

Migration of servers to Proxmox VE. Introduction You can migrate existing servers to Proxmox VE.

Moving Linux servers is always quite easy so you will not find much hints for troubleshooting here. Windows systems specific P2V issues.

Persistently bridge traffic between two or more Ethernet interfaces (Debian) Objective.

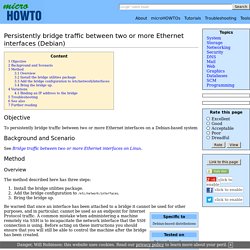

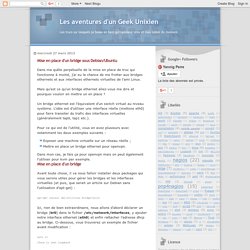

MicroHOWTO: Persistently bridge traffic between two or more Ethernet interfaces (Debian). Les aventures d'un Geek Unixien: Setting up a bridge on Debian/Ubuntu. In my never-ending quest to set up things that only half work, I had the chance to get to grips with Ethernet bridges and the virtual Ethernet interfaces of our friend Linux.

But what is an Ethernet bridge, you may ask, and why would you want to set one up? An Ethernet bridge is the system-level equivalent of a virtual switch. The idea is to use a real interface (say eth0) to carry the traffic of virtual interfaces (typically tap0, tap1, etc.). As for what it is good for, there are several uses, notably these two: exposing a virtual machine on a real network, and setting up an Ethernet bridge for OpenVPN. In my case I am doing this for OpenVPN, but it can also be used with KVM, for example.
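As a rough illustration of the bridge described above, the sketch below renders a stanza for Debian's `/etc/network/interfaces`. It assumes the `bridge-utils` package is installed, since that package supplies the `bridge_ports` option to ifupdown. The bridge name `br0`, the DHCP method, and the helper name `render_bridge_stanza` are illustrative choices, not taken from the original posts.

```python
from typing import List


def render_bridge_stanza(bridge: str, ports: List[str], method: str = "dhcp") -> str:
    """Render an /etc/network/interfaces stanza for an Ethernet bridge.

    Assumes ifupdown with bridge-utils, which provides `bridge_ports`.
    Names such as br0 and eth0 are placeholders.
    """
    if not ports:
        raise ValueError("a bridge needs at least one port")
    lines = [
        f"auto {bridge}",                         # bring the bridge up at boot
        f"iface {bridge} inet {method}",          # the bridge carries the IP config
        f"    bridge_ports {' '.join(ports)}",    # interfaces enslaved to the bridge
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # eth0 is the real interface; tap interfaces are typically attached
    # later by whatever creates them (OpenVPN, KVM).
    print(render_bridge_stanza("br0", ["eth0"]), end="")
```

The function only builds text and does not touch the system, so the result can be reviewed before being written to the configuration file.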

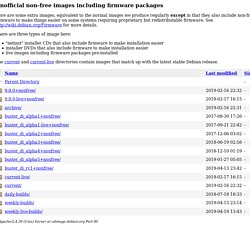

Index of /cdimage/unofficial/non-free/cd-including-firmware. Here are some extra images, equivalent to the normal images we produce regularly except in that they also include non-free firmware to make things easier on some systems requiring proprietary but redistributable firmware.

See for more details. There are three types of image here: "netinst" installer CDs that also include firmware to make installation easier; installer DVDs that also include firmware to make installation easier; and live images including firmware packages pre-installed. Images for unrecognized firmware - wiki.debian-fr. Debian images for unrecognized firmware. Missing firmware in Debian? Learn how to deal with the problem. You know it already: since Debian 6.0, non-free firmware is no longer provided by a standard Debian installation. This causes trouble for users who need it.

I’m thus going to do a small overview on the topic and teach you what you need to know to deal with the problem. What are firmware and how are they used? From the user’s point of view, a firmware is just some data that is needed by some piece of hardware in order to function properly. Storage Model. Proxmox VE is using a very flexible storage model. Virtual machine images can be stored on local storage (and more than one local storage type is supported) as well as on shared storage like NFS and on SAN (e.g. using iSCSI). All storage definitions are synchronized throughout the Proxmox VE 2.0 cluster, therefore it's just a matter of minutes before a SAN configuration is usable on all Proxmox VE 2.0 cluster nodes.

You may configure as many storage definitions as you like! One major benefit of storing VMs on shared storage is the ability to live-migrate running machines without any downtime, as all nodes in the cluster have direct access to VM disk images. Comparison of distributed file systems: HDFS – GlusterFS – Ceph. November 25, 2014, by Ludovic Houdayer. This comparison covers both features and read and write performance. The tests were not carried out by me but by various external sources (I do not have enough hardware). GlusterFS or Ceph: Who Will Win the Open Source Cloud Storage Wars? The open source cloud storage wars are here, and show no sign of stopping soon, as GlusterFS and Ceph vie to become the distributed scale-out storage software of choice for OpenStack.

The latest volley was fired this month by Red Hat (RHT), which commissioned a benchmarking test that reports more than 300 percent better performance with GlusterFS-based storage. Actually, it might be most precise to describe the GlusterFS-Ceph competition not just as a war, but as a proxy war. In many ways, the real fight is not between the two storage platforms themselves, but their respective, much larger backers: Red Hat, which strongly supports GlusterFS development, and Canonical (the company behind Ubuntu Linux), which has placed its bets on Ceph. (Ceph itself is directly sponsored by Inktank, which has a close relationship with Canonical, and in which Ubuntu founder Mark Shuttleworth has invested $1 million of his own money.) But these details don't really matter from the channel perspective. Gluster Vs. Ceph: Open Source Storage Goes Head-To-Head. Storage appliances using open-source Ceph and Gluster offer similar advantages with great cost benefits. Which is faster and easier to use?

Open-source Ceph and Red Hat Gluster are mature technologies, but will soon experience a kind of rebirth. GlusterFS 3.2: geo-replication. Introduction to storage virtualization. As the service evolves, it becomes increasingly difficult to manage storage and its many changes, in terms of both capacity and devices. Networked storage virtualization provides a uniform view of heterogeneous resources (disk arrays / NAS) spread across a SAN.

It provides useful copy and replication features that are independent of the storage devices used and of their manufacturer. Concept The best definition of virtualization is probably the abstraction of logical storage from physical storage. Storage virtualization is achieved by isolating data from its physical location. What is storage virtualization? - Definition from WhatIs.com. Desktop Virtualization (VDI) VDI environments built on top of conventional frame-based enterprise arrays, with performance-throttling SAN controllers, are unsuited for VDI environments.

These enterprise arrays simply can’t respond to the read/write demand during boot storms, which occur in many VDI environments multiple times a day: in educational environments, as often as once an hour. Boot times, for any given desktop, balloon: ten, twenty, even thirty minutes to complete a boot cycle for a few dozen, or a few hundred, virtualized desktops. Performance so poor that users find their working environment suddenly unworkable. The solution, as far as conventional enterprise array vendors are concerned, is: more storage. Racks and racks of it. Experienced VDI practitioners know that traditional enterprise storage arrays don’t work for VDI implementations. Storage virtualization: federating volumes into a single resource. 01net, 26/08/02, 08:00. Virtualization consists of hiding the disparity of storage resources and presenting them as a single homogeneous logical volume. Synology network-attached storage (NAS) server.
