What is the Confluent Platform? (Confluent Platform 1.0 documentation)

The Confluent Platform is a stream data platform that enables you to organize and manage the massive amounts of data that arrive every second at the doorstep of a wide array of modern organizations in various industries, from retail, logistics, manufacturing, and financial services to online social networking.

With Confluent, this growing barrage of data, often unstructured but nevertheless incredibly valuable, becomes easily accessible through a unified stream data platform that is always readily available for many uses throughout your entire organization. These uses can range from enabling batch Big Data analysis with Hadoop and feeding real-time monitoring systems to more traditional large-volume data integration tasks that require a high-throughput, industrial-strength extraction, transformation, and load (ETL) backbone. At its core, the Confluent Platform leverages Apache Kafka, a proven open source technology created by the founders of Confluent while at LinkedIn.

Configuration

Kafka uses the property file format for configuration.

These can be supplied either from a file or programmatically. Some configurations have both a default global setting and a topic-level override. The topic-level properties use a CSV format (e.g., "xyz.per.topic=topic1:value1,topic2:value2") and override the default value for the specified topics.

3.1 Broker Configs

[Kafka-users] Producer.send questions

So, can QueueFullException occur in either sync or async mode (or just async mode)?


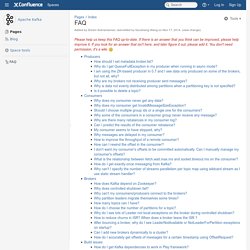

QueueFullException can only occur in async mode, since there is no queue in sync mode.

If there's a MessageSizeTooLargeException, is there any visibility of this to the caller?

The Kafka producer should not retry on unrecoverable exceptions. I gathered from one of your previous responses that a MessageSizeTooLargeException…

FAQ - Apache Kafka

Please help us keep this FAQ up-to-date.
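The mailing-list answer above ties QueueFullException to async mode: only the async producer buffers messages in a client-side queue, so only it can run out of room when messages arrive faster than they can be sent. The Python sketch below models that difference under simplifying assumptions; the class names, `QueueFullError`, and `max_buffered` are hypothetical and are not the Kafka producer API.

```python
import queue


class QueueFullError(Exception):
    """Hypothetical stand-in for Kafka's QueueFullException."""


class SyncProducer:
    """Hands each message straight to the broker; there is no client-side queue."""

    def __init__(self, send_to_broker):
        # Hypothetical callable standing in for the blocking network send.
        self._send = send_to_broker

    def send(self, message):
        self._send(message)  # returns only after the (simulated) broker call does


class AsyncProducer:
    """Buffers messages in a bounded queue that a background sender would drain."""

    def __init__(self, max_buffered):
        self._queue = queue.Queue(maxsize=max_buffered)

    def send(self, message):
        try:
            # Non-blocking enqueue: fail fast instead of waiting for space.
            self._queue.put_nowait(message)
        except queue.Full:
            raise QueueFullError(
                f"buffer of {self._queue.maxsize} messages is full"
            ) from None

    def drain_one(self):
        """Simulates the background sender taking one message for the broker."""
        return self._queue.get_nowait()


if __name__ == "__main__":
    producer = AsyncProducer(max_buffered=2)
    producer.send("a")
    producer.send("b")
    try:
        producer.send("c")  # the sender has not drained anything yet
    except QueueFullError as err:
        print("async send failed:", err)
    producer.drain_one()
    producer.send("c")  # succeeds once a slot is free
```

In this sketch the async producer fails fast when its buffer is full; a real client could instead block or wait up to a timeout, a design choice the sketch does not model.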

If there is an answer that you think can be improved, please help improve it. If you are looking for an answer that isn't here and later figure it out, please add it. You don't need permission; it's a wiki.

Producers

The broker list provided to the producer is only used for fetching metadata.

Why do I get QueueFullException in my producer when running in async mode?

This typically happens when the producer is trying to send messages faster than the broker can handle.

I am using the ZK-based producer in 0.7 and I see data produced only on some of the brokers, but not all. Why?

This is related to an issue in Kafka 0.7.x (see the discussion in …). Basically, for a new topic, the producer bootstraps using all existing brokers.

Why are my brokers not receiving producer-sent messages?

Ops

Here is some information on actually running Kafka as a production system, based on usage and experience at LinkedIn.

Please send us any additional tips you know of.

6.1 Basic Kafka Operations

This section reviews the most common operations you will perform on your Kafka cluster. All of the tools reviewed in this section are available under the bin/ directory of the Kafka distribution, and each tool prints details on all possible command-line options if it is run with no arguments.

Apache Kafka. Common Kafka Addons

Apache Kafka is one of the most popular choices when choosing a durable, high-throughput messaging system.

Kafka's protocol doesn't conform to any queue-agnostic standard protocol (e.g., AMQP), and it provides concepts and semantics that are similar to, but still different from, those of other queuing systems. In this post I will cover some common Kafka tools and add-ons that you should consider employing when using Kafka as part of your system design.

Data mirroring

Most large-scale production systems are deployed across multiple data centers (or availability zones/regions in the cloud), either to avoid a SPOF (single point of failure) when a whole data center goes down, or to reduce latency by serving customers from systems closer to their geographic locations.

Apache Kafka 0.8 training deck and tutorial

Intra-cluster Replication in Apache Kafka

Kafka is a distributed publish-subscribe messaging system.

It was originally developed at LinkedIn and became an Apache project in July 2011. Today, Kafka is used by LinkedIn, Twitter, and Square for applications including log aggregation, queuing, and real-time monitoring and event processing. In the upcoming 0.8 release, Kafka will support intra-cluster replication, which increases both the availability and the durability of the system. In the following post, I will give an overview of Kafka's replication design.
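The post above only announces the replication design, so what follows is a simplified sketch of the widely described 0.8 model rather than the post's own text: each partition has a leader and a set of in-sync replicas (ISR), and a message counts as committed once every ISR member has written it. The committed boundary (the high watermark) is then the smallest log end offset in the ISR. `Partition`, `shrink_isr`, and the broker ids are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Partition:
    """Simplified model of one replicated partition (illustrative only)."""

    leader: str
    # Replica id -> log end offset (number of messages that replica has written).
    log_end_offsets: dict
    # In-sync replicas, including the leader.
    isr: set = field(default_factory=set)

    def high_watermark(self):
        # A message is committed only when every in-sync replica has it,
        # so the committed prefix ends at the slowest ISR member.
        return min(self.log_end_offsets[r] for r in self.isr)

    def shrink_isr(self, replica):
        # A follower that lags too far is dropped from the ISR so the
        # high watermark can advance without waiting for it.
        if replica != self.leader:
            self.isr.discard(replica)


if __name__ == "__main__":
    p = Partition("b1", {"b1": 10, "b2": 8, "b3": 4}, {"b1", "b2", "b3"})
    print(p.high_watermark())  # prints 4
    p.shrink_isr("b3")
    print(p.high_watermark())  # prints 8
```

Dropping a lagging follower is what lets the leader keep committing; the trade-off is that the dropped replica no longer counts toward durability until it catches up.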

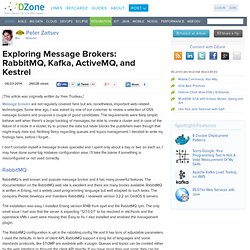

Exploring Message Brokers: RabbitMQ, Kafka, ActiveMQ, and Kestrel

[This article was originally written by Yves Trudeau.]

Message brokers are not regularly covered here but are, nonetheless, important web-related technologies. Some time ago, I was asked by one of our customers to review a selection of OSS message brokers and propose a couple of good candidates. The requirements were fairly simple: behave well when there’s a large backlog of messages, be able to form a cluster, and, if a node in the cluster fails, try to protect the data but never block the publishers, even if that means losing data. Nothing fancy regarding queue and topic management. I decided to write my findings here, before I forget…

Running a Multi-Broker Apache Kafka 0.8 Cluster on a Single Node

In this article I describe how to install, configure and run a multi-broker Apache Kafka 0.8 (trunk) cluster on a single machine.

The final setup consists of one local ZooKeeper instance and three local Kafka brokers. We will test-drive the setup by sending messages to the cluster via a console producer and receiving those messages via a console consumer. I will also describe how to build Kafka for Scala 2.9.2, which makes it much easier to integrate Kafka with other Scala-based frameworks and tools that require Scala 2.9 instead of Kafka’s default Scala 2.8.

Update Mar 2014: I have released Wirbelsturm, a Vagrant- and Puppet-based tool to perform 1-click local and remote deployments, with a focus on big data infrastructure such as Apache Kafka and Apache Storm. Thanks to Wirbelsturm, you don’t need to follow this tutorial to manually install and configure a Kafka cluster.

Why Loggly loves Apache Kafka: Infinitely scalable messaging makes Log Management better

If you’re in the business of cloud-based log management, every aspect of your service needs to be designed for reliability and scale.

Here’s what Loggly faces, daily:

- A massive stream of incoming events with bursts reaching 100,000+ events per second and lasting several hours
- The need for a “no log left behind” policy: every log has the potential to be the critical one, and our customers can’t afford for us to drop a single one
- Operational troubleshooting use cases that demand near real-time indexing and time series index management

At Loggly, our growth has been both amazing and challenging. We aim to be the world’s most popular cloud-based log management service, but we also want to be a great neighbor. As such, we’re committed to open source technology and to giving back to the community.
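A back-of-the-envelope calculation gives a feel for the scale of the bursts Loggly describes. Only the 100,000 events/s rate comes from the text; the 3-hour duration and the 1,000-byte average event size are hypothetical assumptions chosen for illustration.

```python
SECONDS_PER_HOUR = 3600


def burst_volume(events_per_second, hours, avg_event_bytes):
    """Return (total events, total bytes) for a burst sustained over `hours`."""
    seconds = hours * SECONDS_PER_HOUR
    events = events_per_second * seconds
    return events, events * avg_event_bytes


# 100,000 events/s is from the text; 3 hours and 1,000 bytes/event are
# hypothetical assumptions.
events, total_bytes = burst_volume(100_000, 3, 1_000)
print(f"{events:,} events, {total_bytes / 1e9:,.0f} GB")
# prints: 1,080,000,000 events, 1,080 GB
```

Even under these modest assumptions, a single burst comes to roughly a terabyte, which suggests why a disk-backed, replayable log is attractive for this workload.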

Setup your own Apache Kafka cluster with Vagrant - Tutorial

Apache Kafka is a distributed publish-subscribe messaging system that aims to be fast, scalable, and durable. If you want to just get up and running quickly with a cluster of Vagrant virtual machines configured with Kafka, take a look at this awesome blog post. It sets up all the VMs for you and configures each node in the cluster, in one fell swoop.

However, if you want to learn how to install and configure a Kafka cluster yourself, utilizing your own Vagrant boxes, then read on.

Apache Kafka – Publish-subscribe messaging rethought as a distributed commit log

Kafka is focused more on throughput than on latency: if latency is critical, you should use a database rather than a message queue (maybe with some caveats, e.g., for finance, telcos, or air traffic control; TIBCO et al. cover that market well, however). Kafka's use of the JVM does not really impact throughput. Only a small portion of the messages' lifecycle is spent in the JVM heap; Kafka makes aggressive use of the OS page cache and avoids copies (e.g., using sendfile() whenever possible).
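The comment above credits part of Kafka's throughput to serving data from the OS page cache with sendfile(). The Python sketch below shows the same zero-copy idea at small scale: `socket.sendfile()` hands a file range to the kernel (via `os.sendfile()` where the platform supports it) instead of reading it into a user-space buffer first. `send_segment` and the demo file contents are hypothetical; this is not how Kafka's own JVM code is written.

```python
import os
import socket
import tempfile


def send_segment(sock, path, offset=0, count=None):
    """Send `count` bytes of the file at `path`, starting at `offset`, to `sock`.

    socket.sendfile() uses os.sendfile() when the platform supports it, so the
    kernel moves the bytes from the page cache to the socket without copying
    them through a user-space buffer; otherwise it falls back to send().
    Returns the number of bytes sent.
    """
    with open(path, "rb") as segment:
        return sock.sendfile(segment, offset=offset, count=count)


if __name__ == "__main__":
    # Demo: a stand-in "log segment" streamed over a local socket pair.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"hello kafka segment")
    left, right = socket.socketpair()
    try:
        sent = send_segment(left, tmp.name, offset=6, count=5)
        print(sent, right.recv(sent))  # prints: 5 b'kafka'
    finally:
        left.close()
        right.close()
        os.unlink(tmp.name)
```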

I agree that if Kafka had to make heavy use of in-process memory (which is appropriate for databases) rather than OS-managed buffers, then a language without a garbage collector (or perhaps a garbage-collected language that gave you the option not to generate garbage in the first place...) would help. As for the "slow compilation times" feature, scalac and sbt do a much better job of it than cmake and g++/clang :-)

Apache Kafka: Next Generation Distributed Messaging System

Introduction

Apache Kafka is a distributed publish-subscribe messaging system. It was originally developed at LinkedIn Corporation and later became an Apache project. Kafka is a fast, scalable, partitioned and replicated commit log service that is distributed by design.

Apache Kafka differs from traditional messaging systems in that it is designed as a distributed system that is very easy to scale out.

[Kafka-users] Arguments for Kafka over RabbitMQ?

Thanks so much for your replies. This has been a great help in understanding Rabbit better, as I have very little experience with it. I have a few follow-up comments below. While you are correct that the payload is a much bigger concern, managing the metadata and acks centrally on the broker across multiple clients at scale is also a concern. This would seem to be exacerbated if you have consumers at different speeds, e.g., Storm and Hadoop consuming the same topic. In that scenario, say Storm consumes the topic messages in real time and Hadoop consumes once a day.
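The scenario above (Storm reading a topic in real time, Hadoop once a day) is where Kafka's log model differs from a broker that tracks acknowledgements per message: the broker keeps messages in an append-only log, and each consumer group only remembers its own offset into it. The toy `TopicLog` below is a hypothetical in-memory sketch of that idea; unlike real Kafka, it never deletes old messages (Kafka expires them according to a retention policy).

```python
class TopicLog:
    """Toy append-only topic; each consumer group keeps its own read offset."""

    def __init__(self):
        self._messages = []  # never trimmed here; real Kafka applies retention
        self._offsets = {}   # consumer group -> offset of the next unread message

    def append(self, message):
        """Append a message and return the offset assigned to it."""
        self._messages.append(message)
        return len(self._messages) - 1

    def poll(self, group, max_messages=None):
        """Return unread messages for `group`, advancing only that group's offset."""
        start = self._offsets.get(group, 0)
        end = len(self._messages)
        if max_messages is not None:
            end = min(end, start + max_messages)
        self._offsets[group] = end
        return self._messages[start:end]


if __name__ == "__main__":
    log = TopicLog()
    for hour in range(24):
        log.append(f"event-{hour}")
        log.poll("storm")  # real-time reader keeps up continuously
    print(len(log.poll("hadoop")))  # prints 24: the daily reader gets the whole day
```

Because the two groups never share a cursor, the slow daily reader holds nothing back for the real-time reader; its main cost is retaining enough of the log on disk to cover a day.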

To allow Hadoop to consume once a day, Rabbit obviously can’t keep 100s of GBs in memory and will need to persist this data to its internal DB to be retrieved later.

Scalable Real Time State Updates with Storm

In this post, I illustrate how to maintain the current state of a real-time, event-driven process in a DB in a scalable and lock-free manner, thanks to the Storm framework.

(Note: this page contains the tutorial as I initially posted it. I sometimes make minor updates to this Storm state management tutorial on my blog that are not necessarily reflected here.) Storm is an event-based data processing engine.

Implementing Real-Time Trending Topics with a Distributed Rolling Count Algorithm in Storm

Real-time Stream Processing and Visualization Using Kafka, Storm, and d3.js

How We Selected Apache Kafka on our Path to Real-time Data Ingestion - Engineering Rich Relevance Blog

10/29/2013 • Topics: Big data, Kafka, Programming by Murtaza Doctor.
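The trending-topics title above refers to a rolling count, i.e. counting occurrences per key over a sliding time window. One common way to do this is a ring of per-slot counters advanced once per time slot. The sketch below shows only that single-process counting core under that assumption; it is not taken from the post, and the distributed parts (partitioning keys across Storm tasks and merging partial rankings) are not shown. `SlidingWindowCounter` and its methods are hypothetical.

```python
from collections import defaultdict


class SlidingWindowCounter:
    """Per-key counts over the last `num_slots` time slots (e.g. one slot per minute)."""

    def __init__(self, num_slots):
        self._num_slots = num_slots
        # key -> ring buffer holding one count per slot
        self._counts = defaultdict(lambda: [0] * num_slots)
        self._head = 0  # index of the slot currently receiving increments

    def increment(self, key):
        self._counts[key][self._head] += 1

    def totals(self):
        """Current windowed count for every key."""
        return {key: sum(slots) for key, slots in self._counts.items()}

    def advance(self):
        """Move to the next slot, discarding the oldest slot's counts."""
        self._head = (self._head + 1) % self._num_slots
        for key in list(self._counts):
            self._counts[key][self._head] = 0
            if not any(self._counts[key]):
                del self._counts[key]  # forget keys that left the window

    def top(self, n):
        """The n keys with the highest windowed counts (ties broken by key)."""
        return sorted(self.totals().items(), key=lambda kv: (-kv[1], kv[0]))[:n]


if __name__ == "__main__":
    counter = SlidingWindowCounter(num_slots=2)
    for word in ["kafka", "kafka", "storm"]:
        counter.increment(word)
    print(counter.top(2))  # prints [('kafka', 2), ('storm', 1)]
```

Calling `advance()` once per slot (for example from a timer tick) is what makes the window slide: counts older than `num_slots` slots are zeroed out and stop contributing to the ranking.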