Launch a Cluster Using the Command Line - AWS Data Pipeline

If you regularly run an Amazon EMR cluster to analyze web logs or perform analysis of scientific data, you can use AWS Data Pipeline to manage your Amazon EMR clusters.

With AWS Data Pipeline, you can specify preconditions that must be met before the cluster is launched (for example, ensuring that today's data has been uploaded to Amazon S3). This tutorial walks you through launching a cluster that can serve as a model for a simple Amazon EMR-based pipeline or as part of a more involved pipeline.

Creating the Pipeline Definition File

The following code is the pipeline definition file for a simple Amazon EMR cluster that runs an existing Hadoop streaming job provided by Amazon EMR. This sample application is called WordCount, and you can also run it using the Amazon EMR console.
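As a rough sketch of what a definition of this kind looks like, assuming the usual AWS Data Pipeline JSON layout (a top-level `objects` list whose entries point at each other with `{"ref": ...}`), the Python below builds a three-object definition: a `Schedule` named `Hourly`, an `EmrCluster`, and an `EmrActivity` that runs a Hadoop streaming step. The IDs `MyCluster` and `MyEmrActivity`, the start date, the instance types, the streaming jar path, and every S3 path are hypothetical placeholders, not the values from the tutorial's own file.

```python
import json


def build_pipeline_definition(output_uri: str) -> dict:
    """Return a sketch of a three-object AWS Data Pipeline definition."""
    # Hadoop streaming arguments; the jar path and S3 locations are placeholders.
    step_parts = [
        "/path/to/hadoop-streaming.jar",
        "-input", "s3://example-bucket/wordcount/input",
        "-output", output_uri,
        "-mapper", "s3://example-bucket/wordcount/wordSplitter.py",
        "-reducer", "aggregate",
    ]
    return {
        "objects": [
            {
                # Schedule object: the work runs once per hour.
                "id": "Hourly",
                "name": "Hourly",
                "type": "Schedule",
                "startDateTime": "2024-01-01T00:00:00",
                "period": "1 hours",
            },
            {
                # Cluster resource (hypothetical ID and instance sizes).
                "id": "MyCluster",
                "name": "MyCluster",
                "type": "EmrCluster",
                "masterInstanceType": "m5.xlarge",
                "coreInstanceType": "m5.xlarge",
                "coreInstanceCount": "1",
                "schedule": {"ref": "Hourly"},
            },
            {
                # Activity that runs the streaming step on the cluster.
                "id": "MyEmrActivity",
                "name": "MyEmrActivity",
                "type": "EmrActivity",
                "schedule": {"ref": "Hourly"},
                "runsOn": {"ref": "MyCluster"},
                # The step is one comma-separated string: jar first, then its arguments.
                "step": ",".join(step_parts),
            },
        ]
    }


if __name__ == "__main__":
    definition = build_pipeline_definition("s3://example-bucket/wordcount/output")
    print(json.dumps(definition, indent=2))
```

Running the script prints the definition as JSON. Building it as a dictionary first makes it easy to check that every `ref` points at an object ID that actually exists before the file is uploaded.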

Copy this code into a text file and save it as MyEmrPipelineDefinition.json. This pipeline has three objects: Hourly, which represents the schedule of the work.

Uploading and Activating the Pipeline Definition

Process Data Using Amazon EMR with Hadoop Streaming - AWS Data Pipeline

You can use AWS Data Pipeline to manage your Amazon EMR clusters.

With AWS Data Pipeline, you can specify preconditions that must be met before the cluster is launched (for example, ensuring that today's data has been uploaded to Amazon S3), a schedule for repeatedly running the cluster, and the cluster configuration to use. The following tutorial walks you through launching a simple cluster. In this tutorial, you create a pipeline for a simple Amazon EMR cluster that runs a pre-existing Hadoop Streaming job provided by Amazon EMR and sends an Amazon SNS notification after the task completes successfully.

ETL Processing Using AWS Data Pipeline and Amazon Elastic MapReduce - AWS Big Data Blog

add-steps - AWS CLI 1.10.46 Command Reference

Options

--cluster-id (string): A unique string that identifies the cluster.

This identifier is returned by create-cluster and can also be obtained from list-clusters.

--steps (list): A list of steps to be executed by the cluster.

Shorthand Syntax:

    Name=string,Args=string,string,Jar=string,ActionOnFailure=string,MainClass=string,Type=string,Properties=string ...

JSON Syntax:

Examples

NOTE: JSON arguments must include options and values as their own items in the list.

RESOLVED: Apache Pig with -tagsource/-tagFile option generates incorrect columns ~ Webopius - Web design

If you are using the fantastic Apache Hadoop & Pig tools to process large datasets, you may encounter situations where Pig Latin isn’t returning the columns you expect.

This is particularly likely if you are using PigStorage with the ‘-tagsource’ or ‘-tagFile’ option to generate a pseudo first column containing the name of the file being processed. Here’s an example.

Consider this scenario:

As you’d expect, the output consists of col0, col2 and col3.

Now, if you change this slightly:

(Note that because the pseudo column ‘filename’ has been added, all other columns have moved, so $0 in the previous example is now $1.)

What should happen is that exactly the same values appear as in the first example; instead, Pig returns incorrect columns.

This odd behaviour can be resolved by launching Pig with the ColumnMapKeyPrune optimizer rule turned off from the command line (Pig's -t, or -optimizer_off, option), like this:

Running example 2 above with this option set produces the result you’d expect to see. You can see this documented on this site, along with some other useful debugging tips.

PigActivity - AWS Data Pipeline

PigActivity provides native support for Pig scripts in AWS Data Pipeline without requiring ShellCommandActivity or EmrActivity.

In addition, PigActivity supports data staging. When the stage field is set to true, AWS Data Pipeline stages the input data as a schema in Pig without additional code from the user.

Using AWS Lambda for Event-driven Data Processing Pipelines - AWS Big Data Blog

Apache pig - Get today's date in yyyy-mm-dd format in Pig

Emr-sample-apps/do-reports2.pig at master · awslabs/emr-sample-apps

HowTo Format Date For Display or Use In a Shell Script

By Vivek Gite on February 27, 2007; last updated March 31, 2016

How do I format the date for display on the screen, or for use in my shell scripts, as per my requirements on Linux or Unix-like operating systems?

You need to use the standard date command to format the date or time. You can use the same command in a shell script.

Syntax

The syntax is as follows for the GNU date and BSD date commands:

    date +FORMAT
    date +"%FORMAT"
    date +"%FORMAT%FORMAT"
    date +"%FORMAT-%FORMAT"

An operand with a leading plus (+) sign signals a user-defined format string, which specifies the format in which to display the date and time.

Task: Display date in mm-dd-yy format

Open a terminal and type the following date command:

    $ date +"%m-%d-%y"

Sample outputs:

To turn on 4-digit year display:

    $ date +"%m-%d-%Y"

To display the date in mm/dd/yy format:

    $ date +"%D"

Apache pig - STORE output to a single CSV?

Java - How to get the current time stamp in PIG

Apache pig - How can I incorporate the current input filename into my Pig Latin script?

Executing Work on Existing Resources Using Task Runner - AWS Data Pipeline

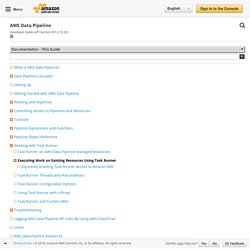

You can install Task Runner on computational resources that you manage, such as an Amazon EC2 instance, or a physical server or workstation.

Task Runner can be installed anywhere, on any compatible hardware or operating system, provided that it can communicate with the AWS Data Pipeline web service. This approach can be useful when, for example, you want to use AWS Data Pipeline to process data that is stored inside your organization’s firewall. By installing Task Runner on a server in the local network, you can access the local database securely and then poll AWS Data Pipeline for the next task to run.
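Task Runner works on a pull model: it asks the web service for the next task, runs it locally, and reports the outcome. The minimal Python sketch below shows only that loop. `poll`, `execute`, and `report` are hypothetical callables standing in for the real service calls (the AWS Data Pipeline API exposes `PollForTask` and `SetTaskStatus` actions for this purpose), and the `Task` shape is an assumption; this is not Task Runner's actual implementation.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Task:
    """A unit of work handed out by the service (hypothetical shape)."""
    task_id: str
    command: str


def run_worker(
    poll: Callable[[], Optional[Task]],
    execute: Callable[[Task], bool],
    report: Callable[[str, str], None],
    max_polls: int,
    idle_sleep: float = 0.0,
) -> int:
    """Poll up to max_polls times, run each task, and report its status.

    Returns the number of tasks that were executed.
    """
    processed = 0
    for _ in range(max_polls):
        task = poll()
        if task is None:
            # No work available: wait before asking again.
            time.sleep(idle_sleep)
            continue
        try:
            succeeded = execute(task)
        except Exception:
            # Treat a local error as a failed task instead of crashing the worker.
            succeeded = False
        report(task.task_id, "FINISHED" if succeeded else "FAILED")
        processed += 1
    return processed


if __name__ == "__main__":
    # Fake in-memory queue standing in for the web service.
    queue = [Task("t1", "ok"), None, Task("t2", "fail")]
    count = run_worker(
        poll=lambda: queue.pop(0) if queue else None,
        execute=lambda t: t.command == "ok",
        report=lambda task_id, status: print(task_id, status),
        max_polls=5,
    )
    print("processed", count)
```

Because the worker only makes outbound requests, it can sit behind a firewall next to the local database; a long-running worker would loop indefinitely with a non-zero `idle_sleep`, and `max_polls` exists only to keep the example finite.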

When AWS Data Pipeline ends processing or deletes the pipeline, the Task Runner instance remains running on your computational resource until you manually shut it down. The Task Runner logs persist after pipeline execution is complete.

Note: You can install Task Runner only on Linux, UNIX, or Mac OS.

This section explains how to install and configure Task Runner and its prerequisites.

Building Scalable and Responsive Big Data Interfaces with AWS Lambda - AWS Big Data Blog

Submit Pig Work - Amazon Elastic MapReduce

This section demonstrates submitting Pig work to an Amazon EMR cluster.

The examples that follow are based on the Amazon EMR sample Apache Log Analysis using Pig. The sample evaluates Apache log files and then generates a report containing the total bytes transferred, a list of the top 50 IP addresses, a list of the top 50 external referrers, and the top 50 search terms from Bing and Google. The Pig script is stored in an Amazon S3 bucket, the input data is read from an Amazon S3 bucket, and the output is saved to an Amazon S3 bucket.
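Pig work such as this sample is submitted to a running cluster as a step. The Python sketch below builds one step in the JSON form accepted by `aws emr add-steps --steps`, keeping each option and its value as separate `Args` items, as the JSON syntax requires. The `Type`, `Name`, `ActionOnFailure`, and `Args` keys follow the `--steps` syntax, and `-f` and `-p` are Pig's script-file and parameter options; the helper names `pig_step` and `add_steps_command`, the cluster ID `j-EXAMPLE`, the `INPUT`/`OUTPUT` parameter names, and all bucket paths are hypothetical placeholders.

```python
import json
import shlex
from typing import Dict, List


def pig_step(name: str, script_uri: str, params: Dict[str, str],
             action_on_failure: str = "CONTINUE") -> dict:
    """Build one PIG step in the JSON form used by `aws emr add-steps --steps`."""
    args = ["-f", script_uri]  # Pig's option for running a script file
    for key, value in params.items():
        # Option and value are separate list items, e.g. "-p", "INPUT=...".
        args += ["-p", f"{key}={value}"]
    return {
        "Type": "PIG",
        "Name": name,
        "ActionOnFailure": action_on_failure,
        "Args": args,
    }


def add_steps_command(cluster_id: str, steps: List[dict]) -> List[str]:
    """Return an argv list for `aws emr add-steps` with the steps as inline JSON."""
    return ["aws", "emr", "add-steps",
            "--cluster-id", cluster_id,
            "--steps", json.dumps(steps)]


if __name__ == "__main__":
    # Placeholder cluster ID and S3 locations; substitute your own.
    step = pig_step(
        "Apache log report",
        "s3://example-bucket/scripts/do-reports2.pig",
        {"INPUT": "s3://example-bucket/logs/input",
         "OUTPUT": "s3://example-bucket/logs/output"},
    )
    print(shlex.join(add_steps_command("j-EXAMPLE", [step])))
```

Printing the command through `shlex.join` quotes the JSON so it can be pasted into a shell unchanged; passing the argv list to `subprocess.run` instead would avoid shell quoting entirely.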