Getting Started with Elasticsearch and Kibana on Amazon EMR. Collecting Logs into Elasticsearch and S3. Elasticsearch is an open source, distributed, real-time search backend.

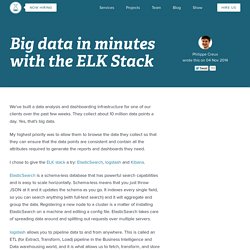

While Elasticsearch can meet a lot of analytics needs, it is best complemented with other analytics backends like Hadoop and MPP databases. As a "staging area" for such complementary backends, AWS's S3 is a great fit. As an added bonus, S3 serves as a highly durable archiving backend.

This article shows how to:

- Collect Apache httpd logs and syslogs across web servers.
- Securely ship the collected logs into the aggregator Fluentd in near real-time.
- Store the collected logs into Elasticsearch and S3.
- Visualize the data with Kibana in real-time.

Prerequisites

- A basic understanding of Fluentd
- AWS account credentials

In this guide, we assume we are running td-agent on Ubuntu Precise.

Setup: Elasticsearch and Kibana

Add Elasticsearch's GPG key:

$ sudo get -O - | sudo apt-key add -
$ sudo echo "deb stable main" > /etc/apt/sources.list.d/elasticsearch.list
$ sudo apt-get update
$ sudo apt-get install elasticsearch
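To make the "collect Apache httpd logs, then store them in Elasticsearch" steps above more concrete, here is a minimal Python sketch of what turning one access-log line into a JSON document might look like. In the setup described here Fluentd does this parsing itself; the regular expression, the `parse_line` helper, and the field names (`host`, `code`, `@timestamp`, and so on) are illustrative assumptions, not Fluentd's exact schema.

```python
import json
import re
from datetime import datetime

# Apache "combined" log format. Field names are illustrative assumptions.
COMBINED = re.compile(
    r'(?P<host>\S+) \S+ (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<code>\d{3}) (?P<size>\d+|-)'
    r'(?: "(?P<referer>[^"]*)" "(?P<agent>[^"]*)")?'
)


def parse_line(line):
    """Return a dict for one access-log line, or None if it does not match."""
    m = COMBINED.match(line)
    if m is None:
        return None
    rec = m.groupdict()
    rec["code"] = int(rec["code"])
    # Apache writes "-" when no body bytes were sent.
    rec["size"] = 0 if rec["size"] == "-" else int(rec["size"])
    # Convert the Apache timestamp into ISO 8601 for indexing.
    ts = datetime.strptime(rec.pop("time"), "%d/%b/%Y:%H:%M:%S %z")
    rec["@timestamp"] = ts.isoformat()
    return rec


sample = (
    '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] '
    '"GET /apache_pb.gif HTTP/1.0" 200 2326 '
    '"http://www.example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"'
)
print(json.dumps(parse_line(sample), indent=2))
```

Each resulting dictionary is the kind of schema-free record that could be indexed into Elasticsearch or written to S3 as one JSON line; lines that do not match are returned as `None` so a caller can decide whether to drop or quarantine them.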

Big data in minutes with the ELK Stack. We’ve built a data analysis and dashboarding infrastructure for one of our clients over the past few weeks.

They collect about 10 million data points a day. Yes, that’s big data. My highest priority was to allow them to browse the data they collect so that they can ensure that the data points are consistent and contain all the attributes required to generate the reports and dashboards they need. I chose to give the ELK stack a try: Elasticsearch, Logstash, and Kibana.

Logstash Plugin for Amazon DynamoDB.

The Logstash plugin for Amazon DynamoDB gives you a nearly real-time view of the data in your DynamoDB table.

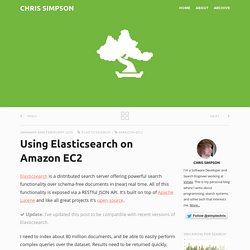

The Logstash plugin for DynamoDB uses DynamoDB Streams to parse and output data as it is added to a DynamoDB table. After you install and activate the plugin, it scans the data in the specified table, then starts consuming your updates through Streams and outputs them to Elasticsearch or to a Logstash output of your choice. Logstash is a data pipeline service that processes and parses data, and then outputs it to a selected location in a selected format.

Bigdesk for elasticsearch.

Using Elasticsearch on Amazon EC2. Elasticsearch is a distributed search server offering powerful search functionality over schema-free documents in (near) real time.

All of this functionality is exposed via a RESTful JSON API. It's built on top of Apache Lucene and like all great projects it's open source. Update: I've updated this post to be compatible with recent versions of Elasticsearch. I need to index about 80 million documents, and be able to easily perform complex queries over the dataset.
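As a rough illustration of what "exposed via a RESTful JSON API" means for indexing many documents and querying them, the sketch below builds a request body for the `_bulk` endpoint and a boolean query using only Python's standard library. The index name `articles`, the document fields, and the `bulk_body` helper are hypothetical; older Elasticsearch versions also expect a `_type` in the action line, so treat this as a sketch to adapt to your version rather than the post's own method.

```python
import json


def bulk_body(index, docs):
    """Build a newline-delimited JSON body for the _bulk endpoint.

    docs is an iterable of (doc_id, source_dict) pairs. Every document
    contributes an action line followed by a source line, and every
    line ends with a newline, as the bulk format requires.
    """
    lines = []
    for doc_id, source in docs:
        lines.append(json.dumps({"index": {"_index": index, "_id": doc_id}}))
        lines.append(json.dumps(source))
    return "".join(line + "\n" for line in lines)


# Hypothetical documents, for illustration only.
docs = [
    ("1", {"title": "first post", "views": 10}),
    ("2", {"title": "second post", "views": 25}),
]
body = bulk_body("articles", docs)
print(body, end="")

# A query body combining a full-text match with a numeric range filter.
query = {
    "query": {
        "bool": {
            "must": [{"match": {"title": "post"}}],
            "filter": [{"range": {"views": {"gte": 20}}}],
        }
    }
}
print(json.dumps(query, indent=2))
```

The bulk body would be sent with an HTTP POST to `/_bulk` and the query to `/articles/_search`; batching documents this way avoids one round trip per document, which matters at the scale of tens of millions of documents.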

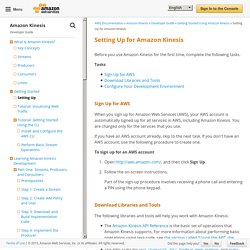

Due to its distributed nature, Elasticsearch is ideal for this task, and EC2 provides a convenient platform to scale as required. I'd recommend downloading a copy locally first and familiarising yourself with the basics, but if you want to jump straight in, be my guest. I'll assume you already have an Amazon AWS account and can navigate around the AWS console. Fire up an instance of with your favourite AMI.

Wget. Input plugins. Logstash-plugins. Welcome to Apache Flume — Apache Flume. Open Source Data Collector. Awslabs/amazon-kinesis-client-ruby.

Setting Up for Amazon Kinesis - Amazon Kinesis. Before you use Amazon Kinesis for the first time, complete the following tasks.

When you sign up for Amazon Web Services (AWS), your AWS account is automatically signed up for all services in AWS, including Amazon Kinesis. You are charged only for the services that you use. If you have an AWS account already, skip to the next task. If you don't have an AWS account, use the following procedure to create one.

To sign up for an AWS account

1. Open and then click Sign Up.
2. Follow the on-screen instructions.

Part of the sign-up procedure involves receiving a phone call and entering a PIN using the phone keypad.

Configure Your Development Environment

To use the KCL, ensure that your Java development environment meets the following requirements: Java 1.7 (Java SE 7 JDK) or later. Note that the AWS SDK for Java includes Apache Commons and Jackson in the third-party folder.