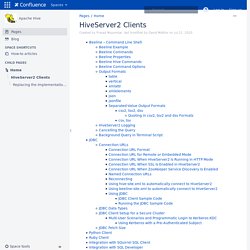

HiveServer2 Clients - Apache Hive. This page describes the different clients supported by HiveServer2.

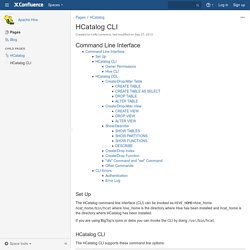

Version Introduced in Hive version 0.11. See HIVE-2935. HiveServer2 supports a command shell Beeline that works with HiveServer2. It's a JDBC client that is based on the SQLLine CLI ( There’s detailed documentation of SQLLine which is applicable to Beeline as well. Replacing the Implementation of Hive CLI Using Beeline The Beeline shell works in both embedded mode as well as remote mode. In remote mode HiveServer2 only accepts valid Thrift calls – even in HTTP mode, the message body contains Thrift payloads. Beeline Example. HCatalog CLI - Apache Hive. Set Up The HCatalog command line interface (CLI) can be invoked as HIVE_HOME=hive_home hcat_home/bin/hcat where hive_home is the directory where Hive has been installed and hcat_home is the directory where HCatalog has been installed.

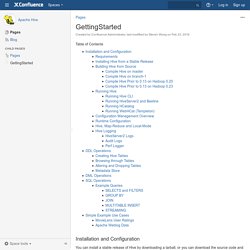

If you are using BigTop's rpms or debs you can invoke the CLI by doing /usr/bin/hcat. LanguageManual Cli - Apache Hive. HCatalog - Apache Hive. GettingStarted - Apache Hive. Table of Contents Installation and Configuration You can install a stable release of Hive by downloading a tarball, or you can download the source code and build Hive from that.

Requirements Java 1.7Note: Hive versions 1.2 onward require Java 1.7 or newer. Analytics with Kibana and Elasticsearch through Hadoop – part 1 – Introduction. Introduction I’ve recently started learning more about the tools and technologies that fall under the loose umbrella term of Big Data, following a lot of the blogs that Mark Rittman has written, including getting Apache log data into Hadoop, and bringing Twitter data into Hadoop via Mongodb.

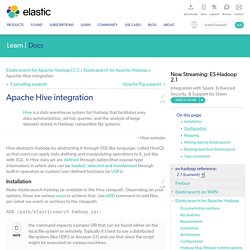

What I wanted to do was visualise the data I’d brought in, looking for patterns and correlations. Obviously the de facto choice at our shop would be Oracle BI, which Mark previously demonstrated reporting on data in Hadoop through Hive and Impala. But, this was more at the “Data Discovery” phase that is discussed in the new Information Management and Big Data Reference Architecture that Rittman Mead helped write with Oracle. I basically wanted a quick and dirty way to start chucking around columns of data without yet being ready to impose the structure of the OBIEE metadata model on it. The Data I’ve got three sources of data I’m going to work with, all related to the Rittman Mead website: The Tools. Apache Hive integration. Apache Hive integrationedit Hive abstracts Hadoop by abstracting it through SQL-like language, called HiveQL so that users can apply data defining and manipulating operations to it, just like with SQL.

In Hive data set are defined through tables (that expose type information) in which data can be loaded, selected and transformed through built-in operators or custom/user defined functions (or UDFs). Installationedit Make elasticsearch-hadoop jar available in the Hive classpath. Depending on your options, there are various ways to achieve that. ADD /path/elasticsearch-hadoop.jar; the command expects a proper URI that can be found either on the local file-system or remotely. As an alternative, one can use the command-line: Archival and Analytics - Importing MySQL data into Hadoop Cluster using Sqoop.

We won’t bore you with buzzwords like volume, velocity and variety.

This post is for MySQL users who want to get their hands dirty with Hadoop, so roll up your sleeves and prepare for work. Why would you ever want to move MySQL data into Hadoop? One good reason is archival and analytics. You might not want to delete old data, but rather move it into Hadoop and make it available for further analysis at a later stage. In this post, we are going to deploy a Hadoop Cluster and export data in bulk from a Galera Cluster using Apache Sqoop. We will use Apache Ambari to deploy Hadoop (HDP 2.1) on three servers. The ClusterControl node has been installed with an HAproxy instance to load balance Galera connections and listen on port 33306. Prerequisites All hosts are running CentOS 6.5 with firewall and SElinux turned off. 192.168.0.100 clustercontrol haproxy mysql Create an SSH key and configure passwordless SSH on hadoop1 to other Hadoop nodes to automate the deployment by Ambari Server. HiveServer2 Clients - Apache Hive.

This page describes the different clients supported by HiveServer2.

Version Icon. Hadoop Hive - Hadoop Hive- Command Line Interface (CLI) Usage: Usage: hive [-hiveconf x=y]* [<-i filename>]* [<-f filename>|<-e query-string>] [-S] -i <filename> Initialization Sql from file (executed automatically and silently before any other commands) -e 'quoted query string' Sql from command line -f <filename> Sql from file -S Silent mode in interactive shell where only data is emitted -hiveconf x=y Use this to set hive/hadoop configuration variables.

-e and -f cannot be specified together. In the absence of these options, interactive shell is started. However, -i can be used with any other options. To see this usage help, run hive -h The cli when invoked without the -i option will attempt to load HIVE_HOME/bin/.hiverc and $HOME/.hiverc as initialization files.