[1910.03531] Causal Inference for Comprehensive Cohort Studies. [1910.03368] Computing the Expected Value of Sample Information Efficiently: Expertise and Skills Required for Four Model-Based Methods. Authors:Natalia R.

![[1910.03368] Computing the Expected Value of Sample Information Efficiently: Expertise and Skills Required for Four Model-Based Methods](http://cdn.pearltrees.com/s/pic/th/information-efficiently-207486963)

Kunst, Edward Wilson, Fernando Alarid-Escudero, Gianluca Baio, Alan Brennan, Michael Fairley, David Glynn, Jeremy D. Goldhaber-Fiebert, Chris Jackson, Hawre Jalal, Nicolas A. Menzies, Mark Strong, Howard Thom, Anna Heath (on behalf of the Collaborative Network for Value of Information (ConVOI)) [1910.02722] Minimal sample size in balanced ANOVA models of crossed, nested and mixed classifications. [1910.02042] A reckless guide to P-values: local evidence, global errors. [1910.01305] Efficient Computation of Linear Model Treatment Effects in an Experimentation Platform. [1910.13954] Model-Robust Inference for Clinical Trials that Improve Precision by Stratified Randomization and Adjustment for Additional Baseline Variables.

[1808.04904] False Discovery Rate Controlled Heterogeneous Treatment Effect Detection for Online Controlled Experiments. [1803.06258] Online Controlled Experiments for Personalised e-Commerce Strategies: Design, Challenges, and Pitfalls. [1501.00450] Flexible Online Repeated Measures Experiment. [1808.00720] RecoGym: A Reinforcement Learning Environment for the problem of Product Recommendation in Online Advertising. [1808.04904] False Discovery Rate Controlled Heterogeneous Treatment Effect Detection for Online Controlled Experiments. [1808.08347] A Comparison of the Taguchi Method and Evolutionary Optimization in Multivariate Testing. [1810.11185] Beyond A/B Testing: Sequential Randomization for Developing Interventions in Scaled Digital Learning Environments. [1811.00457] Test & Roll: Profit-Maximizing A/B Tests. [1910.03788] On Post-Selection Inference in A/B Tests.

[1906.09757] The Identification and Estimation of Direct and Indirect Effects in A/B Tests through Causal Mediation Analysis. [1906.09712] Sequential estimation of quantiles with applications to A/B-testing and best-arm identification. [1906.06390] A/B Testing Measurement Framework for Recommendation Models Based on Expected Revenue. [1906.05959] Early Detection of Long Term Evaluation Criteria in Online Controlled Experiments. [1905.11797] Repeated A/B Testing. [1905.10176] Machine Learning Estimation of Heterogeneous Treatment Effects with Instruments. [1905.02068] Informed Bayesian Inference for the A/B Test. [1901.08984] A Discrepancy-Based Design for A/B Testing Experiments. [1901.10505] A/B Testing in Dense Large-Scale Networks: Design and Inference. [1902.02021] On Heavy-user Bias in A/B Testing. [1902.07133] Estimating Network Effects Using Naturally Occurring Peer Notification Queue Counterfactuals.

[1903.08762] Large-Scale Online Experimentation with Quantile Metrics. At Booking.com, Innovation Means Constant Failure. Listen and subscribe to this podcast via Apple Podcasts | Google Podcasts | RSS Harvard Business School professor Stefan Thomke discusses how past experience and intuition can be misleading when attempting to launch an innovative new product, service, business model, or process in his case “Booking.com” (co-author: Daniela Beyersdorfer) and his new book, “Experimentation Works.”

Instead, Booking.com and other innovative firms embrace a culture where testing, experimentation, and even failure are at the heart of what they do. Download this podcast HBR Presents is a network of podcasts curated by HBR editors, bringing you the best business ideas from the leading minds in management. The views and opinions expressed are solely those of the authors and do not necessarily reflect the official policy or position of Harvard Business Review or its affiliates. BRIAN KENNY: The scientific method. Test, Learn, Adapt: Developing Public Policy with Randomised Controlled Trials. Test, Learn, Adapt is a collaboration between the Behavioural Insights Team, Ben Goldacre, author of Bad Science, and David Torgerson, Director of the University of York Trials Unit.

The paper sets out a core aspect of the Behavioural Insights Team’s methodology. The paper argues that Randomised Controlled Trials (RCTs), which are now widely used in medicine, international development, and internet-based businesses, should be used much more extensively in public policy to enable policymakers to test which interventions are most effective. Test, Learn, Adapt also sets out nine separate steps that are required to set up any RCT.

Many of these steps will be familiar to anyone putting in place a well-designed policy evaluation – for example, deciding in advance the outcome that we are seeking to achieve. Others are less familiar – for example, randomly allocating the intervention to control or intervention groups. How Strong is Your Innovation Evidence? More and more organizations test their business ideas before implementing them.

The best ones perform a mix of experiments to prove that their ideas have legs. They ask two fundamental questions to design the ideal mix of experiments: Speed: How quickly does an experiment produce insights? Strength: How strong is the evidence produced by an experiment? How we lost (and found) millions by not A/B testing. We’ve always felt strongly that we should share our lessons in business and technology with the world, and that includes both our successes and our failures.

We’ve written about some great successes: how we’ve improved support response time, sped up applications, and improved reliability. Today I want to share an experience that wasn’t a success. This is the story of how we made a change to the Basecamp.com site that ended up costing us millions of dollars, how we found our way back from that, and what we learned in the process. What happened? This story starts back in February 2014 when we officially became Basecamp the company. The result was a fairly dramatic change, both in content and visual style. One very significant change here was that we removed the signup form from the homepage. In the first couple of months after we changed the marketing site, signups trended lower than they had at the start of the year.

Where did we go wrong and what can we learn? Multiple Responses in A/B Testing – Building Ibotta. Tl;dr Our data scientists use previous experiments to build a prior on the relationships of treatment effects between several response variables.

Using this prior information should 1) help make more accurate estimates and 2) mitigate multi comparison issues. Introduction At Ibotta the typical A/B test randomly assigns a treatment to a subset of the population with the goal of detecting an increase (or decrease) in a particular response variable, like downloads, redemptions or clicks . If the resulting treatment effect is larger than the noise associated with it at a specific cutoff then we conclude the treatment has a non zero (and hopefully good) effect. Now suppose someone has the great idea to look to see if the treatment effects another response variable.

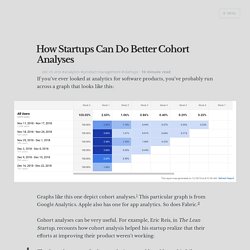

However, it’s not so easy to look at other response variables using p-values and traditional cutoff points because of multiple comparisons issues. How Startups Can Do Better Cohort Analyses · Philosophical Hacker. If you’ve ever looked at analytics for software products, you’ve probably run across a graph that looks like this: Graphs like this one depict cohort analyses.

This particular graph is from Google Analytics.

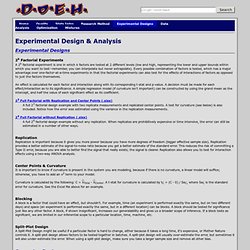

Use cases. A/B Testing. Python - Algorithm for A/B testing. Awesome-agile/A-B-Testing.md at master · lorabv/awesome-agile. Mobile Ads Click-Through Rate (CTR) Prediction. Complex Research Terminology Simplified: Paradigms, Ontology, Epistemology and Methodology. Research terminology simplified: Paradigms, axiology, ontology, epistemology ... - Laura Killam. 3.11 Validity and Reliability Of Research. External and Internal Validity. Types of experimental methods. True, Quasi, Pre, and Non Experimental designs. Quantitative Research Designs: Descriptive non-experimental, Quasi-experimental or Experimental? D.O.E. Handbook - Experimental Design. Experimental Designs 2k Factorial Experiments A 2k factorial experiment is one in which k factors are tested at 2 different levels (low and high, representing the lower and upper bounds within which you want to test--remember, you can interpolate but never extrapolate).

Every possible combination of factors is tested, which has a major advantage over one-factor-at-a-time experiments in that the factorial experiments can also test for the effects of interactions of factors as opposed to just the factors themselves. Overview quantitative. Regression discontinuity all comments. Between-Subjects and Within-Subjects Designs in Counseling Research.