What are some promising open-source alternatives to Hadoop MapReduce for map/reduce?

Mincemeat.py: MapReduce on Python. Mapredus. Plasma. Mapreduce. Octopy - Project Hosting on Google Code. Inspired by Google's MapReduce and Starfish for Ruby, octo.py is a fast-n-easy MapReduce implementation for Python.

Octo.py doesn't aim to meet all your distributed computing needs, but its simple approach is amenable to a large proportion of parallelizable tasks. If your code has a for-loop, there's a good chance that you can make it distributed with just a few small changes. If you're already using Python's map() and reduce() functions, the changes needed are trivial! It is not an exact clone of the Big-G's MapReduce, but I'm guessing that you aren't operating a Google-like cluster with a distributed Google File System and can't use a MapReduce clone. Instead, the scope of the project is more akin to Starfish, running on an ad-hoc cluster of computers. CakePHP: the rapid development php framework: Errors. Riak Wiki: Welcome to the Riak Wiki.
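To illustrate the kind of code the octo.py description has in mind, here is a minimal single-machine word count written with Python's built-in map() and functools.reduce(). It does not use octo.py's API at all; the sample documents and the names map_fn and reduce_fn are hypothetical and only show the shape of a job that a MapReduce-style tool could distribute.

```python
from collections import Counter
from functools import reduce

# Hypothetical input: each string stands in for one document.
documents = ["the quick brown fox", "the lazy dog", "the fox"]

def map_fn(doc):
    """Map step: count the words of a single document."""
    return Counter(doc.split())

def reduce_fn(left, right):
    """Reduce step: merge two partial word counts."""
    return left + right

# Plain map() and reduce(); a distributed tool would spread the map
# calls across machines and merge the partial results the same way.
word_counts = reduce(reduce_fn, map(map_fn, documents), Counter())
print(word_counts.most_common(3))
```

Because map_fn only looks at one document and reduce_fn is associative, the per-document work can run anywhere and be merged in any order, which is what makes this pattern easy to distribute.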

Sector/Sphere: High Performance Distributed Data Storage and Processing. Space. Galago Guidebook. Warning: Some of this text is out of date and refers to an older version of Galago.

I've added it to the new website for reference. What is Galago? Galago is a search engine toolkit primarily for research and educational use. It is released under a BSD license, so it may be incorporated freely into commercial work, but most commercial users may want something with less of an experimental focus. Galago differs from the other open source search packages primarily in its customizability and scalability. Some of the indexes built in Galago can be processed by C++ code for faster retrieval performance. Retrieval Customization: There are two general ways to customize the retrieval process: generating custom indexes, and using the query language.

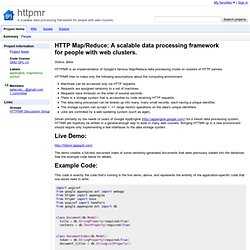

The custom index approach lets you use the flexible indexing tools in Galago to build specialized indexes that have your own ranking function built-in. The slower, but more flexible approach is to use the query language. TupleFlow. Httpmr - Project Hosting on Google Code. Status: Beta HTTPMR is an implementation of Google's famous Map/Reduce data processing model on clusters of HTTP servers.

HTTPMR tries to make only the following assumptions about the computing environment: Machines can be accessed only via HTTP requests. Requests are assigned randomly to a set of machines. Requests have timeouts on the order of several seconds. Driven primarily by the needs of users of Google AppEngine for a robust data processing system, HTTPMR will hopefully be written in a general-enough way to work in many web clusters. The demo creates a full-text document index of some randomly-generated documents that were previously loaded into the datastore. Qizmt - Project Hosting on Google Code. MySpace Qizmt is a mapreduce framework for executing and developing distributed computation applications on large clusters of Windows servers.

The MySpace Qizmt project develops open-source software for reliable, scalable, super-easy distributed computation. MySpace Qizmt core features include: Highly Scalable. Applications of MySpace Qizmt: Data Mining, Analytics, Bulk Media Processing, Content Indexing. Core MySpace Qizmt Features: MySpace Qizmt currently supports .NET 3.5 SP1 on Windows 2003 Server, Windows 2008 Server, Windows Vista and Windows 7. MySpace Qizmt IDE/Debugger User Documentation. Mapreduce Bash Script.

Cloudmapreduce - Project Hosting on Google Code. Cloud MapReduce was initially developed at Accenture Technology Labs.

It is a MapReduce implementation on top of the Amazon Cloud OS. Cloud MapReduce has minimal risk w.r.t. the MapReduce patent, compared to other open source implementations, as it is implemented in a completely different architecture than described in the Google paper. By exploiting a cloud OS's scalability, Cloud MapReduce achieves three primary advantages over other MapReduce implementations built on a traditional OS: It is faster than other implementations (e.g., 60 times faster than Hadoop in one case; speedup depends on the application and data). The Phoenix System for MapReduce Programming. Misco: A Mobile MapReduce Framework. Disco Project. [MAPREDUCE-64] Map-side sort is hampered by io.sort.record.percent. One simple way might be to simply add TRACE level log messages at every collect() call with the current values of every index plus the spill number [...]

That could be an interesting visualization. I'd already made up the diagrams, but anything that helps the analysis and validation would be welcome. I'd rather not add a trace to the committed code, but data from it sounds great. I ran a simple test where I was running a sort of 10 byte records, and it turned out that the "optimal" io.sort.record.percent caused my job to be significantly slower. It was the case then that a small number of large spills actually ran slower than a large number of small spills.
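The trade-off behind io.sort.record.percent can be made concrete with a little arithmetic. The sketch below assumes a layout in which the map-side sort buffer (io.sort.mb) is split into a fixed accounting region and a data region, with 16 bytes of accounting metadata per record. That 16-byte figure, the 100 MB buffer, the 0.05 fraction, and the function names are all assumptions made for illustration, not values taken from the discussion above.

```python
# Assumed per-record accounting overhead in bytes (an assumption for
# this sketch, not a figure stated in the quoted discussion).
ACCT_BYTES_PER_RECORD = 16

def buffer_capacity(sort_mb, record_percent, record_bytes):
    """Return (records that fit, limit from accounting, limit from data)."""
    total = sort_mb * 1024 * 1024
    acct_bytes = int(total * record_percent)   # region for record metadata
    data_bytes = total - acct_bytes            # region for serialized bytes
    max_by_acct = acct_bytes // ACCT_BYTES_PER_RECORD
    max_by_data = data_bytes // record_bytes
    return min(max_by_acct, max_by_data), max_by_acct, max_by_data

def balanced_record_percent(record_bytes):
    """Fraction at which both regions fill at the same time."""
    return ACCT_BYTES_PER_RECORD / (ACCT_BYTES_PER_RECORD + record_bytes)

fits, by_acct, by_data = buffer_capacity(100, 0.05, 10)
print(fits, by_acct, by_data)
print(round(balanced_record_percent(10), 3))
```

Under these assumptions, 10-byte records exhaust the accounting region long before the data region, so most of the buffer sits unused at each spill. The balanced fraction is the sense in which a setting could be called "optimal" for space; as the comment above reports, filling the buffer more completely did not make that job faster.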

IIRC those tests used a non-RawComparator, right? The documentation (such as it is) in HADOOP-2919 describes the existing code. The proposed design adds another parameter, the equator (while kvstart and bufstart could be replaced with a single variable similar to equator, the effort seemed misspent).