Programming.

Analytical Engine.

Image caption: Trial model of a part of the Analytical Engine, built by Babbage, as displayed at the Science Museum (London).[1]

The Analytical Engine was a proposed mechanical general-purpose computer designed by English mathematician Charles Babbage.[2] It was first described in 1837 as the successor to Babbage's Difference Engine, a design for a mechanical computer.

The Analytical Engine incorporated an arithmetic logic unit, control flow in the form of conditional branching and loops, and integrated memory, making it the first design for a general-purpose computer that could be described in modern terms as Turing-complete.[3][4] Babbage was never able to complete construction of any of his machines due to conflicts with his chief engineer and inadequate funding.[5][6] It was not until the 1940s that the first general-purpose computers were actually built.

Antikythera mechanism.

Image captions: The Antikythera mechanism (Fragment A, front); the Antikythera mechanism (Fragment A, back).

The Antikythera mechanism (/ˌæntɨkɨˈθɪərə/ ANT-i-ki-THEER-ə or /ˌæntɨˈkɪθərə/ ANT-i-KITH-ə-rə) is an ancient analog computer[1][2][3][4] designed to predict astronomical positions and eclipses.

Image evolution. What is this?

A simulated-annealing-like optimization algorithm, a reimplementation of Roger Alsing's excellent idea. The goal is to represent an image as a collection of overlapping polygons of various colors and transparencies. We start from 50 random polygons that are invisible. In each optimization step we randomly modify one parameter (such as a color component or a polygon vertex) and check whether the new variant looks more like the original image. If it does, we keep it and continue mutating it instead. Fitness is based on the sum of pixel-by-pixel differences from the original image. This implementation is based on Roger Alsing's description, though not on his code.

How does it look after some time?

| Polygons | Vertices per polygon | Run time | Beneficial mutations | Candidates | Fitness |
|---|---|---|---|---|---|
| 50 | 4 | ~15 minutes | 644 | 6,120 | 88.74% |
| 50 | 6 | ~15 minutes | 646 | 6,024 | 89.04% |
| 50 | 10 | ~15 minutes | 645 | 5,367 | 87.01% |
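The mutate-and-keep-if-better loop described above can be sketched in a few dozen lines. This is a minimal Python sketch, not Roger Alsing's code or the reimplementation's code: it assumes a tiny grayscale target image stored as a list of rows, polygons drawn with an even-odd point-in-polygon test and alpha-blended over a black background, and fitness measured directly as the summed absolute pixel difference (lower is better). The image size, the `points`/`gray`/`alpha` fields and all function names are hypothetical. Because only improvements are kept, the loop is strictly a hill climber; full simulated annealing would occasionally accept a worse candidate.

```python
import random

# Hypothetical sketch: a tiny grayscale target image, stored as H rows of W ints (0..255).
W, H = 12, 12
N_POLYGONS = 50   # the description starts from 50 polygons
N_VERTICES = 4    # the 4-vertex variant from the results table


def point_in_polygon(x, y, pts):
    """Even-odd ray-casting test: is (x, y) inside the polygon with vertices pts?"""
    inside = False
    for i in range(len(pts)):
        x1, y1 = pts[i]
        x2, y2 = pts[(i + 1) % len(pts)]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray (so y1 != y2)
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def random_polygon(rng):
    """A random polygon with alpha 0.0, i.e. initially invisible."""
    pts = [[rng.uniform(0, W), rng.uniform(0, H)] for _ in range(N_VERTICES)]
    return {"points": pts, "gray": rng.randint(0, 255), "alpha": 0.0}


def render(polygons):
    """Alpha-blend the polygons, in order, over a black background."""
    img = [[0.0] * W for _ in range(H)]
    for poly in polygons:
        a = poly["alpha"]
        if a == 0.0:
            continue  # invisible polygons do not change any pixel
        for y in range(H):
            for x in range(W):
                if point_in_polygon(x + 0.5, y + 0.5, poly["points"]):
                    img[y][x] = (1 - a) * img[y][x] + a * poly["gray"]
    return img


def difference(img, target):
    """Sum of pixel-by-pixel absolute differences (lower means a closer match)."""
    return sum(abs(img[y][x] - target[y][x]) for y in range(H) for x in range(W))


def mutate(polygons, rng):
    """Return a copy of the polygons with one randomly chosen parameter re-randomized."""
    new = [{"points": [p[:] for p in poly["points"]],
            "gray": poly["gray"], "alpha": poly["alpha"]} for poly in polygons]
    poly = rng.choice(new)
    kind = rng.randrange(3)
    if kind == 0:
        poly["gray"] = rng.randint(0, 255)          # color component
    elif kind == 1:
        poly["alpha"] = rng.uniform(0.0, 1.0)       # transparency
    else:
        vertex = rng.choice(poly["points"])         # one vertex coordinate
        axis = rng.randrange(2)
        vertex[axis] = rng.uniform(0, W if axis == 0 else H)
    return new


def evolve(target, steps, seed=0):
    """Keep a candidate only if it is closer to the target than the current best."""
    rng = random.Random(seed)
    best = [random_polygon(rng) for _ in range(N_POLYGONS)]
    best_diff = difference(render(best), target)
    history = [best_diff]
    for _ in range(steps):
        candidate = mutate(best, rng)
        cand_diff = difference(render(candidate), target)
        if cand_diff < best_diff:
            best, best_diff = candidate, cand_diff
        history.append(best_diff)
    return best, best_diff, history
```

For example, calling `evolve(target, steps=150)` with a 12×12 target returns the best polygon set, its difference score and the per-step history. The history never increases, since worse candidates are simply discarded. Converting the difference into a percentage fitness like the ones in the table would need a normalization the description does not spell out.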

Top 50 Free Open Source Classes on Computer Science: Comtechtor. Computer science is an interesting field to go into.

There are a number of opportunities in computer science that you can take advantage of. With computers increasingly becoming a regular part of life, people who can work with them have good job prospects. A computer science program can lead to a good salary, as long as you are careful to keep your skills up to date.

Ada Lovelace. Augusta Ada King, Countess of Lovelace (10 December 1815 – 27 November 1852), born Augusta Ada Byron and now commonly known as Ada Lovelace, was an English mathematician and writer chiefly known for her work on Charles Babbage's early mechanical general-purpose computer, the Analytical Engine.

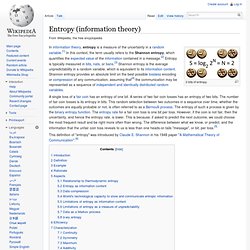

Her notes on the engine include what is recognised as the first algorithm intended to be carried out by a machine. Because of this, she is often described as the world's first computer programmer.[1][2][3] Ada described her approach as "poetical science" and herself as an "Analyst (& Metaphysician)". As a young adult, her mathematical talents led her to an ongoing working relationship and friendship with fellow British mathematician Charles Babbage, and in particular to Babbage's work on the Analytical Engine.

Biography. Childhood.

Image caption: Ada, aged four.

On 16 January 1816, Annabella (Ada's mother), at the behest of George (Lord Byron, Ada's father), left for her parents' home at Kirkby Mallory, taking one-month-old Ada with her.

Entropy (information theory).

Image caption: 2 bits of entropy.

A single toss of a fair coin has an entropy of one bit. A series of two fair coin tosses has an entropy of two bits; in general, the entropy of a sequence of fair coin tosses, in bits, equals the number of tosses. A sequence of independent random selections between two outcomes, whether the outcomes are equally probable or not, is often referred to as a Bernoulli process. The entropy of such a process is given by the binary entropy function, H(p) = -p log2 p - (1 - p) log2(1 - p), where p is the probability of one of the two outcomes. This definition of "entropy" was introduced by Claude E. Shannon.

Gödel's incompleteness theorems. Gödel's incompleteness theorems are two theorems of mathematical logic that establish inherent limitations of all but the most trivial axiomatic systems capable of doing arithmetic.

The theorems, proven by Kurt Gödel in 1931, are important both in mathematical logic and in the philosophy of mathematics. The two results are widely, but not universally, interpreted as showing that Hilbert's program to find a complete and consistent set of axioms for all mathematics is impossible, giving a negative answer to Hilbert's second problem.

The first incompleteness theorem states that no consistent system of axioms whose theorems can be listed by an "effective procedure" (i.e., any sort of algorithm) is capable of proving all truths about the relations of the natural numbers (arithmetic). For any such system, there will always be statements about the natural numbers that are true, but that are unprovable within the system.