Decision trees: missing values in ARFF (Weka). JaDTi: a decision tree implementation in Java. Classification via Decision Trees in WEKA: this example illustrates … C4.5. Data mining algorithms: don't go into the algorithms in detail; rather, hint at a few different principles.

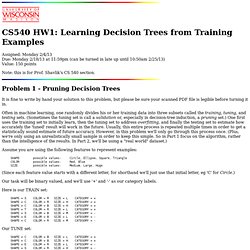

(Book: p. 78) Pseudo-code for 1R:

- For each attribute:
  - For each value of the attribute, make a rule as follows:
    - count how often each class appears;
    - find the most frequent class;
    - make the rule assign that class to this attribute-value.
  - Calculate the error rate of the rules.
- Choose the rules with the smallest error rate.

Note: "missing" is always treated as a separate attribute value.

Dealing with numeric attributes: numeric attributes are discretized, i.e. the range of the attribute is divided into a set of intervals:

- Instances are sorted according to the attribute's values.
- Breakpoints are placed where the (majority) class changes (so that the total error is minimized).
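The nominal-attribute part of the 1R procedure can be sketched in Java as below. This is not the book's code: the class name `OneR`, the row layout (a `String[]` of attribute values with the class label last) and the use of `null` for a missing value, mapped to the ARFF-style token `"?"`, are assumptions made for illustration. Numeric discretization is left out.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Minimal 1R sketch for nominal attributes (hypothetical). Assumed layout: each row is a
 * String[] of attribute values with the class label as the last element; null means "missing".
 */
class OneR {
    static final String MISSING = "?"; // "missing" becomes its own attribute value

    /** The chosen attribute, its value -> class rules, and the number of training errors. */
    record Rule(int attribute, Map<String, String> valueToClass, int errors) {}

    static Rule learn(List<String[]> data) {
        int classIndex = data.get(0).length - 1;
        Rule best = null;
        for (int a = 0; a < classIndex; a++) {                     // for each attribute
            Map<String, Map<String, Integer>> counts = new HashMap<>();
            for (String[] row : data) {                             // count classes per attribute value
                String value = row[a] == null ? MISSING : row[a];
                counts.computeIfAbsent(value, k -> new HashMap<>())
                      .merge(row[classIndex], 1, Integer::sum);
            }
            Map<String, String> rules = new HashMap<>();
            int errors = 0;
            for (Map.Entry<String, Map<String, Integer>> e : counts.entrySet()) {
                // find the most frequent class and make the rule assign it to this value
                Map.Entry<String, Integer> majority =
                        Collections.max(e.getValue().entrySet(), Map.Entry.comparingByValue());
                rules.put(e.getKey(), majority.getKey());
                int total = e.getValue().values().stream().mapToInt(Integer::intValue).sum();
                errors += total - majority.getValue();              // instances this rule misclassifies
            }
            if (best == null || errors < best.errors()) {           // keep the smallest error rate
                best = new Rule(a, rules, errors);
            }
        }
        return best;
    }
}
```

Because every attribute is scored on the same instances, comparing raw error counts is equivalent to comparing error rates. When two attributes tie, this sketch keeps the earlier one; ties between classes for a single value are broken arbitrarily.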

Pseudocodes, hakank.org.


Java code for decision tree algorithm.

CS 540 - Homework 1. Note: this is for Prof. Shavlik's CS 540 section.

Problem 1 - Pruning Decision Trees. It is fine to write your solution to this problem by hand, but please be sure your scanned PDF file is legible before turning it in. Often in machine learning, one randomly divides the training data into three subsets called the training, tuning, and testing sets. (Sometimes the tuning set is called a validation set or, especially in decision-tree induction, a pruning set.)
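A random three-way division like the one described can be sketched in Java as follows. The class `ThreeWaySplit`, its fraction parameters and the fixed seed are hypothetical choices for illustration; the homework does not prescribe them. The middle subset is the tuning (pruning) set that a pruning procedure would consult.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/** Hypothetical helper: randomly divide examples into training, tuning and testing sets. */
class ThreeWaySplit {
    /**
     * Returns [train, tune, test]. trainFrac and tuneFrac are assumed to lie in [0, 1];
     * whatever remains after the first two subsets becomes the testing set.
     */
    static <T> List<List<T>> split(List<T> data, double trainFrac, double tuneFrac, long seed) {
        List<T> shuffled = new ArrayList<>(data);
        Collections.shuffle(shuffled, new Random(seed)); // seeded so the division is reproducible
        int n = shuffled.size();
        int nTrain = Math.min(n, (int) Math.round(trainFrac * n));
        int nTune = Math.min(n - nTrain, (int) Math.round(tuneFrac * n));
        List<T> train = new ArrayList<>(shuffled.subList(0, nTrain));
        List<T> tune = new ArrayList<>(shuffled.subList(nTrain, nTrain + nTune));
        List<T> test = new ArrayList<>(shuffled.subList(nTrain + nTune, n));
        return List.of(train, tune, test);
    }
}
```

For instance, `split(examples, 0.6, 0.2, 42L)` on 10 examples yields subsets of sizes 6, 2 and 2 that together contain every example exactly once.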

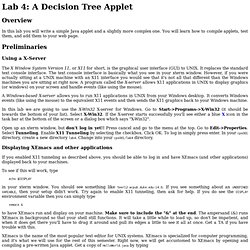

Assume you are using the following features to represent examples:

- SHAPE, possible values: Circle, Ellipse, Square, Triangle
- COLOR, possible values: Red, Blue
- SIZE, possible values: Medium, Large, Huge

(Since each feature value starts with a different letter, for shorthand we'll just use that initial letter, e.g. 'C' for Circle.) Our task will be binary-valued, and we'll use '+' and '-' as our category labels.

Lab 4: A Decision Tree Applet. Overview: in this lab you will write a simple Java applet and a slightly more complex one.

You will learn how to compile applets, test them, and add them to your web page.

Preliminaries. Using an X server: the X Window System Version 11, or X11 for short, is the graphical user interface (GUI) to UNIX. A Windows-based X server allows you to run X11 applications in UNIX from your Windows desktop. In this lab we are going to use the X-Win32 X server for Windows. Open up an xterm window, but don't log in yet!

Displaying XEmacs and other applications: if you enabled X11 tunneling as described above, you should be able to log in and have XEmacs (and other applications) displayed back to your machine. To see if this will work, type `echo $DISPLAY` in your xterm window. Then type `xemacs &` to have XEmacs run and display on your machine.

www.csc.liv.ac.uk/~khan/Tree.java. aima-java.googlecode.com/svn/trunk/aima-core/src/main/java/aima/core/learning/learners/DecisionTreeLearner.java.

Hints for CS572 Lab2. Lab 2: Implementing a Decision Tree Based Learning Agent in … Out: Nov 11, 2002. Due: Dec 2, 2002. ComS 572 Principles of Artificial Intelligence, Dimitris Margaritis, Department of Computer Science, Iowa State University. TA: Jie Bao, Dept. of Computer Science, Iowa State University, baojie@cs.iastate.

ID3 Decision Trees in Java. In a previous post, I explored how one might apply decision trees to solve a complex problem.

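This post will explore the code necessary to implement that decision tree. If you would like a full copy of the source code, it is available here in zip format.

Entropy.java – In Entropy.java, we are concerned with calculating the amount of entropy, or the amount of uncertainty or randomness, in a particular variable. For example, consider a classifier with two classes, YES and NO. If $p_{\text{YES}}$ and $p_{\text{NO}}$ are the proportions of examples in a set $S$ that belong to each class, entropy may be calculated in the following way:

$$\text{Entropy}(S) = -p_{\text{YES}} \log_2 p_{\text{YES}} - p_{\text{NO}} \log_2 p_{\text{NO}}$$

Tree.java – This tree class contains all our code for building our decision tree. Notice that gain is calculated as a function of all the values of the attribute; in the standard ID3 definition,

$$\text{Gain}(S, A) = \text{Entropy}(S) - \sum_{v \in \text{Values}(A)} \frac{|S_v|}{|S|}\,\text{Entropy}(S_v)$$

where $S_v$ is the subset of $S$ for which attribute $A$ has value $v$.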
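For illustration, here is a minimal Java sketch of entropy and ID3-style information gain, both computed from class counts. It is not the post's Entropy.java or Tree.java; the class name `InfoGain` and the count-array inputs are assumptions.

```java
import java.util.Arrays;

/** Hypothetical sketch of entropy and ID3 information gain over class counts. */
class InfoGain {
    /** Entropy in bits: -sum p_i log2 p_i, where p_i = counts[i] / total. */
    static double entropy(int... counts) {
        int total = Arrays.stream(counts).sum();
        double h = 0.0;
        for (int c : counts) {
            if (c == 0) continue;                 // 0 * log(0) is taken as 0
            double p = (double) c / total;
            h -= p * Math.log(p) / Math.log(2);   // change of base to log2
        }
        return h;
    }

    /**
     * Gain(S, A) = Entropy(S) - sum over values v of |S_v|/|S| * Entropy(S_v).
     * parentCounts are the class counts of S; subsets[v] are the class counts of S_v.
     */
    static double gain(int[] parentCounts, int[][] subsets) {
        int total = Arrays.stream(parentCounts).sum();
        double remainder = 0.0;
        for (int[] s : subsets) {
            int size = Arrays.stream(s).sum();
            remainder += (double) size / total * entropy(s); // weighted entropy after the split
        }
        return entropy(parentCounts) - remainder;
    }
}
```

For a set with 9 YES and 5 NO examples, `entropy(9, 5)` is about 0.940 bits, and splitting it into subsets with counts (2, 3), (4, 0) and (3, 2) gives a gain of about 0.247.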

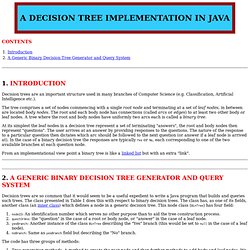

DiscreteAttribute.java

Node.java – Node.java holds our information in the tree.

FileReader.java – The least interesting class in the code. playtennis.data is only a simple text file that describes the learned attribute “play tennis” for a particular learning episode.

Decision trees. Decision trees are an important structure used in many branches of Computer Science (e.g. Classification, Artificial Intelligence etc.). The tree comprises a set of nodes commencing with a single root node and terminating at a set of leaf nodes; in between are located body nodes. The root and each body node have connections (called arcs or edges) to at least two other body or leaf nodes. A tree where the root and body nodes have uniformly two arcs each is called a binary tree. At its simplest, the leaf nodes in a decision tree represent a set of terminating "answers", and the root and body nodes represent "questions".
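The question/answer structure just described can be sketched in Java as follows: a root or body node stores its question (an attribute name) and one arc per possible answer value, while a leaf stores a terminating answer. The class `DecisionNode` and its methods are hypothetical and not taken from the post's Node.java; for brevity the sketch does not enforce the at-least-two-arcs rule.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical decision tree node: a "question" (root/body node) or an "answer" (leaf). */
class DecisionNode {
    private final String attribute; // question asked at this node; null for a leaf
    private final String answer;    // terminating answer; null for a root/body node
    private final Map<String, DecisionNode> arcs = new HashMap<>(); // attribute value -> child

    private DecisionNode(String attribute, String answer) {
        this.attribute = attribute;
        this.answer = answer;
    }

    static DecisionNode question(String attribute) {
        return new DecisionNode(attribute, null);
    }

    static DecisionNode leaf(String answer) {
        return new DecisionNode(null, answer);
    }

    /** Adds an arc labelled with an attribute value; returns this node for chaining. */
    DecisionNode branch(String value, DecisionNode child) {
        arcs.put(value, child);
        return this;
    }

    boolean isLeaf() {
        return attribute == null;
    }

    /** Starts at this node and follows the arc matching each answer until a leaf is reached. */
    String classify(Map<String, String> instance) {
        DecisionNode node = this;
        while (!node.isLeaf()) {
            DecisionNode next = node.arcs.get(instance.get(node.attribute));
            if (next == null) {
                throw new IllegalArgumentException("no arc for attribute " + node.attribute);
            }
            node = next;
        }
        return node.answer;
    }
}
```

For example, `DecisionNode.question("outlook").branch("overcast", DecisionNode.leaf("yes"))` builds a root that asks about `outlook` and answers "yes" along the `overcast` arc; further `branch` calls add the remaining arcs.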