HOWTO scrape websites with Ruby and Mechanize. Web scraping is an approach for extracting data from websites that don’t provide an official API.

Web scraping code is inherently “brittle” (i.e. prone to breaking over time due to changes in the website content and structure), but it’s a flexible technique with a broad range of uses. Note that web scraping may be against the terms of use of some websites, so please be a good web citizen and check first.

Getting started

Firstly, make sure you have the mechanize gem installed. Then copy/paste the following short Ruby script:

require 'mechanize'
mechanize = Mechanize.new
page = mechanize.get('
puts page.title

Run the script with Ruby, and you should see the page title of the Stack Overflow website.

Extracting data

Mechanize uses the nokogiri gem internally to parse HTML responses.

Scraping the Web with Ruby. When you run into a site that doesn't have an API, but you'd like to use the site's data, sometimes all you can do is scrape it!

In this article, we'll cover using Capybara and PhantomJS along with some standard libraries like CSV, GDBM, and OpenStruct to turn a website's content into CSV data. As an example, I'll be scraping my own site. If you are planning to scrape a site, keep its Terms of Service in mind. Many sites don't allow scraping, so be a good web citizen and respect the wishes of the site's owners. Our goal here is to dump out a CSV file of the blog articles here on ngauthier.com that contains the titles, dates, URLs, summaries, and full body text. The first thing we're going to do is get Capybara running and just dump the fields from the front page out to the screen to make sure everything's working. First, we're going to load up Capybara and Poltergeist.
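Setting the browser automation aside, the CSV output described above can be sketched with the standard library alone. This is a minimal sketch, not the article's own code: the `articles` records, their field values, the `example.com` URLs, and the `articles_to_csv` helper are all hypothetical stand-ins for data a scraper would collect.

```ruby
require 'csv'
require 'ostruct'

# Columns named in the stated goal: titles, dates, URLs, summaries, body text.
FIELDS = %i[title date url summary body].freeze

# Hypothetical records standing in for scraped articles (invented values).
articles = [
  OpenStruct.new(
    title: 'First post',
    date: '2014-01-01',
    url: 'http://example.com/first-post',
    summary: 'A short summary, with a comma',
    body: "First paragraph.\nSecond paragraph."
  ),
  OpenStruct.new(
    title: 'Second post',
    date: '2014-02-01',
    url: 'http://example.com/second-post',
    summary: 'Another summary',
    body: 'Single paragraph body.'
  )
]

# Hypothetical helper: builds CSV text with a header row and one row per article.
# CSV takes care of quoting fields that contain commas or newlines.
def articles_to_csv(articles)
  CSV.generate do |csv|
    csv << FIELDS.map(&:to_s)
    articles.each do |article|
      csv << FIELDS.map { |field| article[field] }
    end
  end
end

csv_text = articles_to_csv(articles)
puts csv_text
```

Keeping the column list in one constant means the header row and the data rows cannot drift apart when a field is added or removed.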

Next up, we're going to visit my site.

Web Content Scrapers. Extract information from the web with Ruby. Take advantage of web scraping software and website APIs for automated data extraction. Websites no longer cater solely to human readers.

Many sites now support APIs that enable computer programs to harvest information. Screen scraping, the time-honored technique of parsing HTML pages into more-digestible forms, can still come in handy. But opportunities to simplify web data extraction through the use of APIs are increasing rapidly. According to ProgrammableWeb, more than 10,000 website APIs were available at the time this article was published, an increase of more than 3,000 over the preceding 15 months.

Ruby - How to scrape data from another website using Rails 3.

Web scraping with Ruby and Nokogiri. If you're building a site that compares data from different sources, chances are you might find yourself dealing with a couple of sites that don't have an API.

But us web developers, we're a resourceful bunch, and we won't let a little thing like a lack of accessible information stop us, right? Right! Before APIs were the norm, an easy way to grab information on the interwebs was through web scraping.

Web Scraping with Ruby using Mechanize & Nokogiri gems. Web scraping can most succinctly be described as "creating an API where there is none".

It is mainly used to harvest data from the web that cannot easily be downloaded manually or that is not offered as a direct download. Scraped data can be used for a variety of purposes, such as online price comparison, detecting changes in web page content, real-time data integration, and web mashups. Scripting languages are well suited to web scraping because they provide an interactive interpreter, which helps a lot when you are developing the scraper.

Being able to try out new XPath combinations and get instant feedback saves a huge amount of time while developing. Scripting languages like Python, Ruby and Perl are popular choices for web scraping. Most of the popular libraries, like 'Mechanize', have been ported to most of the popular scripting languages.

Data Scraping and More With Ruby, Nokogiri, Sinatra and Heroku. In this article, you will learn the basics of scraping and parsing data from websites with Ruby and Nokogiri.

You will then take this information and build a sample application, first as a command line tool and then as a full Sinatra web app. Finally, you will deploy your new application on the Heroku hosting platform. There is a screencast that accompanies this article. If you are interested in the process behind many of the thoughts and code in this article, watch the screencast. If you just want the facts, read the article. Data scraping is the process of extracting data from output that was originally intended for humans. Nokogiri is a Ruby gem that extracts data from web pages using CSS selectors. Let’s look at an example. In this page, there are three bits of interesting information: price, time, and inventory status.

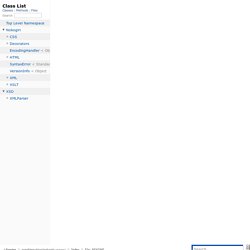

The above example is very simple, but it hopefully gets the point across about how you can target content for extraction using CSS selectors.

File: README - Documentation for sparklemotion/nokogiri (master). Nokogiri (鋸) is an HTML, XML, SAX, and Reader parser.

Among Nokogiri's many features is the ability to search documents via XPath or CSS3 selectors. XML is like violence - if it doesn’t solve your problems, you are not using enough of it.

* XPath 1.0 support for document searching
* CSS3 selector support for document searching
* XML/HTML builder

Nokogiri parses and searches XML/HTML very quickly, and also has correctly implemented CSS3 selector support as well as XPath 1.0 support.

Before filing a bug report, please read our submission guidelines at:
* The Nokogiri mailing list is available here:
* The bug tracker is available here:

Tutorials - Nokogiri 鋸.