A tale of two cameras – Made by Many. I’m going to share some of the thinking and code behind the Picle iPhone app, starting with the camera functionality.

This will involve showing some code snippets and describing classes and frameworks found in the Apple iOS SDK. At the heart of the Picle app is the ability to use the iPhone camera and microphone in quick succession. The user experience of this functionality is critical to the feel of the app, so plenty of work has been done both before and after the initial release. Indeed, the next release will have this completely reworked.

iNVASIVECODE. In previous posts, I showed you how to create a custom camera using AVFoundation and how to process an image with the Accelerate framework.

Let’s now combine both results to create (quasi-) real-time video processing (I’ll explain later what I mean by quasi). Custom camera preview.

iPhone - Accessing iOS 6 new APIs for camera exposure and shutter speed. iOS - How to specify the exposure, focus and white balance to the video recording? Objective-C - How to take a photo automatically at focus in iPhone SDK? iOS - Record video with overlay text and image.

iOS Camera Overlay Example Using AVCaptureSession. I made a post back in 2009 on how to overlay images, buttons and labels on a live camera view using UIImagePicker.

Well, since iOS 4 came out there has been a better way to do this, and it is high time I showed you how. You can get the new project’s source code on GitHub. This new example project is functionally the same as the other. It looks like this: AR Overlay Example App. All the app does is let you push the “scan” button, which then shows the “scanning” label for two seconds.

OpenCV Tutorial - Part 4 - Computer Vision Talks. This is the fourth part of the OpenCV Tutorial.

In this part, the solution to the annoying iOS video capture orientation bug will be described. Of course, that’s not all. There are some new features: we will add processing of saved photos from your photo album. I’ll also introduce minor interface improvements and show you how to disable unsupported APIs like video capture in your app so that it runs on the iOS Simulator. Startup images. First of all, I would like to say a big thank you to my friend who made nice startup images for this project. Device, Interface and Video orientation.

Record Video With AVCaptureSession – iOS Developers. Apple Resources: Media Capture. The following are typically required:

- AVCaptureDevice – represents the input device (camera or microphone)
- AVCaptureInput – (a concrete subclass of) to configure the ports from the input device (has one or more input ports which are instances of AVCaptureInputPort)
- AVCaptureOutput – (a concrete subclass of) to manage the output to a movie file or still image (accepts data from one or more sources, e.g. an AVCaptureMovieFileOutput object accepts both video and audio data)
- AVCaptureSession – coordinates the data flow from the input to the output
- AVCaptureVideoPreviewLayer – shows the user what a camera is recording
- AVCaptureConnection – connection between a capture input and a capture output in a capture session. Can be used to enable or disable the flow of data from a given input or to a given output, and also to monitor the average and peak power levels in audio channels.

Notifications.

How to Make an Augmented Reality Game with Open CV - Part Two. Make the world your virtual target range in this Augmented Reality tutorial. This is the second part of a four-part series on implementing AR (Augmented Reality) in your games and apps.

How to Make an Augmented Reality Game with Open CV. Make the world your virtual target range in this Augmented Reality tutorial. Looking to make your neighbor’s car explode by an errant cruise missile without the hassle of court dates?

Jj0b/AROverlayExample.

OpenCV iOS - Video Processing. The OpenCV library comes as a so-called framework, which you can directly drag-and-drop into your Xcode project.

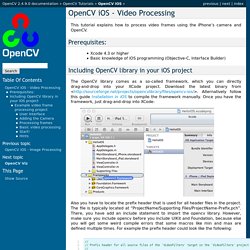

Download the latest binary from <. Alternatively, follow the guide Installation in iOS to compile the framework manually. Once you have the framework, just drag and drop it into Xcode. You also have to locate the prefix header that is used for all header files in the project. The file is typically located at “ProjectName/Supporting Files/ProjectName-Prefix.pch”. There, you have to add an include statement to import the OpenCV library.

iOS - iPhone SDK record video then email it.

Chapter 26. Object Detection by Color: Using the GPU for Real-Time Video Image Processing. GPU Gems 3 is now available for free online!

Ralph Brunner (Apple), Frank Doepke (Apple), Bunny Laden (Apple). In this chapter, we describe a GPU-based technique for finding the location of a uniquely colored object in a scene and then utilizing that position information for video effects. Object detection and tracking have applications in robotics, surveillance, and other fields.
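As a rough CPU-side illustration of what "finding the location of a uniquely colored object" can mean (this is not the chapter's Core Image or GPU code), the sketch below masks pixels that are close to a target color and averages their coordinates to get a centroid. The names `ColorCentroid` and `find_color_centroid`, the tightly packed RGBA8 buffer layout, and the Euclidean RGB tolerance are all assumptions made for this example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical result type: centroid in pixel coordinates.
   x and y are meaningful only when found is true. */
typedef struct {
    bool found;
    double x;
    double y;
    size_t count; /* number of pixels that matched the target color */
} ColorCentroid;

/* Scan a tightly packed RGBA8 image (assumed layout: 4 bytes per pixel,
   rows stored top to bottom) and return the centroid of all pixels whose
   RGB distance to (r, g, b) is at most `tolerance`. Alpha is ignored. */
ColorCentroid find_color_centroid(const uint8_t *pixels,
                                  size_t width, size_t height,
                                  uint8_t r, uint8_t g, uint8_t b,
                                  int tolerance)
{
    ColorCentroid result = { false, 0.0, 0.0, 0 };
    double sum_x = 0.0;
    double sum_y = 0.0;
    long max_dist2 = (long)tolerance * (long)tolerance;

    assert(pixels != NULL);

    for (size_t y = 0; y < height; y++) {
        for (size_t x = 0; x < width; x++) {
            const uint8_t *p = pixels + 4 * (y * width + x);
            long dr = (long)p[0] - (long)r;
            long dg = (long)p[1] - (long)g;
            long db = (long)p[2] - (long)b;
            long dist2 = dr * dr + dg * dg + db * db;

            /* The "mask" step: keep only pixels near the target color. */
            if (dist2 <= max_dist2) {
                sum_x += (double)x;
                sum_y += (double)y;
                result.count++;
            }
        }
    }

    /* The "centroid" step: average the coordinates of the masked pixels. */
    if (result.count > 0) {
        result.found = true;
        result.x = sum_x / (double)result.count;
        result.y = sum_y / (double)result.count;
    }
    return result;
}
```

A caller would pass one decoded video frame and the color to look for, then check `found` before using `x` and `y`. A GPU version would do the same two steps per pixel in parallel instead of in nested loops.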

Because our program uses the Core Image image-processing framework on Mac OS X, we begin the chapter with a brief description of Core Image, why we use it, and how it works. 26.1 Image Processing Abstracted. Core Image is an image-processing framework built into Mac OS X. 26.2 Object Detection by Color.

GPU-accelerated video processing on Mac and iOS. I've been invited to give a talk at the SecondConf developer conference in Chicago, and I'm writing this to accompany it.

I'll be talking about using the GPU to accelerate processing of video on Mac and iOS. The slides for this talk are available here. The source code samples used in this talk will be linked throughout this article. Additionally, I'll refer to the course I teach on advanced iPhone development, which can be found on iTunes U here. UPDATE (7/12/2011): My talk from SecondConf on this topic can now be downloaded from the conference videos page here.

BradLarson/GPUImage.

Computer vision with iOS Part 2: Face tracking in live video – Aptogo. Update: Nov 28 2011 – The OpenCV framework has been rebuilt using opencv svn revision 7017. Introduction.

Using the camera to grab frames. Been playing around with AV Foundation. I've been trying to set up a camera using it and then grab some frames so I can manipulate them. I'm struggling. I've watched the WWDC videos on this and have looked at the sample code but I've still not got it working. I'd be grateful if someone could help me - I'm really trying to make something really worthwhile.

There's a technical note here - Technical Q&A QA1702: How to capture video frames from the camera as images using AV Foundation. My code looks like this:

in the h file

```objc
#import <UIKit/UIKit.h>
#import <AVFoundation/AVFoundation.h>
#import <QuartzCore/QuartzCore.h>
#import <CoreMedia/CoreMedia.h>

@interface testerViewController : UIViewController {
    AVCaptureSession* session;
    AVCaptureDevice* device;
    NSError* error;
    AVCaptureInput* input;
    AVCaptureStillImageOutput* output;
}
@end
```

in the m file

This is throwing up CVPixel errors.