[Solr-user] Autocomplete: match words anywhere in the token. Chantal Ackermann wrote: "I definitely need to have a look at how to use facetting in combination with multivalued fields for autocomplete.


" My one kind of crazy idea is to (ab)use the Highlighting Component. If you make a query for auto-suggest terms based on facets, using Chantal's technique, but in that query you ask the Highlighting component to highlight matches only from the (non-tokenized) field you are faceting on, then this may possibly give you the terms from the multi-valued field that actually matched, and therefore which ones to actually offer as auto-suggestions. The idea would be that this would give you matching terms even though you've done various transformations (n-gramming, perhaps tokenization, or stemming, other normalization) in the field that you are actually searching upon, but the highlighting component would magically figure out which full non-transformed terms from the other (faceted) field should be considered matches.
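A minimal sketch of what such a request might look like, written as a Python helper that only builds the query parameters. The field names `suggest_ngram` (the transformed field that is searched) and `suggest_raw` (the non-tokenized field that is faceted and highlighted) are hypothetical, and whether the highlighter really returns the matching raw values depends on the analysis chain, as the post itself cautions.

```python
from urllib.parse import urlencode


def build_suggest_params(user_input, search_field="suggest_ngram",
                         facet_field="suggest_raw", limit=10):
    """Build Solr parameters that search one field but facet and
    highlight on another. Both field names are assumptions."""
    return {
        "q": user_input,
        "df": search_field,              # default field to search
        "rows": limit,                   # highlighting needs returned docs
        "facet": "true",
        "facet.field": facet_field,
        "facet.mincount": 1,
        "hl": "true",
        "hl.fl": facet_field,            # highlight only the raw field
        "hl.requireFieldMatch": "false", # query terms come from another field
    }


if __name__ == "__main__":
    # Print the query string that would be appended to a /select URL.
    print(urlencode(build_suggest_params("sol")))
```

The highlighting section of the response would then have to be inspected per document to see which values of the faceted field, if any, were marked as matches.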

Cominvent AS - Enterprise search consultants. AutoComplete or AutoSuggest has in recent years become a “must-have” search feature.

Solr can do AutoComplete in a number of ways (such as Suggester, TermsComponent and Faceting using facet.prefix), but in this post we’ll consider a more advanced and flexible option, namely querying a dedicated Solr Core search index for the suggestions. You may think that this sounds heavy weight, but we’re talking small data here so it is really efficient and snappy! Even if it’s some work setting up, the benefits to this approach are really compelling:

- Suggest on multi word or sentences
- Suggests on prefix of whole line and/or individual words
- Full relevancy tuning capabilities of Solr (in contrast to a single frequency sorting from TermsComponent or Faceting)
- Increased recall using Phonetics, Fuzzy, Character normalization, or any other Solr feature
- Rich suggestions including thumbnails, extra texts etc
- Easy to mix different “categories” of suggestions, e.g.

So let’s get to it. Running the example 1. 2. Advanced Suggest-As-You-Type with Solr. In my previous post, I talked about implementing Search-As-You-Type using Solr.

In this post I’ll cover a closely related functionality called Suggest-As-You-Type. Here’s the use case: A user comes to your search-driven website to find something. And it is your goal to be as helpful as possible. Part of this is by making term suggestions as they type. Apache Solr vs ElasticSearch - the Feature Smackdown! SpatialSearch. Solr3.1 Many applications wish to combine location data with text data.

This is often called spatial search or geo-spatial search. Most of these applications need to do several things:

- Represent spatial data in the index
- Filter by some spatial concept such as a bounding box or other shape
- Sort by distance
- Score/boost by distance

NOTE: Unless otherwise specified, all units of distance are kilometers and points are in degrees of latitude,longitude. Lucene 4 has a new spatial module that replaces the older one described below. If you haven't already, download Solr, start the example server and index the example data as shown in the solr tutorial.
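As a rough illustration of the filter and sort steps, the following Python sketch builds request parameters for a radius filter plus a distance sort. It assumes a LatLonType field named `store`, as in the Solr example data; the coordinates and radius in the usage line are made-up values.

```python
from urllib.parse import urlencode


def spatial_params(lat, lon, radius_km, field="store"):
    """Parameters for filtering by distance and sorting nearest-first."""
    return {
        "q": "*:*",
        "fq": "{!geofilt}",        # keep documents within d km of pt
        "sfield": field,           # spatial field to filter and sort on
        "pt": f"{lat},{lon}",      # centre point as latitude,longitude
        "d": radius_km,            # distance in kilometers
        "sort": "geodist() asc",   # nearest documents first
    }


if __name__ == "__main__":
    # Illustrative centre point and a 5 km radius.
    print(urlencode(spatial_params(45.15, -93.85, 5)))
```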

In the example data, certain documents have a field called "store" (with a fieldType named "location" implemented via LatLonType). <field name="store">45.17614,-93.87341</field><! Apache Solr for TYPO3 CMS - Solr. Installing Solr for TYPO3 - Mimi's Blog. -- Updated on 11.6.2012. High-performance numeric range queries with Solr 1.4/Lucene 2.9 - Sybit Blog. Suggester. A common need in search applications is suggesting query terms or phrases based on incomplete user input.

These completions may come from a dictionary that is based upon the main index or upon any other arbitrary dictionary. It's often useful to be able to provide only top-N suggestions, either ranked alphabetically or according to their usefulness for an average user (e.g. popularity, or the number of returned results). Solr 3.1 includes a component called Suggester that provides this functionality. Suggester reuses much of the SpellCheckComponent infrastructure, so it also reuses many common SpellCheck parameters, such as spellcheck=true or spellcheck.build=true, etc. The way this component is configured in solrconfig.xml is also very similar: Query Syntax - SolrTutorial.com. Lucene has a custom query syntax for querying its indexes.

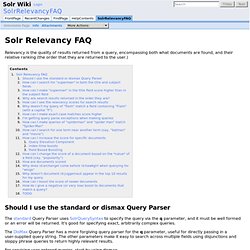

Unless you explicitly specify an alternative query parser such as DisMax or eDisMax, you're using the standard Lucene query parser by default. Here are some query examples demonstrating the query syntax. Keyword matching Search for word "foo" in the title field. title:foo. SolrRelevancyFAQ. Relevancy is the quality of results returned from a query, encompassing both what documents are found, and their relative ranking (the order that they are returned to the user.)

Should I use the standard or dismax Query Parser? The standard Query Parser uses SolrQuerySyntax to specify the query via the q parameter, and it must be well formed or an error will be returned. It's good for specifying exact, arbitrarily complex queries. The DisMax Query Parser has a more forgiving query parser for the q parameter, useful for directly passing in a user-supplied query string. The DisMax Query Parser - Solr Reference Guide - Lucid Imagination. The DisMax query parser is designed to process simple phrases (without complex syntax) entered by users and to search for individual terms across several fields using different weighting (boosts) based on the significance of each field.

Additional options enable users to influence the score based on rules specific to each use case (independent of user input). In general, the DisMax query parser's interface is more like that of Google than the interface of the 'standard' Solr request handler. This similarity makes DisMax the appropriate query parser for many consumer applications.
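To make the per-field weighting concrete, here is a small Python sketch that assembles DisMax request parameters from a user query and a mapping of fields to boosts. The field names `title` and `body` and their boost values are illustrative assumptions, not taken from any particular schema.

```python
from urllib.parse import urlencode


def dismax_params(user_query, field_boosts):
    """Build DisMax parameters; field_boosts maps field name to boost."""
    qf = " ".join(f"{field}^{boost}" for field, boost in field_boosts.items())
    return {
        "defType": "dismax",  # select the DisMax query parser
        "q": user_query,      # raw user input, passed through as typed
        "qf": qf,             # query fields with their boosts
    }


if __name__ == "__main__":
    # Hypothetical schema: matches in title count twice as much as body.
    print(urlencode(dismax_params("roses are red", {"title": 2.0, "body": 1.0})))
```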

It accepts a simple syntax, and it rarely produces error messages. The DisMax query parser supports an extremely simplified subset of the Lucene QueryParser syntax. Interested in the technical concept behind the DisMax name? Getting Started - Solr Reference Guide - Lucid Imagination. Solr makes it easy for programmers to develop sophisticated, high-performance search applications with advanced features such as faceting (arranging search results in columns with numerical counts of key terms).

Solr builds on another open source search technology: Lucene, a Java library that provides indexing and search technology, as well as spellchecking, hit highlighting and advanced analysis/tokenization capabilities. Both Solr and Lucene are managed by the Apache Software Foundation (www.apache.org). The Lucene search library currently ranks among the top 15 open source projects and is one of the top 5 Apache projects, with installations at over 4,000 companies. Lucene/Solr downloads have grown nearly ten times over the past three years, with a current run-rate of over 6,000 downloads a day. Solr - User - Querying Solr Index for date fields. Nested Queries in Solr. Nested Queries in Lucene Syntax To embed a query of another type in a Lucene/Solr query string, simply use the magic field name _query_.

The following example embeds a lucene query type:poems into another lucene query: text:"roses are red" AND _query_:"type:poems" Now of course this isn’t too useful on its own, but it becomes very powerful in conjunction with the query parser framework and local params, which allow us to change the types of queries. Apache Solr - Do only what matters.
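The nested-query syntax described above can be sketched with a small Python helper that wraps a sub-query in `_query_:"..."` and optionally prefixes it with local params to switch the parser. The helper name `nested` and the `qf=title` argument are hypothetical choices for illustration.

```python
def nested(query, parser=None, **local_params):
    """Wrap a sub-query in the _query_ magic field.

    If parser is given, a local-params prefix such as {!dismax qf=title}
    is added so the sub-query is parsed by that query parser.
    """
    prefix = ""
    if parser:
        args = "".join(f" {key}={value}" for key, value in local_params.items())
        prefix = "{!" + parser + args + "}"
    # Backslashes and double quotes must be escaped inside the quoted value.
    body = (prefix + query).replace("\\", "\\\\").replace('"', '\\"')
    return f'_query_:"{body}"'


if __name__ == "__main__":
    # Same shape as the example from the post.
    print('text:"roses are red" AND ' + nested("type:poems"))
    # Embedding a DisMax sub-query through local params.
    print('type:poems AND ' + nested("roses", parser="dismax", qf="title"))
```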

Apache SolrCloud ZooKeeper ClusterJ LeaderLatch, Barrier and NodeCache Overseer and OverseerCollectionProcessor. We do not want to have to write a separate init script for Solr. If we are already running Tomcat, and if Tomcat already has an init script, we should deploy Solr using Tomcat. My unanswered questions on Solr. Unread articles. Miscellaneous. Running Apache Solr in a cloud. I was having a problem with using wildcard. Try using EdgeNGrams. I was also having a problem with phrase synonym. How to escape special characters? Lucene supports escaping special characters that are part of the query syntax. Why is "Medical" highlighted when a user searches for "medication"? This is because of SnowballPorterFilter. What are the differences between string and text? String is not analyzed. Xml - Solr Indexing Splitting Field On Delimiter. Solr Post.jar – post to different Solr port other than 8983 « Borort's Diaries.
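On the question of escaping special characters raised above, a minimal Python sketch might prefix each character that has meaning in the Lucene query syntax with a backslash. This version is deliberately conservative and escapes every `&` and `|` individually rather than only the `&&` and `||` operators; the function name is an assumption.

```python
import re

# Characters with special meaning in the Lucene query syntax.
_SPECIAL = re.compile(r'([+\-!(){}\[\]^"~*?:\\&|])')


def escape_lucene(text):
    """Return text with each special character preceded by a backslash."""
    return _SPECIAL.sub(r"\\\1", text)


if __name__ == "__main__":
    # A user-supplied string containing a colon and parentheses.
    print(escape_lucene("title:(1+1)"))
```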

UTF-8 encoding problem on one of two Solr setups. Install SOLR 1.4.1 on Ubuntu 10.10 Maven Maverick Multicore using Tomcat 6 Complete Guide Yodi Aditya Researcher + Traveller. Installing Apache Lucene SOLR 1.4.1 in Ubuntu 10.10 using Tomcat 6 is as easy as in other versions. The Tomcat 6 paths have changed in Ubuntu 10.10. But don't worry, it's not too difficult. If you are using Ubuntu Karmic Koala 10.04, you can go to my previous article. Solr Enterprise Search. The book is focused on the 3.1 version of Solr. The content is divided into ten thematic chapters, each of which consists of a few to several subsections.

The book follows the cookbook convention, which means that it is not an A-to-Z guide to Solr; it is a collection of ready-made solutions to some of the problems that can be encountered while working with Solr. The book will include topics such as:

- Indexing the data in different formats and forms
- Using and understanding the administration panel
- Different techniques of data grouping
- Solr performance lifting techniques
- Creation of your own Solr modules
- Indexing the data using the Data Import Handler
- The use of phrases, sorting and spatial searching

If you are interested, please refer to the Packt Publishing page: We would like the book to be received as well as possible, and because we have found some mistakes in it we decided to write a short errata. Page 20. And autocomplete (part 1) Almost everyone has seen what the autocomplete feature looks like.

No wonder, then, that Solr provides mechanisms by which we can build such functionality. In today’s entry I will show you how you can add an autocomplete mechanism using faceting. Index Suppose you want to show some hints to the user in an on-line store, for example you want to show product names. Suppose that our index is composed of the following fields: And autocomplete (part 2) In the previous part I showed how the faceting mechanism can be used to achieve the autocomplete functionality. Today I’ll show you how to use a component called Suggester to implement autocomplete functionality. The beginning. CoreAdmin. Multiple cores let you have a single Solr instance with separate configurations and indexes, with their own config and schema for very different applications, but still have the convenience of unified administration. Individual indexes are still fairly isolated, but you can manage them as a single application, create new indexes on the fly by spinning up new SolrCores, and even make one SolrCore replace another SolrCore without ever restarting your Servlet Container.
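As a sketch of how one core might replace another, the following Python helper builds a CoreAdmin URL; the SWAP action exchanges the names of two cores. The base URL and the core names `live` and `staging` are assumptions for illustration, and the helper only constructs the URL without sending a request.

```python
from urllib.parse import urlencode


def core_admin_url(base_url, action, **params):
    """Build a CoreAdmin request URL for the given action."""
    query = urlencode({"action": action, **params})
    return f"{base_url}/admin/cores?{query}"


if __name__ == "__main__":
    # Hypothetical setup: swap a freshly built core into production.
    print(core_admin_url("http://localhost:8983/solr", "SWAP",
                         core="live", other="staging"))
```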

See MultipleIndexes. Solr Schema. If you’ve ever worked on a project that involved Coldfusion’s bundled version of Verity, you’ve no doubt run into the issue of trying to confine your fields into the structure that Verity imposes, and those custom fields are really precious in these instances. About 6 months ago, I ran into an issue with a search project where I had about 125,000 documents to index. Since we also wanted to be able to use the indexes for some other projects, I was a bit nervous to commit almost the entire allotment of indexable objects to one collection. This launched me into writing a custom search engine and indexer using Lucene and slapping Coldfusion around the responses to do things that Verity did. However, once the projects were complete, I never really got around to making it easy to use. It does cool stuff like search across multiple collections, context highlighting, relevancy calculations, term vector calculations, “did you mean”, etc.