E-marketing: the companies that have successfully exploited Big Data. Google Trends shows the Big Data phenomenon emerging in early 2012.

What exactly does "Big data" mean, and how can you put it to concrete use? Is Big data just a buzzword? A look at practical cases and operational tools for tapping this new El Dorado. Big data is a large volume of raw data, and it only has value once it is processed. This data must therefore be refined before it can be used operationally.

Big data in all its forms. Joyent Solution for Hadoop: store, process, and analyze data in any format with high performance and at scale.

The Joyent Solution for Hadoop is a cloud-based hosting environment for big data projects built on Apache Hadoop. We provide all the utilities and tools needed to manage the vast streams of data and information your business generates. By combining the scale and power of Joyent Cloud with the data management capabilities of Apache Hadoop, organizations and enterprises can use advanced data management services without having to invest in and manage their own server environment.

7 Myths on Big Data: Avoiding Bad Hadoop and Cloud Analytics Decisions. Hadoop is an open source legend built by software heroes.

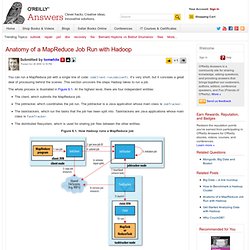

Yet legends can sometimes be surrounded by myths, and these myths can lead IT executives down a path wearing rose-colored glasses. Data and data usage are growing at an alarming rate. Just look at the analysts' numbers. IDC predicts a 53.4% growth rate for storage this year, and AT&T claims 20,000% growth in its wireless data traffic over the past five years. If you look at your own communication channels, you are almost guaranteed to find that the internet content, emails, app notifications, social messages, and automated reports you receive every day have increased dramatically. This is why companies ranging from McKinsey to Facebook to Walmart are doing something about big data. Just as in the dot-com boom of the 1990s and the Web 2.0 boom of the 2000s, the big data trend will also lead companies to make some really bad assumptions and decisions.

The New Hadoop API 0.20.x. Anatomy of a MapReduce Job Run with Hadoop. You can run a MapReduce job with a single line of code: `JobClient.runJob(conf)`.

It's very short, but it conceals a great deal of processing behind the scenes. This section uncovers the steps Hadoop takes to run a job. The whole process is illustrated in Figure 6.1. At the highest level, there are four independent entities. The client submits the MapReduce job. The jobtracker coordinates the job run. The tasktrackers run the tasks that the job has been split into. The distributed filesystem (normally HDFS) is used for sharing job files between the other entities.

Why does Hadoop only launch a local job by default?

Scalding with CDH3U2 in a Maven project · twitter/scalding Wiki. This wiki page describes a procedure that should allow the dedicated reader to use Maven to create an executable jar file implementing Scalding. The resulting jar is ready for deployment on a CDH3U2 cluster.
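To make the processing hidden behind `JobClient.runJob(conf)` more concrete, here is a minimal single-process Python sketch of the phases a MapReduce job goes through: input splitting, map tasks, hash partitioning, sorting, and reduce tasks. It assumes a word-count job and is not Hadoop code. The names `run_job`, `word_count_mapper`, and `sum_reducer`, and the `num_splits`/`num_reducers` parameters, are hypothetical. In a real cluster, the map and reduce tasks run in parallel on the tasktrackers and exchange data over the network, which this sketch does not model.

```python
from collections import defaultdict
from typing import Callable, Iterator

# Hypothetical signatures for this sketch; these are not Hadoop types.
Mapper = Callable[[str], Iterator[tuple[str, int]]]
Reducer = Callable[[str, list[int]], int]


def word_count_mapper(line: str) -> Iterator[tuple[str, int]]:
    """Map function: emit (word, 1) for every whitespace-separated word."""
    for word in line.lower().split():
        yield word, 1


def sum_reducer(key: str, values: list[int]) -> int:
    """Reduce function: add up all the counts emitted for one key."""
    return sum(values)


def run_job(lines: list[str], mapper: Mapper, reducer: Reducer,
            num_splits: int = 2, num_reducers: int = 2) -> dict[str, int]:
    # 1. Divide the input into contiguous splits (in Hadoop 1.x the client
    #    computes the input splits before submitting the job).
    size = max(1, -(-len(lines) // num_splits))  # ceiling division
    splits = [lines[i:i + size] for i in range(0, len(lines), size)]

    # 2. Run one map task per split. Each emitted pair goes to the partition
    #    chosen by hashing its key (similar in spirit to Hadoop's default
    #    HashPartitioner), with one partition per reduce task.
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for split in splits:
        for line in split:
            for key, value in mapper(line):
                partitions[hash(key) % num_reducers][key].append(value)

    # 3. Each reduce task sorts the keys in its partition and calls the
    #    reducer once per key with all of that key's values.
    result: dict[str, int] = {}
    for partition in partitions:
        for key in sorted(partition):
            result[key] = reducer(key, partition[key])
    return result


if __name__ == "__main__":
    counts = run_job(["the quick brown fox", "the lazy dog", "The end"],
                     word_count_mapper, sum_reducer)
    print(counts["the"])  # 3
```

As in Hadoop, keys are sorted only within each reducer's partition, so the merged dictionary is not globally ordered. A real job writes one output file per reducer instead.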

Hadoop Flavors and Compatibility Issues. Different Hadoop versions are not necessarily compatible with each other. To deploy a MapReduce job on any Hadoop cluster, you must therefore ensure that the core Hadoop libraries used by the client code are identical to those found throughout the entire cluster. Roughly speaking, client code that will be deployed as an executable jar should use exactly the same jars as the server nodes on the cluster. Protocol.

For the Impatient, Part 1. Kianwilcox/hbase-scalding. Video Lecture: Intro to Scalding by @posco and @argyris. Flume and HBase. Cassandra vs HBase. NoSQL: Large scale data processing, HBase vs Cassandra. NoSQL: realtime querying/aggregating millions of records, Hadoop? HBase? Cassandra? HBase vs Cassandra: why we moved.

My team is currently working on a brand new product: the forthcoming MMO www.FightMyMonster.com.

This has given us the luxury of building against a NoSQL database, which means we can put the horrors of MySQL sharding and expensive scalability behind us. Recently a few people have been asking why we seem to have changed our preference from HBase to Cassandra. I can confirm that the change is real. We have in fact almost finished porting our code to Cassandra, and here I will try to explain why. For those who are new to NoSQL, I will write in a following post about why I think we will see a seismic shift from SQL to NoSQL over the coming years. That shift will be just as important as the move to cloud computing.

Understanding HBase and BigTable - Jimbojw.com. The hardest part about learning HBase (the open source implementation of Google's BigTable) is just wrapping your mind around the concept of what it actually is.

I find it rather unfortunate that these two great systems contain the words table and base in their names, which tends to confuse RDBMS-indoctrinated individuals (like myself). This article aims to describe these distributed data storage systems from a conceptual standpoint. After reading it, you should be better able to make an educated decision about when you might want to use HBase and when you'd be better off with a "traditional" database.
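Following Google's Bigtable paper, the conceptual model is usually summarized as a sparse, distributed, persistent, multidimensional sorted map indexed by row key, column key, and timestamp. The Python sketch below models only the sparse, sorted, and versioned parts, in memory. The class name `SortedTableSketch`, its methods, and the example row keys are hypothetical and are not the HBase client API.

```python
import bisect
from typing import Optional


class SortedTableSketch:
    """Toy in-memory model of the Bigtable/HBase data model.

    Cells are addressed by (row key, "family:qualifier" column, timestamp).
    Row keys are kept in lexicographic order, and only cells that have been
    written take up space. Nothing is distributed or persisted, and the
    class and method names are hypothetical (not the HBase client API).
    """

    def __init__(self) -> None:
        self._row_keys: list[str] = []  # always kept sorted
        self._rows: dict[str, dict[str, dict[int, bytes]]] = {}

    def put(self, row: str, column: str, timestamp: int, value: bytes) -> None:
        """Store one versioned cell; the row is created on its first write."""
        if row not in self._rows:
            bisect.insort(self._row_keys, row)
            self._rows[row] = {}
        self._rows[row].setdefault(column, {})[timestamp] = value

    def get(self, row: str, column: str,
            timestamp: Optional[int] = None) -> Optional[bytes]:
        """Return the newest version at or before `timestamp` (newest if None)."""
        versions = self._rows.get(row, {}).get(column)
        if not versions:
            return None
        eligible = [ts for ts in versions if timestamp is None or ts <= timestamp]
        return versions[max(eligible)] if eligible else None

    def scan(self, start_row: str, stop_row: str) -> list[str]:
        """Return the row keys in [start_row, stop_row), in sorted order."""
        lo = bisect.bisect_left(self._row_keys, start_row)
        hi = bisect.bisect_left(self._row_keys, stop_row)
        return self._row_keys[lo:hi]


if __name__ == "__main__":
    table = SortedTableSketch()
    table.put("com.example/about", "contents:html", 1, b"<p>v1</p>")
    table.put("com.example/about", "contents:html", 2, b"<p>v2</p>")
    table.put("com.example/index", "anchor:home", 1, b"Example")
    table.put("org.sample/", "contents:html", 1, b"<p>hi</p>")
    print(table.get("com.example/about", "contents:html"))  # b'<p>v2</p>'
    print(table.scan("com.", "org."))  # ['com.example/about', 'com.example/index']
```

The `com.example/index` row has no `contents:html` cell at all. Unlike rows in a relational table, rows here need not share columns, and `get` simply returns `None` for a missing cell. Scanning a key range is cheap because rows are stored in key order, which is one reason row-key design matters in HBase.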