Video. Video is an electronic medium for the recording, copying and broadcasting of moving visual images.

History. Video technology was first developed for cathode ray tube (CRT) television systems, but several new technologies for video display devices have since been invented. Charles Ginsburg led an Ampex research team that developed one of the first practical video tape recorders (VTR). In 1951 the first video tape recorder captured live images from television cameras by converting the camera's electrical impulses and saving the information onto magnetic video tape.

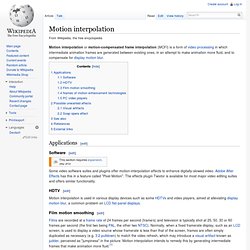

Motion compensation. Visualization of MPEG block motion compensation.

Blocks that moved from one frame to the next are shown as white arrows, making the motions of the different platforms and the character clearly visible. Motion compensation is an algorithmic technique employed in the encoding of video data for video compression, for example in the generation of MPEG-2 files. Motion compensation describes a picture in terms of the transformation of a reference picture to the current picture. The reference picture may be previous in time or even from the future. When images can be accurately synthesised from previously transmitted/stored images, the compression efficiency can be improved.

Motion interpolation. Motion interpolation or motion-compensated frame interpolation (MCFI) is a form of video processing in which intermediate animation frames are generated between existing ones, in an attempt to make animation more fluid, and to compensate for display motion blur.
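As a rough illustration of the block motion compensation described above (not of motion interpolation), the following sketch searches a reference frame for the block that best matches a block of the current frame, using the sum of absolute differences (SAD) as the matching cost. The function names, the 4x4 block size, the search range of 2 pixels and the tiny synthetic frames are all assumptions made for this example; real encoders use far more elaborate search strategies.

```python
def sad(ref, cur, rx, ry, cx, cy, n):
    """Sum of absolute differences between an n x n block of the reference
    frame at (rx, ry) and an n x n block of the current frame at (cx, cy)."""
    return sum(
        abs(ref[ry + j][rx + i] - cur[cy + j][cx + i])
        for j in range(n)
        for i in range(n)
    )


def find_motion_vector(ref, cur, cx, cy, n=4, search=2):
    """Exhaustively search +/- `search` pixels around (cx, cy) in the
    reference frame and return (dx, dy, cost) of the best-matching block."""
    height, width = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            rx, ry = cx + dx, cy + dy
            # Skip candidate blocks that fall outside the reference frame.
            if rx < 0 or ry < 0 or rx + n > width or ry + n > height:
                continue
            cost = sad(ref, cur, rx, ry, cx, cy, n)
            if best is None or cost < best[2]:
                best = (dx, dy, cost)
    return best


def make_frame(size, x0, y0):
    """Hypothetical test frame: black background with a 2x2 white square
    whose top-left corner is at (x0, y0)."""
    frame = [[0] * size for _ in range(size)]
    for y in range(y0, y0 + 2):
        for x in range(x0, x0 + 2):
            frame[y][x] = 255
    return frame


reference = make_frame(8, 2, 2)   # square at x = 2..3
current = make_frame(8, 3, 2)     # same square moved one pixel to the right
print(find_motion_vector(reference, current, cx=2, cy=2))
```

A cost of zero means the current block can be described entirely by a motion vector into the reference picture; an encoder would otherwise also have to store the residual difference between the two blocks.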

Applications. Software. Some video software suites and plug-ins offer motion-interpolation effects to enhance digitally slowed video. Adobe After Effects provides this in a feature called "Pixel Motion". The effects plug-in Twixtor is available for most major video editing suites and offers similar functionality.

Raster graphics.

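Suppose there is a smiley face in the top-left corner of an RGB bitmap image. When it is enlarged, the smiley face looks like the large one on the right. Each square represents a pixel. Zooming in further makes each pixel look larger still, and shows that its color is formed by adding together values of red, green, and blue. In computer science, a raster graphics image, or bitmap, is a data structure that represents a generally rectangular grid of pixels, or points of color, for display on a monitor, paper, or another medium. A bitmap corresponds bit for bit with the image displayed on the screen, generally in the same format used for storage in the display's video memory, or as a device-independent bitmap. The printing industry refers to raster graphics as continuous tone (contone) and to vector graphics as line work.

Interleaving. Interleaving may refer to: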

CRT. Cutaway rendering of a color CRT:

1. Three electron guns (for red, green, and blue phosphor dots)
2. Electron beams
3. Focusing coils
4. Deflection coils
5. Anode connection

Overscan. Overscan is the term used to describe the situation when not all of a televised image is present on a viewing screen.

It exists because television sets from the 1930s through the 1990s were highly variable in how the video image was positioned within the borders of the cathode ray tube (CRT) screen. The solution was to have the monitor show less than the full image, i.e. with the edges "outside" the viewing area of the tube. In this way the image seen never showed black borders caused by improper centering, non-linearity in the scanning circuits, or variations in power supply voltage, any of which could cause the image to "shrink" and reveal the edge of the picture.

With the decline of CRT displays, this issue has largely (but not completely) disappeared.

YUV pixel formats. YUV formats fall into two distinct groups: the packed formats, where Y, U (Cb) and V (Cr) samples are packed together into macropixels that are stored in a single array, and the planar formats, where each component is stored as a separate array and the final image is a fusion of the three separate planes.
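The difference between the packed and planar groups can be sketched with one image line. The example below assumes a 4:2:2 layout (one U and one V sample per two Y samples) and the YUY2 byte order Y0 U0 Y1 V0 for the packed macropixel; both the choice of format and the function names are assumptions for illustration, not something the text above specifies.

```python
def pack_yuy2_line(y, u, v):
    """Interleave three planar arrays (one line each) into packed YUY2
    macropixels of the form Y0 U0 Y1 V0."""
    if len(y) % 2 or len(u) != len(y) // 2 or len(v) != len(u):
        raise ValueError("expected an even Y width and half-width U/V lines")
    packed = bytearray()
    for i in range(len(u)):
        # One macropixel covers two horizontally adjacent pixels.
        packed += bytes((y[2 * i], u[i], y[2 * i + 1], v[i]))
    return bytes(packed)


def unpack_yuy2_line(packed):
    """Split a packed YUY2 line back into separate Y, U and V arrays."""
    if len(packed) % 4:
        raise ValueError("a YUY2 line is a whole number of 4-byte macropixels")
    return packed[0::2], packed[1::4], packed[3::4]


y_plane = bytes([10, 11, 12, 13])   # four luma samples
u_plane = bytes([20, 21])           # two Cb samples
v_plane = bytes([30, 31])           # two Cr samples

line = pack_yuy2_line(y_plane, u_plane, v_plane)
print(list(line))  # [10, 20, 11, 30, 12, 21, 13, 31]
```

The planar arrays and the packed array hold the same samples; only the memory layout differs, which is why conversion in either direction is a pure reordering.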

In the diagrams below, the numerical suffix attached to each Y, U or V sample indicates the sampling position across the image line. For example, V0 indicates the leftmost V sample and Yn indicates the Y sample at the (n+1)th pixel from the left. Subsampling intervals in the horizontal and vertical directions may merit some explanation. The horizontal subsampling interval describes how frequently across a line a sample of that component is taken, while the vertical interval describes on which lines samples are taken. For YVU9, for example, the vertical subsampling interval is 4, which indicates that U and V samples are only taken on every fourth line of the original image.

Chrominance.

Luminance only, chrominance only, and full color image.

History. An early scheme used two channels output from a single television transmitter, one transmitting the predominating color (signal T) and the other the mean brilliance (signal t), so that the broadcast could be received not only by color television receivers provided with the necessary, more expensive equipment, but also by the ordinary type of television receiver, which is more numerous and less expensive and reproduces pictures in black and white only. Previous schemes for color television systems, which were incompatible with existing monochrome receivers, transmitted RGB signals in various ways.

Television standards. In analog television, chrominance is encoded into a video signal using a subcarrier frequency.

MPEG. Sub Groups. ISO/IEC JTC1/SC29/WG11 (Coding of moving pictures and audio) has the following Sub Groups (SG):[6] Requirements, Systems, Video, Audio, 3D Graphics Compression, Test, and Communication.

Cooperation with other groups.

MPEG-2 system. MPEG-2 (aka H.222/H.262 as defined by the ITU) is a standard for "the generic coding of moving pictures and associated audio information".[1] It describes a combination of lossy video compression and lossy audio data compression methods, which permit storage and transmission of movies using currently available storage media and transmission bandwidth.

Main characteristics. MPEG-2 is widely used as the format of digital television signals that are broadcast by terrestrial (over-the-air), cable, and direct broadcast satellite TV systems. It also specifies the format of movies and other programs that are distributed on DVD and similar discs. TV stations, TV receivers, DVD players, and other equipment are often designed to this standard.

PSI. Program-specific information (PSI) is metadata about a program (channel) and part of an MPEG transport stream. The PSI data as defined by ISO/IEC 13818-1 (MPEG-2 Part 1: Systems) includes four tables: PAT (program association table), CAT (conditional access table), PMT (program map table), and NIT (network information table). PSI is carried in the form of a table structure.

Each table structure is broken into sections and can span multiple transport stream packets. An adaptation field may also occur in TS packets carrying PSI data. The sections comprising the PAT and CAT tables are associated with predefined PIDs, as explained in their respective descriptions below.

DSM-CC. Digital storage media command and control (DSM-CC) is a toolkit for developing control channels associated with MPEG-1 and MPEG-2 streams. It is defined in part 6 of the MPEG-2 standard (Extensions for DSM-CC) and uses a client/server model connected via an underlying network (carried via the MPEG-2 multiplex or independently if needed).

DSM-CC may be used for controlling video reception, providing features normally found on video cassette recorders (VCRs), such as fast-forward, rewind, and pause. It may also be used for a wide variety of other purposes, including packet data transport. It is defined by a series of weighty standards, principally MPEG-2 ISO/IEC 13818-6 (part 6 of the MPEG-2 standard). These specifications include numerous implementation options.

PES. A typical method of transmitting elementary stream data from a video or audio encoder is to first create PES packets from the elementary stream data and then to encapsulate these PES packets inside Transport Stream (TS) packets or Program Stream (PS) packets. The TS packets can then be multiplexed and transmitted using broadcasting techniques, such as those used in ATSC and DVB. Transport Streams and Program Streams are each logically constructed from PES packets.
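The encapsulation of a PES packet inside TS packets can be sketched as follows. The text above does not give the packet layout, so the details used here are assumptions based on a common reading of ISO/IEC 13818-1 and should be checked against the standard: 188-byte TS packets, a 4-byte header starting with sync byte 0x47, a 13-bit PID, a payload_unit_start_indicator on the first packet, a 4-bit continuity counter, and adaptation-field stuffing to fill the last packet. The PES packet is treated as opaque bytes, and the function names are hypothetical.

```python
TS_PACKET_SIZE = 188      # assumed fixed TS packet size
TS_HEADER_SIZE = 4
SYNC_BYTE = 0x47


def packetize_pes(pes, pid, first_cc=0):
    """Split one PES packet (opaque bytes) into 188-byte TS packets."""
    if not 0 <= pid <= 0x1FFF:
        raise ValueError("PID must fit in 13 bits")
    max_payload = TS_PACKET_SIZE - TS_HEADER_SIZE
    packets, offset, cc = [], 0, first_cc & 0x0F
    while offset < len(pes):
        chunk = pes[offset:offset + max_payload]
        stuffing = max_payload - len(chunk)
        pusi = 1 if offset == 0 else 0            # marks the start of the PES packet
        afc = 0b11 if stuffing else 0b01          # adaptation field + payload, or payload only
        header = bytes([
            SYNC_BYTE,
            (pusi << 6) | (pid >> 8),
            pid & 0xFF,
            (afc << 4) | cc,
        ])
        adaptation = b""
        if stuffing:
            # The length byte counts the bytes that follow it in the field.
            af_len = stuffing - 1
            adaptation = bytes([af_len])
            if af_len:
                adaptation += b"\x00" + b"\xff" * (af_len - 1)  # flags byte, then stuffing
        packets.append(header + adaptation + chunk)
        offset += len(chunk)
        cc = (cc + 1) & 0x0F
    return packets


def extract_payload(packet):
    """Return the payload bytes of a TS packet built by packetize_pes."""
    afc = (packet[3] >> 4) & 0x3
    if afc == 0b01:
        return packet[TS_HEADER_SIZE:]
    if afc == 0b11:
        return packet[TS_HEADER_SIZE + 1 + packet[4]:]
    return b""


pes_packet = bytes(range(256)) * 2                 # stand-in for a real PES packet
ts_packets = packetize_pes(pes_packet, pid=0x100)
print(len(ts_packets), {len(p) for p in ts_packets})
```

Concatenating the payloads of the resulting TS packets in order gives back the original PES bytes, which is the property a demultiplexer relies on.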

PES packets shall be used to convert between Transport Streams and Program Streams. In some cases the PES packets need not be modified when performing such conversions. PES packets may be much larger than the size of a Transport Stream packet.[3]

MPEG-2 video compression. Video compression picture types. DCT.

Entropy coding. Entropy encoding is a coding method in which the length of the code that represents a symbol depends on the probability of that symbol occurring. Typically, an entropy encoder replaces codes of equal length for all symbols with codes of different lengths, each proportional to the negative logarithm of the symbol's probability. The most frequent symbol therefore gets the shortest code. According to Shannon's theorem, the optimal code length is −log_b(P), where b is the base of the output code (b = 2 for binary coding) and P is the probability of the symbol. The three most commonly used entropy coding techniques are Huffman coding, range coding, and arithmetic coding.

Huffman coding. Huffman tree generated from the exact frequencies of the text "this is an example of a huffman tree". The frequencies and codes of each character are below. Encoding the sentence with this code requires 135 bits, as opposed to 288 bits if 36 characters of 8 bits were used.
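The 135-bit figure can be reproduced with a short sketch that builds Huffman code lengths from the character frequencies of the example sentence. The function names are chosen for this illustration; only the code lengths are computed, since the exact bit patterns depend on how ties are broken while the total encoded size does not.

```python
import heapq
import math
from collections import Counter
from itertools import count


def huffman_code_lengths(text):
    """Return {symbol: code length in bits} for a Huffman code built
    from the exact symbol frequencies of `text`."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        return {next(iter(freq)): 1}
    tie = count()  # unique tie-breaker so the heap never compares dicts
    heap = [(weight, next(tie), {sym: 0}) for sym, weight in freq.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        merged = {sym: depth + 1 for sym, depth in {**left, **right}.items()}
        heapq.heappush(heap, (w1 + w2, next(tie), merged))
    return heap[0][2]


def encoded_bits(text):
    """Total number of bits needed to encode `text` with its Huffman code."""
    lengths = huffman_code_lengths(text)
    return sum(n * lengths[sym] for sym, n in Counter(text).items())


def ideal_code_length(p, base=2):
    """Shannon's optimal code length, -log_b(P), for a symbol of probability p."""
    return -math.log(p, base)


TEXT = "this is an example of a huffman tree"
print(encoded_bits(TEXT), len(TEXT) * 8)  # prints: 135 288
```

Huffman codes use a whole number of bits per symbol, so individual code lengths can differ from the ideal −log_b(P) value; range coding and arithmetic coding can get closer to that ideal.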

MPEG PS. Program streams are used on DVD-Video discs and HD DVD video discs, but with some restrictions and extensions.[9][10] The filename extensions are VOB and EVO respectively.

Coding structure. Program streams are created by combining one or more Packetized Elementary Streams (PES), which have a common time base, into a single stream. The program stream is designed for reasonably reliable media such as disks, in contrast to the MPEG transport stream, which is intended for data transmission in which loss of data is likely.

Program streams have variable-size records and make minimal use of start codes, which would make over-the-air reception difficult, but they have less overhead.

MPEG TS. Diff. TS vs PS.