LING 572. LIBSVM -- A Library for Support Vector Machines. Chih-Chung Chang and Chih-Jen Lin. Version 3.20 released on November 15, 2014.

This release makes some minor fixes. LIBSVM Tools provides many extensions of LIBSVM; check it if you need functions that LIBSVM does not support. www.ee.columbia.edu/~stanchen/papers/h015j.pdf. faculty.washington.edu/fxia/courses/LING572/maxent_berger96.pdf. faculty.washington.edu/fxia/courses/LING572/maxent_adwait97.pdf. AIxploratorium - Decision Trees. courses.washington.edu/ling572/papers/joachims1997.pdf. courses.washington.edu/ling572/papers/mccallum1998_AAAI.pdf.

Conditional random field. Conditional random fields (CRFs) are a class of statistical modeling methods often applied in pattern recognition and machine learning, where they are used for structured prediction.

Whereas an ordinary classifier predicts a label for a single sample without regard to "neighboring" samples, a CRF can take context into account; e.g., the linear chain CRF popular in natural language processing predicts sequences of labels for sequences of input samples. CRFs are a type of discriminative undirected probabilistic graphical model. They are used to encode known relationships between observations and construct consistent interpretations. They are often used for labeling or parsing of sequential data, such as natural language text or biological sequences,[1] and in computer vision.[2] Specifically, CRFs find applications in shallow parsing,[3] named entity recognition,[4] and gene finding, among other tasks, as an alternative to the related hidden Markov models.
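To make the contrast between per-sample classification and sequence labeling concrete, here is a minimal sketch of the decoding step for a linear chain model. It is not a full CRF: the emission and transition scores are hand-set, hypothetical numbers (a real CRF would learn them as feature weights), and the `viterbi` function name and the labels `N` and `V` are assumptions made for illustration.

```python
from typing import Dict, List, Tuple


def viterbi(emission: List[Dict[str, float]],
            transition: Dict[Tuple[str, str], float],
            labels: List[str]) -> List[str]:
    """Return the highest-scoring label sequence for a linear chain.

    emission[t][y] is a score for label y at position t;
    transition[(a, b)] is a score for label a being followed by label b.
    """
    # best[t][y]: best total score of any label path ending in y at position t
    best = [{y: emission[0][y] for y in labels}]
    back = []  # back[t-1][y]: best predecessor of label y at position t
    for t in range(1, len(emission)):
        scores, pointers = {}, {}
        for y in labels:
            prev = max(labels, key=lambda p: best[-1][p] + transition[(p, y)])
            scores[y] = best[-1][prev] + transition[(prev, y)] + emission[t][y]
            pointers[y] = prev
        best.append(scores)
        back.append(pointers)
    # Start from the best final label and follow the back-pointers.
    path = [max(labels, key=lambda y: best[-1][y])]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    return path[::-1]


labels = ["N", "V"]
# Hypothetical per-position scores; position 1 slightly prefers "N" in isolation.
emission = [{"N": 2.0, "V": 0.5}, {"N": 1.0, "V": 0.8}, {"N": 2.0, "V": 0.0}]
# Hypothetical transition scores that reward alternating labels.
transition = {("N", "N"): -1.0, ("N", "V"): 1.0,
              ("V", "N"): 1.0, ("V", "V"): -1.0}

# An "ordinary classifier" picks the best label at each position independently.
independent = [max(labels, key=lambda y: e[y]) for e in emission]
joint = viterbi(emission, transition, labels)
print(independent)  # ['N', 'N', 'N']
print(joint)        # ['N', 'V', 'N']
```

The middle label changes once neighboring labels are scored jointly, which is the sense in which a linear chain model "takes context into account".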

About the Chi-Square Test. Generally speaking, the chi-square test is a statistical test used to examine differences with categorical variables.
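As a rough illustration of what the test computes, the sketch below calculates the Pearson chi-square statistic for a contingency table of two categorical variables. The function name `chi_square` and the counts in `table` are made up for the example; they do not come from the linked page.

```python
from typing import List, Tuple


def chi_square(table: List[List[float]]) -> Tuple[float, int]:
    """Return (Pearson chi-square statistic, degrees of freedom) for a table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, dof


# Hypothetical counts: rows are two groups, columns are two stated preferences.
table = [[30, 10],
         [20, 40]]
stat, dof = chi_square(table)
print(round(stat, 3), dof)  # 16.667 1
```

With one degree of freedom the 5% critical value is about 3.84, so a statistic this large would lead to rejecting the hypothesis that the two variables are independent.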

There are a number of features of the social world we characterize through categorical variables - religion, political preference, etc. To examine hypotheses using such variables, use the chi-square test. Curse of dimensionality. The curse of dimensionality refers to various phenomena that arise when analyzing and organizing data in high-dimensional spaces (often with hundreds or thousands of dimensions) that do not occur in low-dimensional settings such as the three-dimensional physical space of everyday experience.
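One such phenomenon is that distances between random points become nearly indistinguishable as the number of dimensions grows. The sketch below is an illustration under stated assumptions, not a result from the linked article: it draws points uniformly from the unit cube and measures how far apart the nearest and farthest points are relative to the nearest one. The function name `relative_contrast`, the sample size, and the seed are arbitrary choices.

```python
import math
import random


def relative_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """Return (max - min) / min over distances from one random query point
    to n_points random points, all drawn uniformly from the unit cube."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    query = [rng.random() for _ in range(dim)]
    dists = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)


for dim in (2, 10, 100, 1000):
    print(dim, relative_contrast(dim))
```

In low dimensions the nearest point is much closer than the farthest one, so the ratio is large; in high dimensions it shrinks toward zero, which is one reason nearest-neighbor style methods degrade there.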

The term curse of dimensionality was coined by Richard E. Bellman when considering problems in dynamic optimization.[1][2]

Latent semantic analysis. Latent semantic analysis (LSA) is a technique in natural language processing, in particular in vectorial semantics, of analyzing relationships between a set of documents and the terms they contain by producing a set of concepts related to the documents and terms.

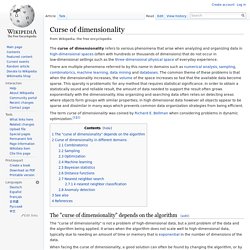

LSA assumes that words that are close in meaning will occur in similar pieces of text. A matrix containing word counts per paragraph (rows represent unique words and columns represent each paragraph) is constructed from a large piece of text, and a mathematical technique called singular value decomposition (SVD) is used to reduce the number of columns while preserving the similarity structure among rows.

Singular value decomposition. Visualization of the SVD of a two-dimensional, real shearing matrix M.

First, we see the unit disc in blue together with the two canonical unit vectors. We then see the action of M, which distorts the disc to an ellipse. The SVD decomposes M into three simple transformations: an initial rotation V*, a scaling Σ along the coordinate axes, and a final rotation U. The lengths σ1 and σ2 of the semi-axes of the ellipse are the singular values of M, namely Σ1,1 and Σ2,2.

LING 571. Prover9 Download. Prover9, Mace4, and several related programs come packaged in a system called LADR (Library for Automated Deduction Research).

If you install one of these LADR packages, you will get command-line programs. (The programs are run by typing commands to a command prompt, terminal, or shell.) Welcome to FrameNet. About WordNet. Benoît Sagot - WOLF. WOLF (Wordnet Libre du Français, "Free French WordNet") is a free lexical semantic resource (a wordnet) for French.

WOLF was built from the Princeton WordNet (PWN) and various multilingual resources (Sagot and Fišer 2008a, Sagot and Fišer 2008b, Fišer and Sagot 2008). Polysemous lexemes were handled with an approach based on word alignment of a parallel corpus in five languages. The extracted multilingual lexicon was semantically disambiguated using the wordnets of the languages involved. In addition, a bilingual approach was sufficient to build new entries from monosemous words. For this, we extracted bilingual lexicons from Wikipedia and from thesauri. In 2009, specific work was carried out on adverbial synsets (Sagot, Fort and Venant 2009a, Sagot, Fort and Venant 2009b). WOLF contains all the synsets of the Princeton WordNet, including those for which no French lexeme is known.

Longman English Dictionary Online. Bodenstab_efficient_cyk. Parser care package. Cky_control. Start_up_package_package_demo. Start_up_package. Parser_writing_help. LING 567.

The Matrix. The LinGO Grammar Matrix is developed at the University of Washington in the context of the DELPH-IN Consortium, by Emily M. Bender and colleagues. This material is based upon work supported by the National Science Foundation under Grant No. BCS-0644097. Additional support for Grammar Matrix development came from a gift to the Turing Center from the Utilika Foundation.

ODIN - The Online Database of Interlinear Text. ODIN, the Online Database of INterlinear text, is a repository of Interlinear Glossed Text (IGT) extracted mainly from scholarly linguistic papers.

The repository is both broad-coverage, in that it contains data for a variety of the world's languages (limited only by what data is available and what has been discovered), and rich, in that all data contained in the repository has been subject to linguistic analysis. IGT is a standard method within the field of linguistics for presenting language data, with (1) being a typical example. Common in IGT is a phonetic transcription of the language in question (line 1), a morphosyntactic analysis which includes a morpheme-by-morpheme gloss and grammatical information of varying sorts and granularity (line 2), and a free translation (line 3).

(1) apiya=ya=at QATAMMA=pat tapar-ta
    at that time=CONJ=3SG.N in the same way rule-PAST
    "And at the same time he ruled it in the very same manner."

ODIN is still under construction.

LkbTop - Deep Linguistic Processing with HPSG (DELPH-IN). The LKB system is a grammar and lexicon development environment for use with unification-based linguistic formalisms. While not restricted to HPSG, the LKB implements the DELPH-IN reference formalism of typed feature structures (jointly with other DELPH-IN software using the same formalism). The primary documentation on the LKB is provided by the book Implementing Typed Feature Structure Grammars. Excerpts from the book provide a tour of the LKB (although see LkbInstallation for revised installation instructions) and the user manual. These pages are intended to provide documentation for aspects of the LKB not covered by the book, including recent developments. The use of a wiki forum is intended to enable LKB developers and users alike to contribute to the available on-line documentation.

The LKB has been in active use since around 1991, with a substantially new version in use from about 1997. Other pages can be found by entering Lkb in the wiki search box.

Knowledge Engineering for NLP: Testsuite specifications. Preliminaries. If your language uses non-ASCII characters, you'll need to either settle on a system of transliteration (preferably both non-lossy and standard for the language, but at least the former), or figure out an input method for the standard orthography in Emacs as well as how to create Unicode files. If your language has complex morphophonology, you'll need to work out an underlying representation to use for your testsuite (and lexicon/lexical rules). If you're not a native speaker of your language, you might try to find out whether there are native speakers you could contact for judgments on examples. (Is the language taught at UW?

Test suites: General guidelines. Your test suite should include both grammatical and ungrammatical examples, and ideally more ungrammatical examples than grammatical ones.

Grammatical phenomena. This section describes the grammatical phenomena we expect to cover, and the type of examples you should use to illustrate them. Pronouns.