BigQuery | Google. Web Caching/Accelerating/Proxy/Etc | Tech Topics. !MAY BE RELATED! | Scaling/Big Data/Etc. Hadoop. Big Data analytics with Hive and iReport.

Each episode of J.J. Abrams’ TV series Person of Interest starts with the following narration from Mr. Finch, one of the leading characters: “You are being watched. The government has a secret system–a machine that spies on you every hour of every day. I know because…I built it.” Of course, we technical people know better. In the JCG article “Hadoop Modes Explained – Standalone, Pseudo Distributed, Distributed”, JCG partner Rahul Patodi explained how to set up Hadoop. Hypertable - Big Data. Big Performance. Hypertable Routs HBase in Performance Test - HBase Overwhelmed by Garbage Collection. This is a guest post by Doug Judd, original creator of Hypertable and the CEO of Hypertable, Inc.

Hypertable delivers 2X better throughput in most tests. HBase fails the 41 and 167 billion record insert tests, overwhelmed by garbage collection. Both systems deliver similar results for the random read uniform test. We recently conducted a test comparing the performance of Hypertable (@hypertable) version 0.9.5.5 to that of HBase (@HBase) version 0.90.4 (CDH3u2) running Zookeeper 3.3.4.

In this post, we summarize the results and offer explanations for the discrepancies. For the full test report, see Hypertable vs. HBase II. Introduction: Hypertable and HBase are both open source, scalable databases modeled after Google's proprietary Bigtable database. OS: CentOS 6.1; CPU: 2X AMD C32 Six Core Model 4170 HE 2.1 GHz; RAM: 24GB 1333 MHz DDR3; disk: 4X 2TB SATA Western Digital RE4-GP WD2002FYPS. The HDFS NameNode and the Hypertable and HBase masters were run on test01. Random Write Random Read Zipfian Uniform. MapReduce Patterns, Algorithms, and Use Cases « Highly Scalable. In this article I digested a number of MapReduce patterns and algorithms to give a systematic view of the different techniques that can be found on the web or in scientific articles.

Several practical case studies are also provided. All descriptions and code snippets use Hadoop’s standard MapReduce model with Mappers, Reducers, Combiners, Partitioners, and sorting. MapReduce & Hadoop API revised. Nowadays, Hadoop has become the key technology behind what has come to be known as “Big Data”.

It has certainly worked hard to earn this position. It is mature technology that has been used successfully in countless projects. But now, with experience behind us, it is time to take stock of the foundations upon which it is based, particularly its interface. This article discusses some of the weaknesses of both MapReduce and Hadoop, which we, at Datasalt, shall attempt to resolve with an open-source project that we will soon be releasing. MapReduce. Scaling the Druid Data Store » Metamarkets. Druid, Part Deux: Three Principles for Fast, Distributed OLAP » Metamarkets. William Hertling's Thoughtstream. When I'm not writing science fiction novels, I work on web apps for a largish company*.

This week was pretty darn exciting: we learned on Monday afternoon that we needed to scale up to a peak volume of 10,000 simultaneous visitors by Thursday morning at 5:30am. This post will be about what we did, what we learned, and what worked. A little background: our stack is nginx, Ruby on Rails, and MySQL. The web app is a custom travel guide. The user comes into our app and either imports a TripIt itinerary, or selects a destination and enters their travel details. Our site was in beta, and we had been getting a few hundred visitors a day.

What we had going for us: After some experimentation, we decided that we couldn't run more than 125 threads on a single JMeter instance. We quickly learned that: After making these changes, and letting the app servers scale to 16 servers, we saw that we were now DB bound. Why scaling horizontally is better: Fat is the new Fit. Scaling, in terms of the internet, is a product or service’s ability to expand exponentially to meet need.

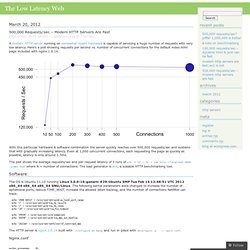

There are two types of scalability: vertical scalability is the traditional and easiest way to expand – by upgrading the hardware you already own, and horizontal scalability is where you create a network of hardware which can expand (and contract) to suit demand at a given time. Let’s all face it: the internet is only going to get bigger. Recent events such as the uprisings in Egypt and Libya have proved without doubt that the third world is beginning to utilise the internet in the same way the west has been. Scaling GitHub. 500,000 requests/sec – Modern HTTP servers are fast « The Low Latency Web. A modern HTTP server running on somewhat recent hardware is capable of servicing a huge number of requests with very low latency.

Here’s a plot showing requests per second vs. number of concurrent connections for the default index.html page included with nginx 1.0.14. With this particular hardware & software combination the server quickly reaches over 500,000 requests/sec and sustains that with gradually increasing latency. Even at 1,000 concurrent connections, each requesting the page as quickly as possible, latency is only around 1.5ms. The plot shows the average requests/sec and per-request latency of 3 runs of wrk -t 10 -c N -r 10m where N = number of connections. Software.
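As a rough sanity check on the figures quoted above, the relationship between concurrency, latency, and throughput can be sketched with Little's law (throughput ≈ concurrent connections / average latency). This is only an approximation that assumes a closed-loop load generator where each connection issues its next request as soon as the previous one completes; the function name `implied_throughput` is hypothetical and not part of the original post or of wrk.

```python
def implied_throughput(concurrent_connections: int, latency_seconds: float) -> float:
    """Estimate requests/sec from Little's law: N = X * R, so X = N / R.

    Assumes every connection always has exactly one request in flight,
    which is an idealisation of how a closed-loop benchmark behaves.
    """
    if latency_seconds <= 0:
        raise ValueError("latency must be positive")
    return concurrent_connections / latency_seconds


# Figures quoted in the excerpt: 1,000 connections at roughly 1.5 ms latency.
estimate = implied_throughput(1000, 0.0015)
print(f"Implied throughput: {estimate:,.0f} requests/sec")
```

Under these assumptions the estimate lands in the same range as the "over 500,000 requests/sec" reported in the excerpt, which suggests the quoted latency and throughput numbers are mutually consistent.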